MPI-AMRVAC 3.0: Updates to an open-source simulation framework

![aa45359-22-fig4[1]](https://www.tech-cc.eu/tcc/wp-content/uploads/2023/12/aa45359-22-fig41-678x381.jpg)

Received: 2 November 2022 Accepted: 12 March 2023

Abstract

Context. Computational astrophysics nowadays routinely combines grid-adaptive capabilities with modern shock-capturing, high resolution spatio-temporal integration schemes in challenging multidimensional hydrodynamic and magnetohydrodynamic (MHD) simulations. A large, and still growing, body of community software exists, and we provide an update on recent developments within the open-source MPI-AMRVAC code.

Aims. Complete with online documentation, the MPI-AMRVAC 3.0 release includes several recently added equation sets and offers many options to explore and quantify the influence of implementation details. While showcasing this flexibility on a variety of hydrodynamic and MHD tests, we document new modules of direct interest for state-of-the-art solar applications.

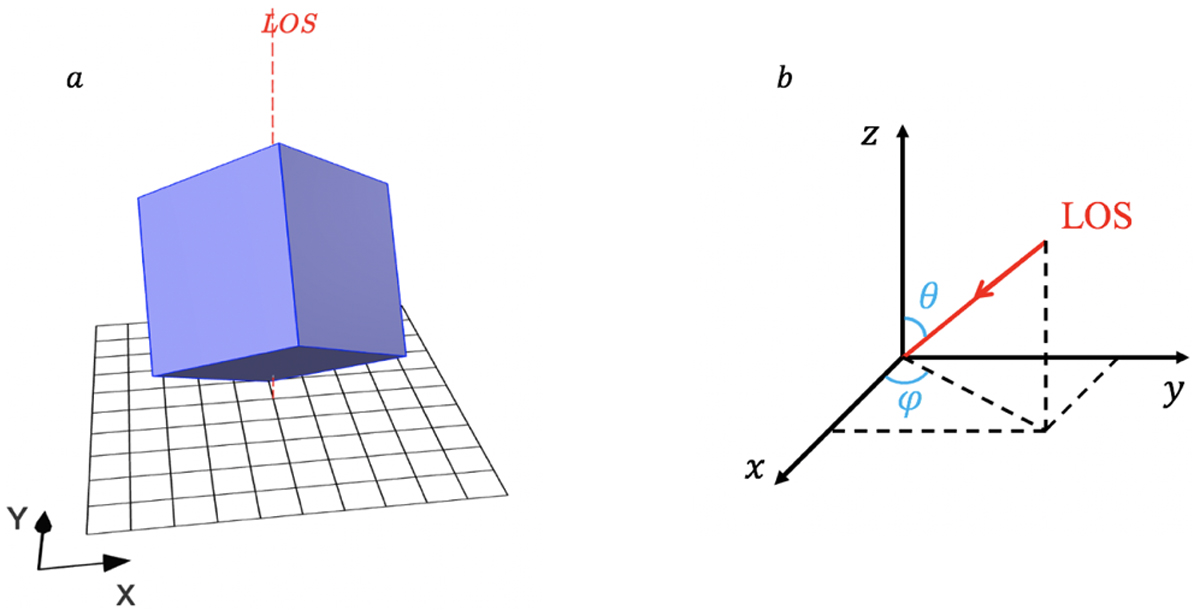

Methods. Test cases address how higher-order reconstruction strategies impact long-term simulations of shear layers, with and without gas-dust coupling effects, how runaway radiative losses can transit to intricate multi-temperature, multiphase dynamics, and how different flavors of spatio-temporal schemes and/or magnetic monopole control produce overall consistent MHD results in combination with adaptive meshes. We demonstrate the use of super-time-stepping strategies for specific parabolic terms and give details on all the implemented implicit-explicit integrators. A new magneto-frictional module can be used to compute force-free magnetic field configurations or for data-driven time-dependent evolutions, while the regularized-Biot-Savart-law approach can insert flux ropes in 3D domains. Synthetic observations of 3D MHD simulations can now be rendered on the fly, or in post-processing, in many spectral wavebands.

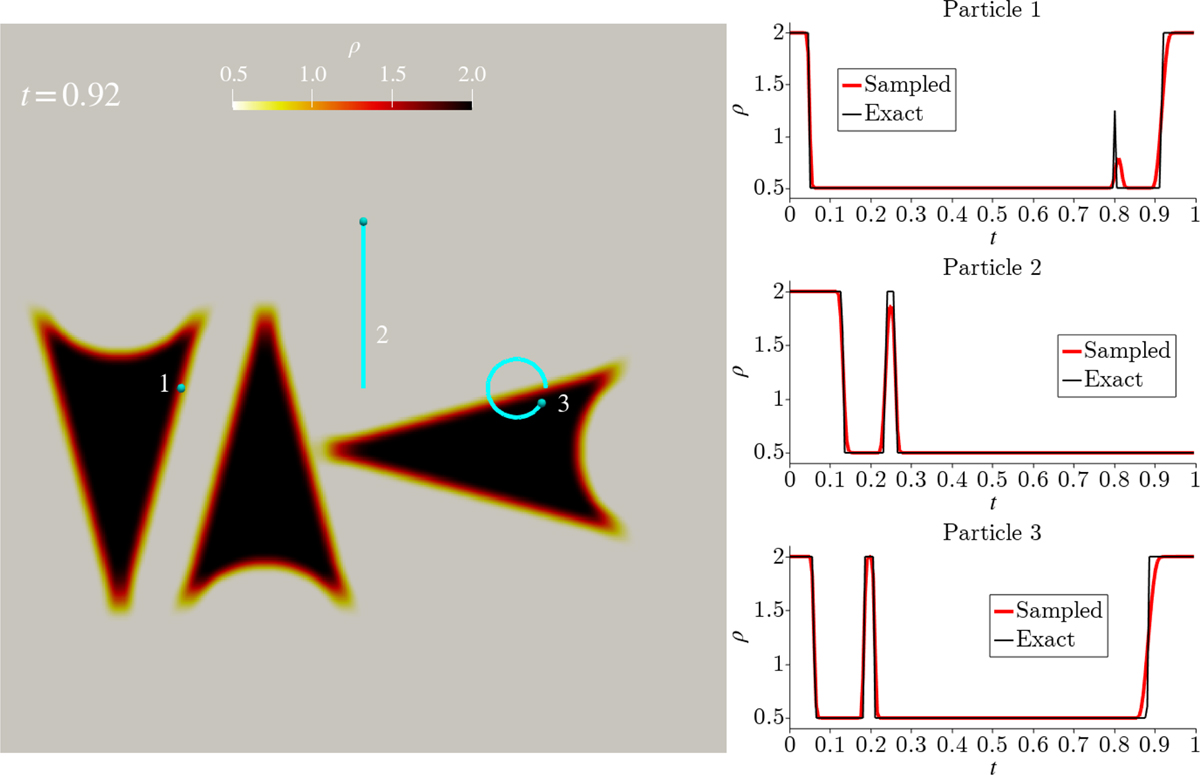

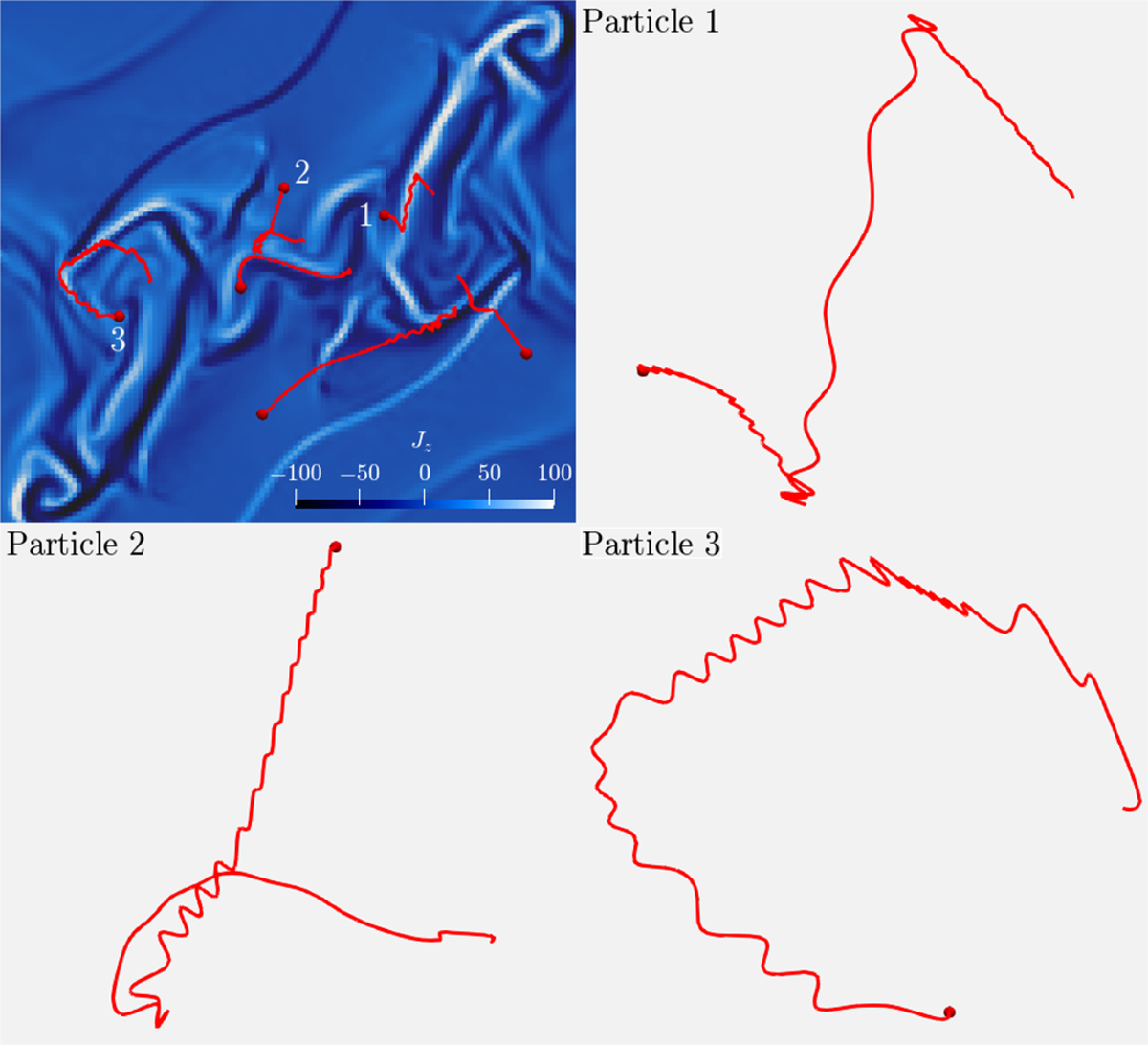

Results. A particle module as well as a generic field line tracing module, fully compatible with the hierarchical meshes, can be used to do anything from sampling information at prescribed locations, to following the dynamics of charged particles and realizing fully two-way coupled simulations between MHD setups and field-aligned nonthermal processes. We provide reproducible, fully demonstrated tests of all code functionalities.

Conclusions. While highlighting the latest additions and various technical aspects (e.g., reading in datacubes for initial or boundary conditions), our open-source strategy welcomes any further code usage, contribution, or spin-off development.

Key words: hydrodynamics / magnetohydrodynamics (MHD) / methods: numerical / Sun: corona

Movies associated to Figs. 1, 3–5, 8, 9, 11, 19, 20 are available at https://www.aanda.org

© The Authors 2023

Open Access article, published by EDP Sciences, under the terms of the Creative Commons Attribution License (https://creativecommons.org/licenses/by/4.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

This article is published in open access under the Subscribe to Open model. Subscribe to A&A to support open access publication.

1 Introduction

Adaptive mesh refinement (AMR) is currently routinely available in many open-source, community-driven software efforts. The challenges associated with shock-dominated hydrodynamic (HD) simulations on hierarchically refined grids were already identified in the pioneering work by Berger & Colella (1989) and have since been carried over to generic frameworks targeting the handling of multiple systems of partial differential equations (PDEs). One such framework is the PARAMESH (MacNeice et al. 2000) package, which offers support for parallelized AMR on logically Cartesian meshes. Codes that inherited the PARAMESH AMR flexibility include the FLASH code, which started as pure hydro-AMR software for astrophysical applications (Fryxell et al. 2000). FLASH has since been used in countless studies, and a recent example includes its favorable comparison with an independent simulation study (Orban et al. 2022) that focused on modeling the challenging radiative-hydro behavior of a laboratory, laser-produced jet. PARAMESH has also been used in space-weather-related simulations in 3D ideal magnetohydrodynamics (MHD; Feng et al. 2012). For space weather applications, a similarly noteworthy forecasting framework employing AMR is discussed in Narechania et al. (2021), where Sun-to-Earth solar wind simulations in ideal MHD are validated. Another AMR package in active development is the CHOMBO library¹, and this is how the PLUTO code (Mignone et al. 2012) inherits AMR functionality. Recent PLUTO additions showcase how dust particles can be handled using a hybrid particle-gas treatment (Mignone et al. 2019) and detail how novel nonlocal thermal equilibrium radiation hydro is performing (Colombo et al. 2019).

Various public-domain codes employ a native AMR implementation, such as the ENZO code (Bryan et al. 2014) or the RAMSES code, which started as an AMR-cosmological HD code (Teyssier 2002). In Astrobear (Cunningham et al. 2009), which is capable of using AMR on MHD simulations using constrained transport on the induction equation, the AMR functionality is known as the BEARCLAW package. Radiative MHD functionality for Astrobear, with a cooling function extending below 10000 K, was demonstrated in Hansen et al. (2018), who studied magnetized radiative shock waves. The AMR-MHD possibilities of NIRVANA have also been successfully increased (Ziegler 2005, 2008), and it has more recently added a chemistry-cooling module, described in Ziegler (2018). Another AMR-MHD code that pioneered the field was introduced as the BATS-R-US code (Powell et al. 1999), which is currently the main solver engine used in the space weather modeling framework described in Tóth et al. (2012). Its AMR functionality has been implemented in the block-adaptive-tree library (BATL), a Fortran-AMR implementation. This library shares various algorithmic details with the AMR implementation in MPI-AMRVAC, described in Keppens et al. (2012), whose 3.0 update forms the topic of this paper.

Meanwhile, various coding efforts anticipate the challenges posed by modern exascale high performance computing systems, such as that realized by the task-based parallelism now available in the Athena++ (Stone et al. 2020) effort. This code is in active use and development, with, for example, a recently added gas-dust module (Huang & Bai 2022) that is similar to the gas-dust functionality available for MPI-AMRVAC (Porth et al. 2014). This paper documents the code’s novel options for implicit-explicit (IMEX) handling of various PDE systems and demonstrates its use for gas-dust coupling. GAMER-2, as presented in Schive et al. (2018), is yet another community effort that offers AMR and many physics modules, where graphics processing unit (GPU) acceleration in addition to hybrid OpenMP/MPI allows effective resolutions on the order of 10 000^3. Even more visionary efforts in terms of adaptive simulations, where multiple physics modules may also be run concurrently on adaptive grid hierarchies, include the DISPATCH (Nordlund et al. 2018) and PATCHWORK (Shiokawa et al. 2018) frameworks. This paper serves to provide an updated account of the MPI-AMRVAC functionality. Future directions and potential links to ongoing new developments are provided in our closing discussion.

2 Open-source strategy with MPI-AMRVAC

With MPI-AMRVAC, we provide an open-source framework written in Fortran where parallelization is achieved by a (possibly hybrid OpenMP-) MPI implementation, where the block adaptive refinement strategy has evolved to the standard block-based quadtree-octree (2D-3D) organization. While originally used to evaluate efficiency gains affordable through AMR for multidimensional HD and MHD (Keppens et al. 2003), later applications focused on special relativistic HD and MHD settings (van der Holst et al. 2008; Keppens et al. 2012). Currently, the GitHub source version² is deliberately handling Newtonian dynamics throughout, and we refer to its MPI-AMRVAC 1.0 version as documented in Porth et al. (2014), while an update to MPI-AMRVAC 2.0 is provided in Xia et al. (2018). A more recent guideline on the code usability to solve generic PDE systems (including reaction-diffusion models) is found in Keppens et al. (2021). Since MPI-AMRVAC 2.0, we have a modern library organization (using the code for 1D, 2D or 3D applications), have a growing number of automated regression tests in place, and provide a large number of tests or actual applications from published work under, for example, the tests/hd subfolder for all simulations using the hydro module src/hd. This ensures full compliance with all modern requirements on data reproducibility and data sharing.

Our open-source strategy has already led to various noteworthy spin-offs, where, for example, the AMR framework and its overall code organization were inherited to create completely new functionality: the Black Hole Accretion Code (BHAC³) from Porth et al. (2017; and its extensions; see, e.g., Bacchini et al. 2019; Olivares et al. 2019; Weih et al. 2020) realizes a modern general-relativistic MHD (GR-MHD) code, which was used in the GR-MHD code comparison project from Porth et al. (2019). In Ripperda et al. (2019a) the GR-MHD code BHAC was extended to handle GR-resistive MHD (GR-RMHD) where IMEX strategies handled stiff resistive source terms. We here document how various IMEX strategies can be used in Newtonian settings for MPI-AMRVAC 3.0. The hybrid OpenMP-MPI parallelization strategy was optimized for BHAC in Cielo et al. (2022), and we inherited much of this functionality within MPI-AMRVAC 3.0. Other, completely independent GR-MHD software efforts that derived from earlier MPI-AMRVAC variants include GR-AMRVAC by Meliani et al. (2016), the Gmunu code introduced in Cheong et al. (2021, 2022), or the NOVAs effort presented in Varniere et al. (2022).

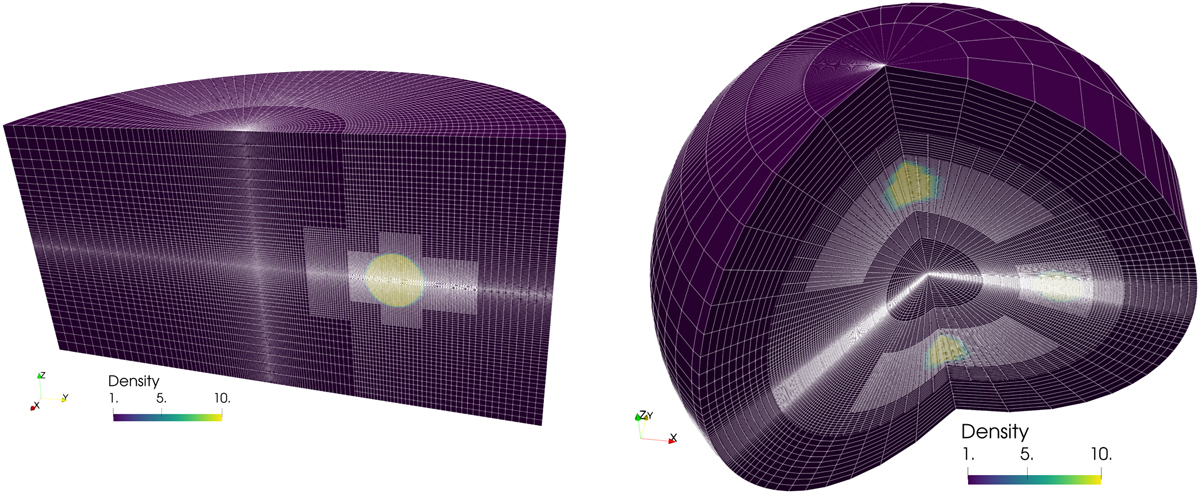

The code is also used in the most recent update to the space weather modeling effort EUHFORIA⁴, introduced in Pomoell & Poedts (2018). In the ICARUS⁵ variant presented by Verbeke et al. (2022), the most time-consuming aspect of the prediction pipeline is the 3D ideal MHD solver that uses extrapolated magnetogram data for solar coronal activity at 0.1 AU, to then advance the MHD equations till 2 AU, covering all 360° longitudes, within a ±60° latitude band. This represents a typical use-case of MPI-AMRVAC functionality, where the user can choose a preferred flux scheme, the limiters, the many ways to automatically (de)refine on weighted, user-chosen (derived) plasma quantities, while adopting the radial grid stretching introduced in Xia et al. (2018) in spherical coordinates. In what follows, we provide an overview of current MPI-AMRVAC 3.0 functionality that may be useful for future users, or for further spin-off developments.

3 Available PDE systems

The various PDE systems available in MPI-AMRVAC 3.0 are listed in Table 1. These cover a fair variety of PDE types (elliptic, parabolic, but with an emphasis toward hyperbolic PDEs), and it is noteworthy that almost all modules can be exploited in 1D to 3D setups. They are all fully compatible with AMR and can be combined with modules that can meaningfully be shared between the many PDE systems. Examples of such shared modules include

- the particle module in src/particle, which we briefly discuss in Sect. 7.1,

- the streamline and/or field line tracing module in src/modules/mod_trace_field.t as demonstrated in Sect. 7.2,

- additional physics in the form of source terms for the governing equations, such as src/physics/mod_radiative_cooling.t to handle optically thin radiative cooling effects (see also Sect. 4.1.3), or src/physics/mod_thermal_conduction.t for thermal conduction effects, src/physics/mod_viscosity.t for viscous problems.

Table 1 provides references related to module usage, while some general guidelines for adding new modules can be found in Keppens et al. (2021). These modules share the code-parallelism, the grid-adaptive capacities and the various time-stepping strategies, for example the IMEX schemes mentioned below in Sect. 5. In the next sections, we highlight novel additions to the framework, with an emphasis on multidimensional (M)HD settings. Adding a physics module to our open-source effort can follow the instructions in doc/addmodule.md and the info in doc/contributing.md to ensure that auto-testing is enforced. The code’s documentation has two components: (1) the markup documents collected in the doc folder, which appear as html files on the code website⁶; and (2) the inline source code documentation, which gets processed by Doxygen⁷ to deliver full dependency trees and documented online source code.

Table 1 Equation sets available in MPI-AMRVAC 3.0.

4 Schemes and limiters for HD and MHD

Most MPI-AMRVAC applications employ a conservative finite volume type discretization, used in each sub-step of a multistage time-stepping scheme. This finite volume treatment, combined with suitable (e.g., doubly periodic or closed box) boundary conditions ensures conservation properties of mass, momentum and energy as demanded in pure HD or ideal MHD runs. Available explicit time-stepping schemes include (1) a one-step forward Euler, (2) two-step variants such as predictor-corrector (midpoint) and trapezoidal (Heun) schemes, and (3) higher-order, multistep schemes. Our default three-, four- and five-step time integration schemes fall into the strong stability preserving (SSP) Runge-Kutta schemes (Gottlieb 2005), indicated as SSPRK(s, p), which involve s stages while reaching temporal order p. In that sense, the two-step Heun variant is SSPRK(2,2). In Porth et al. (2014), we provided all details of the three-step SSPRK(3,3), four-step SSPRK(4,3) and the five-step SSPRK(5,4) schemes, ensuring third-, third-, and fourth-order temporal accuracy, respectively. Tables 2 and 3 provide an overview of the choices in time integrators as well as the available shock-capturing spatial discretization schemes for the HD and MHD systems. The IMEX schemes are further discussed in Sect. 5. We note that Porth et al. (2014) emphasized that, instead of the standard finite volume approach, MPI-AMRVAC also allows for high-order conservative finite difference strategies (in the mod_finite_difference.t module), but these will not be considered explicitly here. Having many choices for spatiotemporal discretization strategies allows one to select optimal combinations depending on available computation resources, or on robustness aspects when handling extreme differences in (magneto-)thermodynamical properties. The code allows a higher than second-order accuracy to be achieved on smooth problems. In Porth et al. 
(2014), where MPI-AMRVAC 1.0 was presented, we reported on setups that formally achieved up to fourth-order accuracy in space and time. Figure 7 in that paper quantifies this for a 3D circularly polarized Alfvén wave test, while in the present paper, Fig. 10 shows third-order accuracy on a 1.75D MHD problem involving ambipolar diffusion. The combined spatio-temporal order of accuracy reachable will very much depend on the problem at hand (discontinuity dominated or not), and on the chosen combination of flux schemes, reconstructions, and source term treatments.
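
As an illustration of the SSPRK(s, p) notation, the three-stage, third-order member can be sketched in its commonly quoted Shu-Osher form. This is a minimal sketch on a scalar ordinary differential equation; the function names are hypothetical and it is not the Fortran implementation in mod_advance.t.

```python
import math

def ssprk33_step(rhs, u, dt):
    """One SSPRK(3,3) step: three forward-Euler stages combined convexly."""
    u1 = u + dt * rhs(u)
    u2 = 0.75 * u + 0.25 * (u1 + dt * rhs(u1))
    return u / 3.0 + 2.0 / 3.0 * (u2 + dt * rhs(u2))

def integrate(rhs, u0, t_end, nsteps):
    """Advance u' = rhs(u) from t = 0 to t_end with a fixed step."""
    dt = t_end / nsteps
    u = u0
    for _ in range(nsteps):
        u = ssprk33_step(rhs, u, dt)
    return u

def observed_order(nsteps=20):
    """Estimate the temporal order on u' = -u by halving the step size."""
    rhs = lambda u: -u
    exact = math.exp(-1.0)
    err_coarse = abs(integrate(rhs, 1.0, 1.0, nsteps) - exact)
    err_fine = abs(integrate(rhs, 1.0, 1.0, 2 * nsteps) - exact)
    return math.log2(err_coarse / err_fine)
```

Halving the step should reduce the error by roughly a factor of eight on this smooth test problem, which is what temporal order p = 3 means in practice.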

The finite-volume spatial discretization approach in each sub-step computes fluxes at cell volume interfaces, updating conservative variables stored as cell-centered quantities representing volume averages; however, when using constrained transport for MHD, we also have cell-face magnetic field variables. We list in Table 3 the most common flux scheme choices for the HD and MHD systems. In the process where fluxes are evaluated at cell edges, a limited reconstruction strategy is used – usually on the primitive variables – where two sets of cell interface values are computed for each interface: one employing a reconstruction involving mostly left, and one involving mostly right cell neighbors. In what follows, we demonstrate some of the large variety of higher-order reconstruction strategies that have meanwhile been implemented in MPI-AMRVAC. For explicit time integration schemes applied to hyperbolic conservation laws, temporal and spatial steps are intricately linked by the Courant-Friedrichs-Lewy (CFL) stability constraint. Therefore, combining high-order time-stepping and higher-order spatial reconstructions is clearly of interest to resolve subtle details. Thereby, different flux scheme and reconstruction choices may be used on different AMR levels. We note that our AMR implementation is such that the maximum total number of cells that an AMR run can achieve is exactly equal to the maximum effective grid resolution, if the refinement criteria enforce the use of the finest level grid on the entire domain. Even when a transition to domain-filling turbulence occurs – where triggering finest level grids all over is likely to happen, a gain in using AMR versus a fixed resolution grid can be important, by cost-effectively computing a transient phase. In Keppens et al. (2003), we quantified these gains for typical HD and MHD problems, and reported on efficiency gains by factors of 5 to 20, with limited overhead by AMR. 
Timings related to AMR overhead, boundary conditions, I/O, and actual computing are reported by MPI-AMRVAC in the standard output channel. For the tests discussed below, this efficiency aspect can hence be verified by rerunning the demo setups provided.

Table 2 Time integration methods in MPI-AMRVAC 3.0, as implemented in mod_advance.t.

Table 3 Choices for the numerical flux functions in MPI-AMRVAC 3.0, as implemented in mod_finite_volume.t.

4.1 Hydrodynamic tests and applications

The three sections below contain a 1D Euler test case highlighting differences due to the employed reconstructions (Sect. 4.1.1), a 2D hydro test without and with gas-dust coupling (Sect. 4.1.2), and a 2D hydro test where optically thin radiative losses drive a runaway condensation and fragmentation (Sect. 4.1.3). We note that the hydrodynamic hd module of MPI-AMRVAC could also be used without solving explicitly for the total (i.e., internal plus kinetic) energy density evolution, in which case an isothermal or polytropic closure is assumed. Physical effects that can be activated easily include solving the equations in a rotating frame, adding viscosity, external gravity, thermal conduction and optically thin radiative losses.

4.1.1 TVD versus WENO reconstructions

Many of the implemented reconstruction and/or limiter choices in MPI-AMRVAC are briefly discussed in its online documentation⁸. These are used when doing reconstructions on (usually primitive) variables from cell center to cell edge values, where their reconstructed values quantify local fluxes (on both sides of the cell face). They mostly differ in whether or not they ensure (1) the total variation diminishing (TVD) property on scalar hyperbolic problems or rather build on the essentially non-oscillatory (ENO) paradigm, (2) encode symmetry preservation, and (3) achieve a certain theoretical order of accuracy (second-order or higher possibilities). Various reconstructions with limiters are designed purely for uniform grids, others are compatible with nonuniform grid stretching. In the mod_limiter.t module, one currently distinguishes many types as given in Table 4. The choice of limiter impacts the stencil of the method, and hence the number of ghost cells used for each grid block in the AMR structure, as listed in Table 4. In MPI-AMRVAC, the limiter (as well as the discretization scheme) can differ between AMR levels, where one may opt for a more diffusive (and usually more robust) variant at the highest AMR levels.
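
The idea of a limited cell-center-to-cell-face reconstruction can be sketched with the classic minmod slope limiter. It is used here purely as an illustration and is not one of the specific reconstructions compared in this section; the function names are hypothetical, and the outermost cells of the input list play the role of ghost cells.

```python
def minmod(a, b):
    """Return the smaller-magnitude slope, or zero at a local extremum."""
    if a * b <= 0.0:
        return 0.0
    return a if abs(a) < abs(b) else b

def reconstruct_faces(w):
    """Left and right states at faces i+1/2 for cells i = 1 .. len(w)-3.

    Each cell gets a limited slope from its two neighbors; the left state
    extrapolates from cell i, the right state from cell i+1.
    """
    n = len(w)
    slopes = [0.0] * n
    for i in range(1, n - 1):
        slopes[i] = minmod(w[i] - w[i - 1], w[i + 1] - w[i])
    w_left = [w[i] + 0.5 * slopes[i] for i in range(1, n - 2)]
    w_right = [w[i + 1] - 0.5 * slopes[i + 1] for i in range(1, n - 2)]
    return w_left, w_right
```

On linear data the two face states coincide, while across a jump the slopes are switched off so that no new extrema appear. Wider stencils, as needed by the higher-order variants, require correspondingly more ghost cells per block.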

Notes. The formal order of accuracy (on smooth solutions), the needed number of ghost cells, and suitable references are indicated as well.

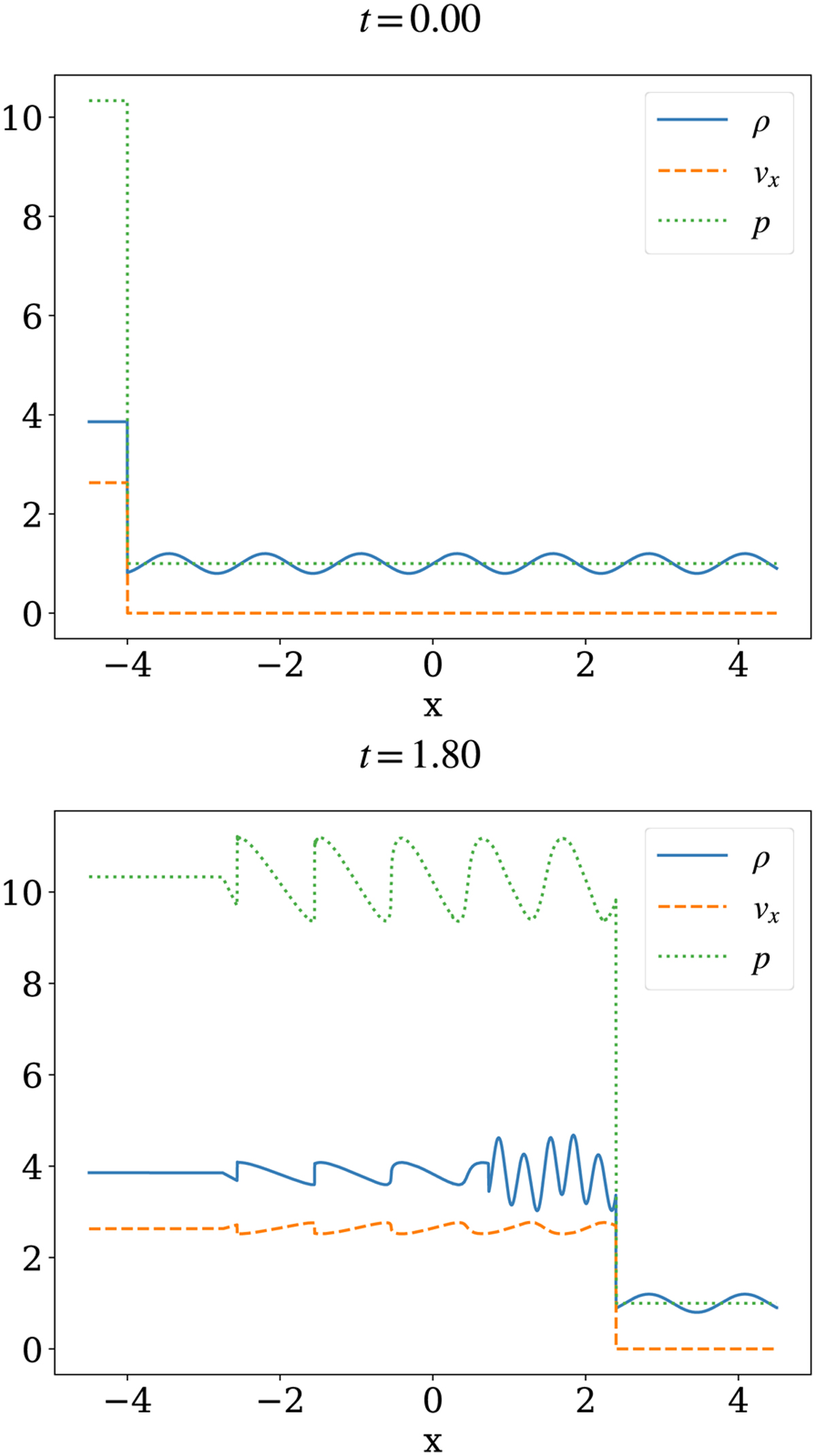

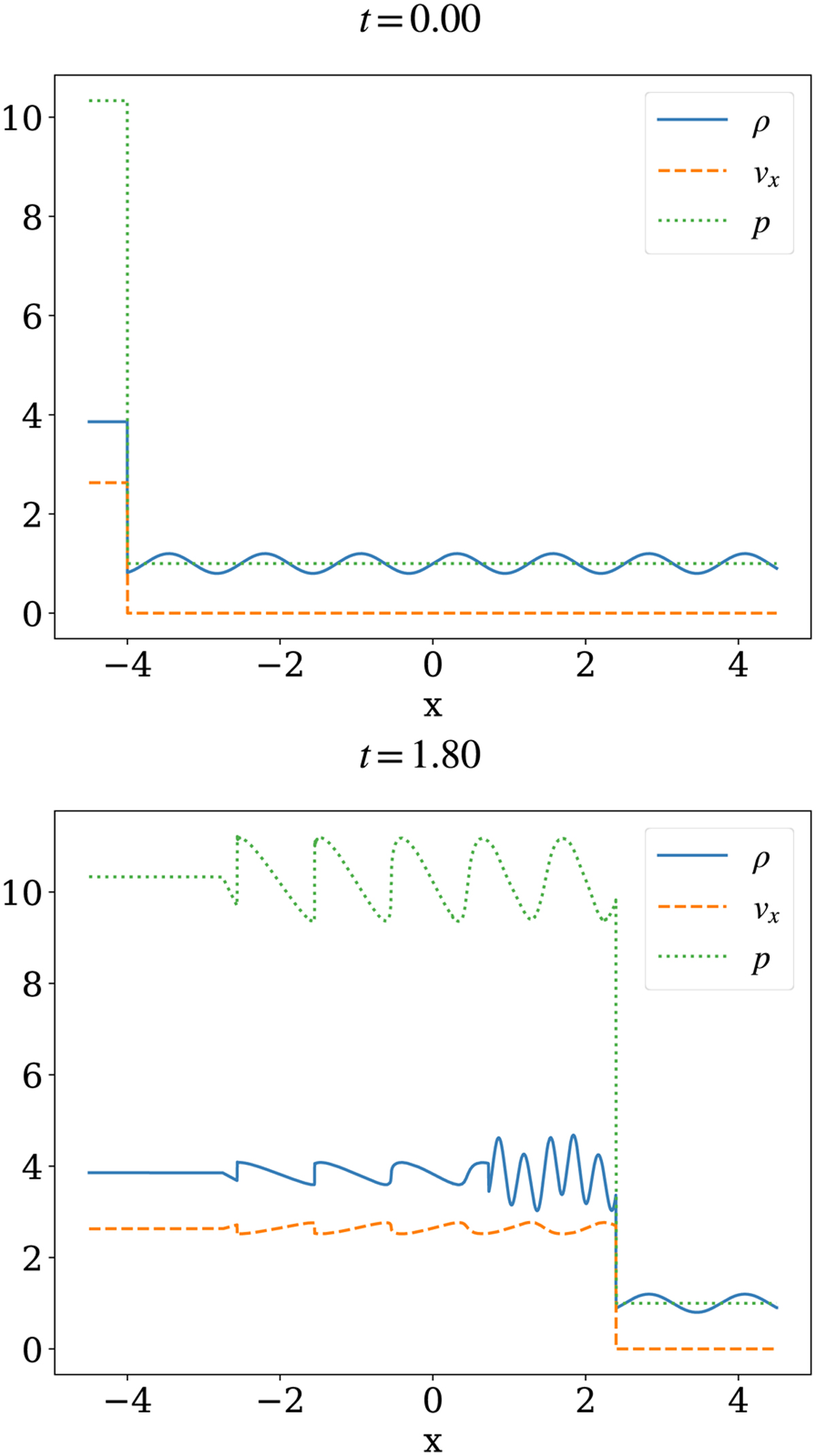

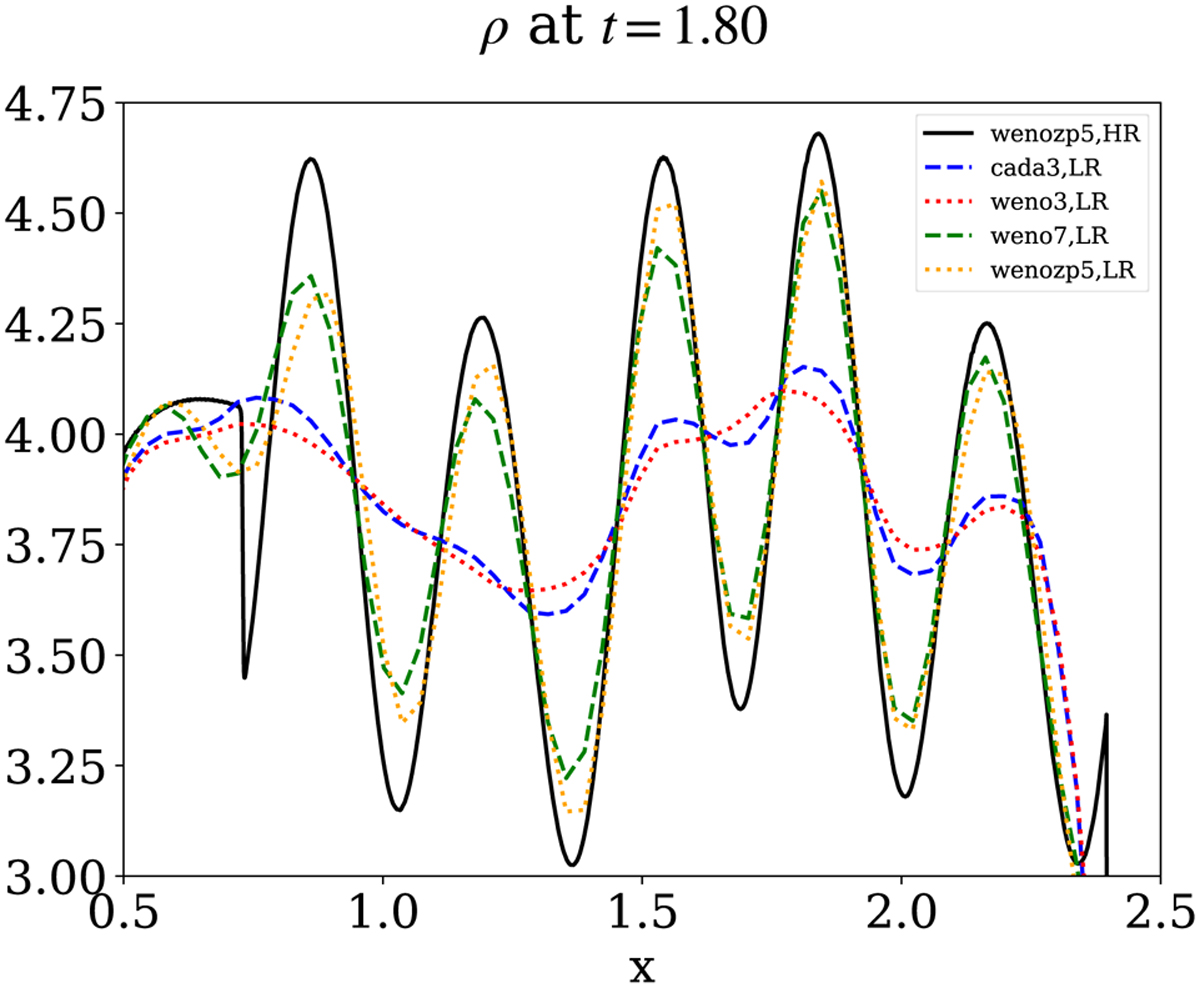

The option to use higher-order weighted ENO (WENO) reconstruction variants has been added recently, and here we show their higher-order advantage using the standard 1D HD test from Shu & Osher (1989). This was run on a 1D domain comprised between x = −4.5 and x = 4.5, and since it is 1D only, we compared uniform grid high resolution (25600 points) with low resolution (256 points) equivalents. This “low resolution” is inspired by actual full 3D setups, where it is typical to use several hundreds of grid cells per dimension. The initial condition in density, pressure and velocity is shown in Fig. 1, along with the final solution at t = 1.8. A shock initially situated at x = −4 impacts a sinusoidally varying density field with left and right states as in

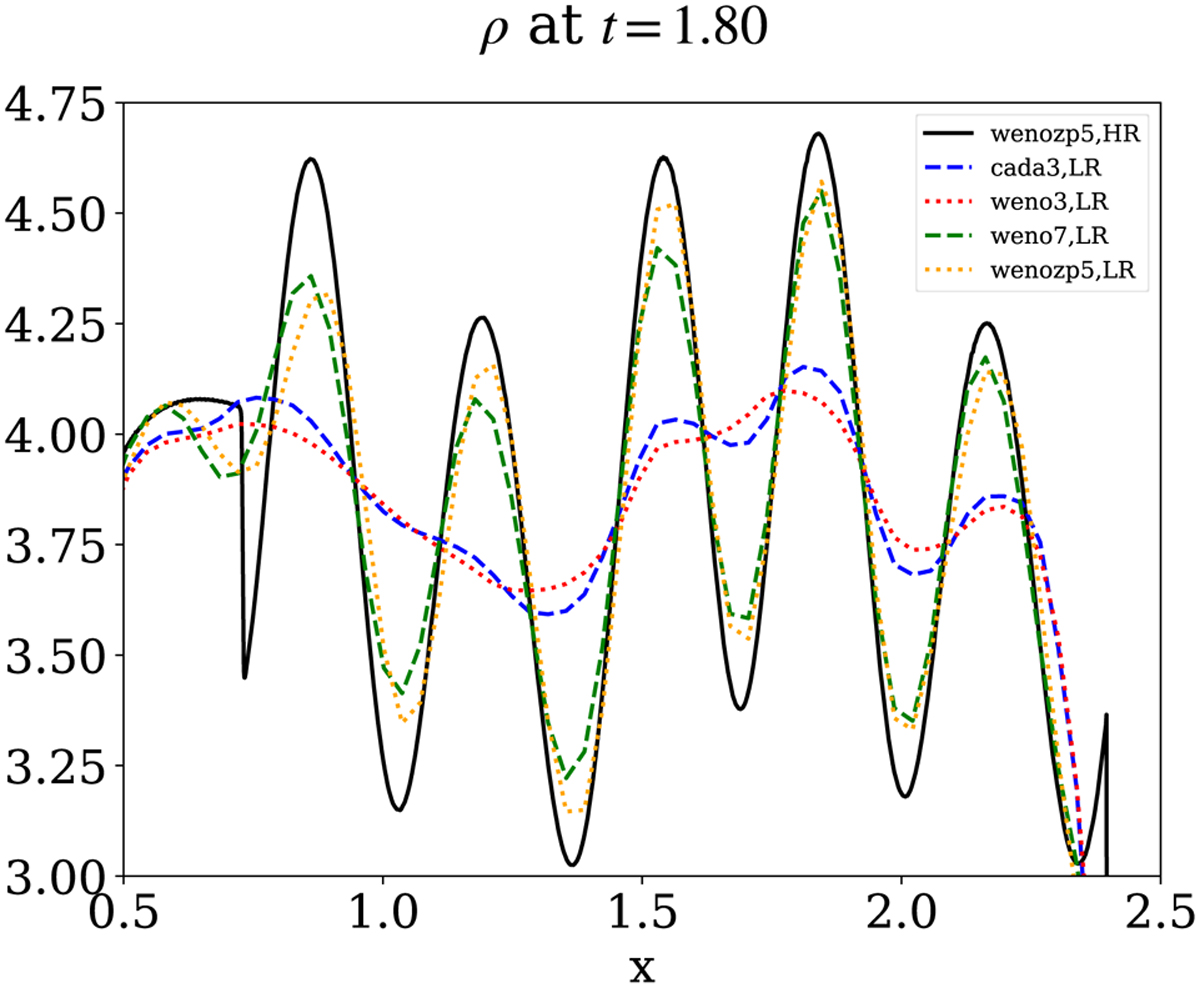

We used a Harten, Lax and van Leer (HLL) solver (Harten et al. 1983) in a three-step time integration, had zero gradient boundary conditions, and set the adiabatic index to γ = 1.4. In Fig. 2 we zoom in on the compressed density variation that trails the rightward moving shock, where the fifth-order “wenozp5” limiter from Acker et al. (2016) is exploited in both high and low resolution. For comparison, low resolution third-order “cada3” (Čada & Torrilhon 2009), third-order “weno3,” and seventh-order “weno7” (Balsara & Shu 2000) results show the expected behavior where higher-order variants improve the numerical representation of the shock-compressed wave train⁹.
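
The ingredients of this test can be sketched as follows. The left and right states are assumed to be the standard Shu-Osher values (a Mach 3 shock running into a density field 1 + 0.2 sin(5x)), and the HLL flux uses simple minimum and maximum characteristic speeds as wave-speed bounds. Both choices are assumptions made for illustration, and all function names are hypothetical.

```python
import math

GAMMA = 1.4  # adiabatic index used in the test

def shu_osher_state(x, x_shock=-4.0):
    """Primitive state (rho, v, p); standard Shu-Osher values, assumed here."""
    if x < x_shock:
        return (3.857143, 2.629369, 10.33333)
    return (1.0 + 0.2 * math.sin(5.0 * x), 0.0, 1.0)

def to_conserved(rho, v, p):
    """Density, momentum density, total energy density."""
    return (rho, rho * v, p / (GAMMA - 1.0) + 0.5 * rho * v * v)

def euler_flux(rho, v, p):
    """Physical flux of the 1D Euler equations."""
    e = p / (GAMMA - 1.0) + 0.5 * rho * v * v
    return (rho * v, rho * v * v + p, (e + p) * v)

def hll_flux(left, right):
    """HLL flux from primitive left/right interface states."""
    (rl, vl, pl), (rr, vr, pr) = left, right
    cl = math.sqrt(GAMMA * pl / rl)
    cr = math.sqrt(GAMMA * pr / rr)
    s_min = min(vl - cl, vr - cr)
    s_max = max(vl + cl, vr + cr)
    fl, fr = euler_flux(*left), euler_flux(*right)
    if s_min >= 0.0:
        return fl
    if s_max <= 0.0:
        return fr
    ul, ur = to_conserved(*left), to_conserved(*right)
    return tuple(
        (s_max * a - s_min * b + s_min * s_max * (d - c)) / (s_max - s_min)
        for a, b, c, d in zip(fl, fr, ul, ur)
    )
```

The left and right arguments of hll_flux are exactly the two reconstructed interface values discussed above, so the choice of limiter enters the scheme only through these inputs.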

Table 4 Reconstructions with limiter choices in MPI-AMRVAC 3.0, as typically used in the cell-center-to-cell-face reconstructions.

4.1.2 2D Kelvin-Helmholtz: Gas and gas-dust coupling

The Kelvin-Helmholtz (KH) instability is ubiquitous in fluids, gases, and plasmas, and can cause intricate mixing. We here adopt a setup¹⁰ used in a recent study of KH-associated ion-neutral decouplings by Hillier (2019), where a reference high resolution HD run was introduced as well. We emphasize the effects of limiters in multidimensional hydro studies, by running the same setup twice, switching only the limiter exploited. We also demonstrate that MPI-AMRVAC can equally study the same processes in gas-dust mixtures, which is relevant, for example, in protoplanetary disk contexts.

2D KH and limiters

The domain (x, y) ∈ [−1.5, 1.5] × [−0.75, 0.75] uses a base resolution of 128×64 with 6 levels of refinement, and hence we achieve 4096×2048 effective resolution. This should be compared to the uniform grids used in Hillier (2019), usually at 2048×1024, but with one extreme run at 16384×8192. Their Fig. 1 shows the density field at a very late time (t = 50) in the evolution where multiple mergers and coalescence events between adjacent vortices led to large-scale vortices of half the box width, accompanied by clearly turbulent smaller-scale structures. The setup uses a sharp interface at y = 0, with

where ΔV = 0.2, together with a uniform gas pressure p0 = 1/γ where γ = 5/3. The vertical velocity is seeded by white noise with amplitude 10^−3. However, the two runs discussed here use the exact same initial condition: the t = 0 data are first generated using a noise realization and this is used for both simulations. This demonstrates at the same time the code flexibility to restart from previously generated data files, needed to, for example, resume a run from a chosen snapshot, which can even be done on a different platform, using a different compiler. We note that the setup here uses a discontinuous interface at t = 0, which is known to influence and preselect grid-scale fine-structure in the overall nonlinear simulations. Lecoanet et al. (2016) discussed how smooth initial variations can lead to reproducible KH behavior (including viscosity), allowing convergence aspects to be quantified. This is not possible with the current setup, but one can adjust this setup to the Lecoanet et al. (2016) configuration and activate viscosity source terms.
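
The effective resolution quoted for this setup follows from simple arithmetic, assuming a refinement ratio of 2 per level and counting the base grid as level 1 (both consistent with the numbers given above). The helper name is hypothetical.

```python
def effective_resolution(base, levels, ratio=2):
    """Cells per dimension if the finest AMR level covered the whole domain."""
    return tuple(n * ratio ** (levels - 1) for n in base)

# Base grid 128 x 64 with 6 levels: each dimension is refined 2**5 = 32 times.
kh_resolution = effective_resolution((128, 64), 6)
```

For the KH setup this gives 4096 × 2048, which sits between the typical 2048 × 1024 and the extreme 16384 × 8192 uniform grids of the reference study.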

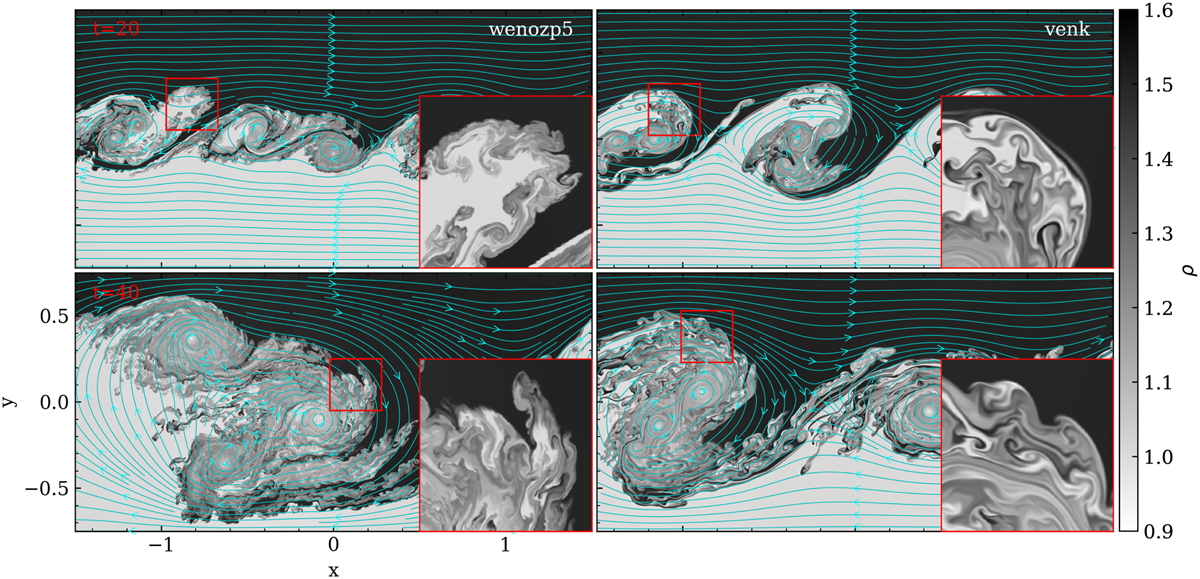

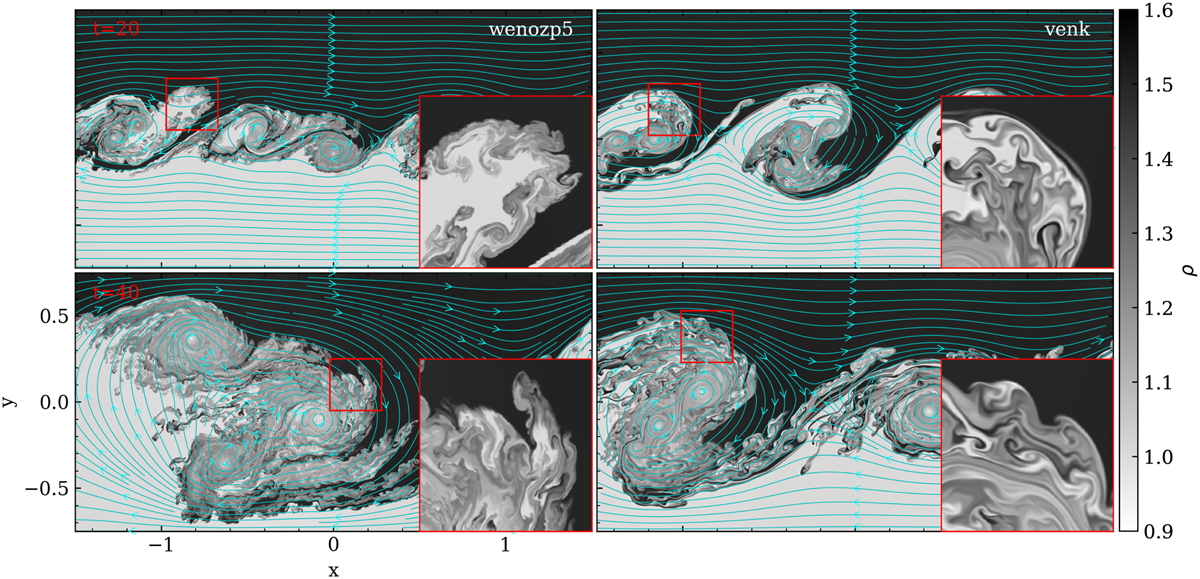

We use a three-step time integrator, with periodic sides and closed up/down boundaries (the latter ensured by (a)symmetry conditions). We use the HLLC scheme (see the review by Toro 2019), known to improve the baseline HLL scheme (Harten et al. 1983) in the numerical handling of density discontinuities. In Fig. 3, we contrast two runs at times t = 20, 40 that only differ in the limiter exploited, the left column again uses the wenozp5 limiter (Acker et al. 2016), while at right the Venkatakrishnan (Venkatakrishnan 1995) limiter is used, which is a popular limiter on unstructured meshes. While both runs start from the same t = 0 data, it is clear how the nonlinear processes at play in KH mixing ultimately lead to qualitatively similar, but quantitatively very different evolutions. The limiter is activated from the very beginning due to the sharp interface setup, and the simulation accumulates differences at each timestep. We note that the wenozp5 run (left panels) clearly shows much more pronounced finer-scale structure than the “venk” run (right panels). Since the setup is using a discontinuous initial condition, some of the fine-structure is not necessarily physical (Lecoanet et al. 2016). If statistical properties specific to the turbulent substructures are of interest, one should exploit the higher-order reconstructions, and perform multiple runs at varying effective resolution to fully appreciate physical versus numerical effects. We note that we did not (need to) include any hyper-diffusive terms or treatments here.

Fig. 1 1D Shu-Osher test. Shown are the density (solid blue line), velocity (dashed orange line), and pressure (dotted green line) for the initial time (top panel) and the final time (bottom panel). This high resolution numerical solution was obtained using the wenozp5 limiter. An animation is provided online.

Fig. 2 1D Shu-Osher test. Comparison at final time t = 1.8 between different types of limiters at low resolution (LR) to the reference high resolution (HR) using the wenozp5 limiter (solid black) is shown. We zoom in on the density variation for x-axis values between 0.5 and 2.5 and ρ-values between 3 and 4.75.

Gas-Dust KH evolutions

The HD module of MPI-AMRVAC provides the option to simulate drag-coupled gas-dust mixtures, introducing a user-chosen added number of dust species n_d that differ in their “particle” size. In fact, every dust species is treated as a pressureless fluid, adding its own continuity and momentum equation for density ρ_di and momentum ρ_di v_di, where the interaction with dust species i ∈ 1…n_d is typically proportional to the velocity difference (v − v_di), writing v for the gas velocity. This was demonstrated and used in various gas-dust applications (van Marle et al. 2011; Meheut et al. 2012; Hendrix & Keppens 2014; Porth et al. 2014; Hendrix et al. 2015, 2016). The governing equations as implemented are found in Porth et al. (2014), along with a suite of gas-dust test cases. We note that the dust species do not interact with each other; they only interact with the gas.

We here showcase a new algorithmic improvement specific to the gas-dust system: the possibility to handle the drag-collisional terms for the momentum equations through an implicit update. Thus far, all previous MPI-AMRVAC gas-dust simulations used an explicit treatment for the coupling, implying that the (sometimes very stringent and erratic) explicit stopping time criterion could slow down a gas-dust simulation dramatically. For Athena++, Huang & Bai (2022) recently demonstrated the advantage of implicit solution strategies allowing extremely short stopping time cases to be handled. In MPI-AMRVAC 3.0, we now provide an implicit update option for the collisional terms in the momentum equations:

where we denote the end result of any previous (explicit) substage with T, T_di. When the collisional terms are linear, that is, when the drag force is f_di = α_i ρ ρ_di (v_di − v) with a constant α_i, an analytic implicit update can be calculated as follows:

(4)

(5)

Although the above is exact for any number of dust species n_d when using proper expansions for d_k, n_k, and n_ik, in practice we implemented all terms up to the second order in Δt, implying that the expressions used are exact for up to two species (and approximate for higher numbers), where we have

where ∀i = 1..nd we have

while

Equations (3) can be written in a compact form, where the already explicitly updated variables T enter the implicit stage:

(9)

where

Following the point-implicit approach (see, e.g., Tóth et al. 2012), P(U^(n+1)) is linearized in time after the explicit update,

(11)

The elements of the Jacobian matrix ∂P/∂U contain in our case only elements of the form α_i ρ_di ρ. After the explicit update, the densities already have their final values at stage n + 1. Therefore, when α_i is constant, the linearization is actually exact, but when α_i also depends on the velocity, the implicit update might be less accurate.
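
For a single dust species with constant α, the implicit momentum update can be worked out by hand, which may help to see why no stopping-time restriction remains. Writing m = ρv and m_d = ρ_d v_d, the linear drag force reads α(ρ m_d − ρ_d m), and a backward-Euler stage starting from the explicitly updated momenta T and T_d is a 2×2 linear system whose solution is coded below. This is a sketch under those assumptions (one species, backward Euler, hypothetical function name); it is not the general n_d-species expression implemented in the code.

```python
def implicit_drag_update(T, Td, rho, rho_d, alpha, dt):
    """Backward-Euler drag update for gas and one dust species.

    Solves  m   = T  + dt*alpha*(rho*m_d - rho_d*m)
            m_d = Td - dt*alpha*(rho*m_d - rho_d*m)
    for the new momentum densities (m, m_d), with densities held fixed.
    """
    k = dt * alpha
    denom = 1.0 + k * (rho + rho_d)
    total = T + Td  # total momentum is conserved by the drag exchange
    m = (T + k * rho * total) / denom
    m_d = (Td + k * rho_d * total) / denom
    return m, m_d
```

The update is well behaved for any positive dt·α: total momentum is preserved exactly, and in the limit of very strong coupling both fluids relax to the common velocity (T + T_d)/(ρ + ρ_d), whereas an explicit update would need time steps of order 1/α.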

The update of the gas energy density (being the sum of internal energy density eint and kinetic energy density) due to the collisions is done in a similar way and includes the frictional heating term,

This is different from the previous implementation, which only considered the work done by the momentum collisional terms (see Eq. (21) in Porth et al. 2014), but this added frictional heating term is generally needed for energy conservation (Braginskii 1965). The implicit update strategy can then be exploited in any multistage IMEX scheme.

As a demonstration of its use, we now repeat the KH run from above with one added dust species, where the dust fluid represents a binned dust particle size of [5 (b^(−1/2) − a^(−1/2)) / (b^(−5/2) − a^(−5/2))]^(1/2) where a = 5 nm and b = 250 nm. We augment the initial condition for the gas with a dust velocity set identical to that of the gas by vx0d = vx0, but no velocity perturbation in the y-direction. The dust density is smaller than the gas density with a larger density contrast below and above the interface, setting ρ0d = Δρd for y > 0, ρ0d = 0.1 Δρd for y ≤ 0 where Δρd = 0.01. The time integrator used is a three-step ARS3 IMEX scheme, described in Sect. 5.
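
The representative size of the dust bin can be evaluated directly from the expression above. This is a minimal sketch; the function name is hypothetical, and the result carries the same length unit as a and b.

```python
def representative_size(a, b):
    """Evaluate [5 (b^(-1/2) - a^(-1/2)) / (b^(-5/2) - a^(-5/2))]^(1/2)."""
    numerator = b ** -0.5 - a ** -0.5
    denominator = b ** -2.5 - a ** -2.5
    return (5.0 * numerator / denominator) ** 0.5

# Bin edges from the text, in nanometers.
size_nm = representative_size(5.0, 250.0)
```

For a = 5 nm and b = 250 nm this evaluates to roughly 10 nm, so the bin is represented by a size much closer to its lower edge than to its upper edge.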

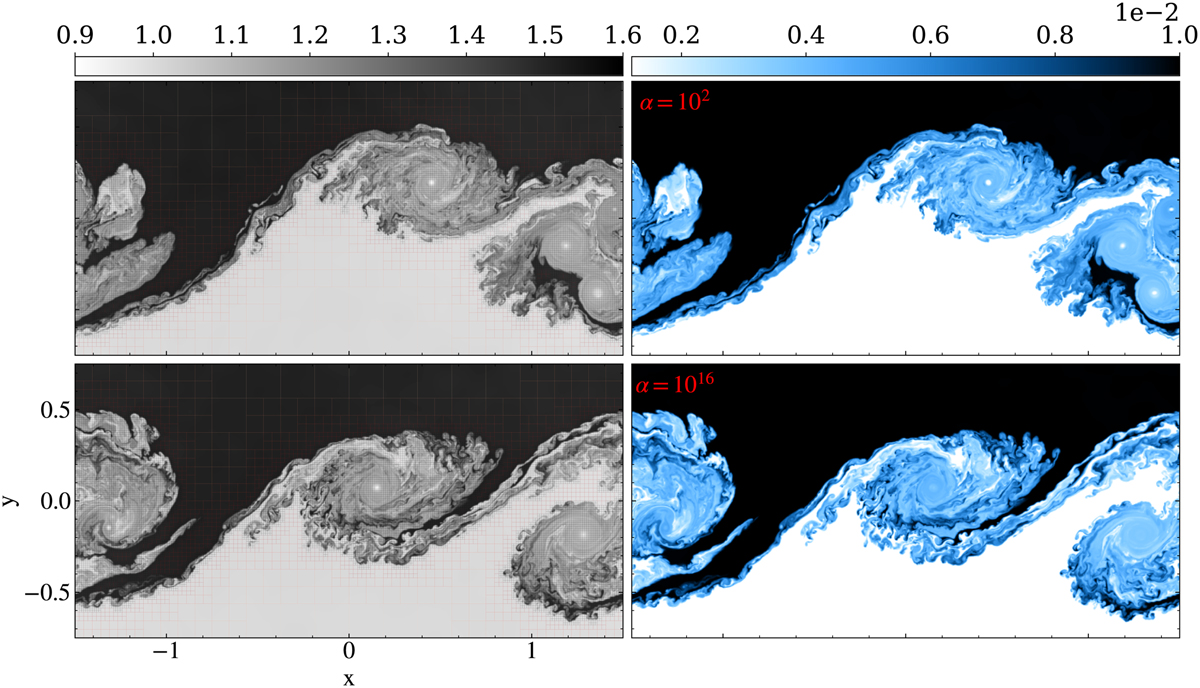

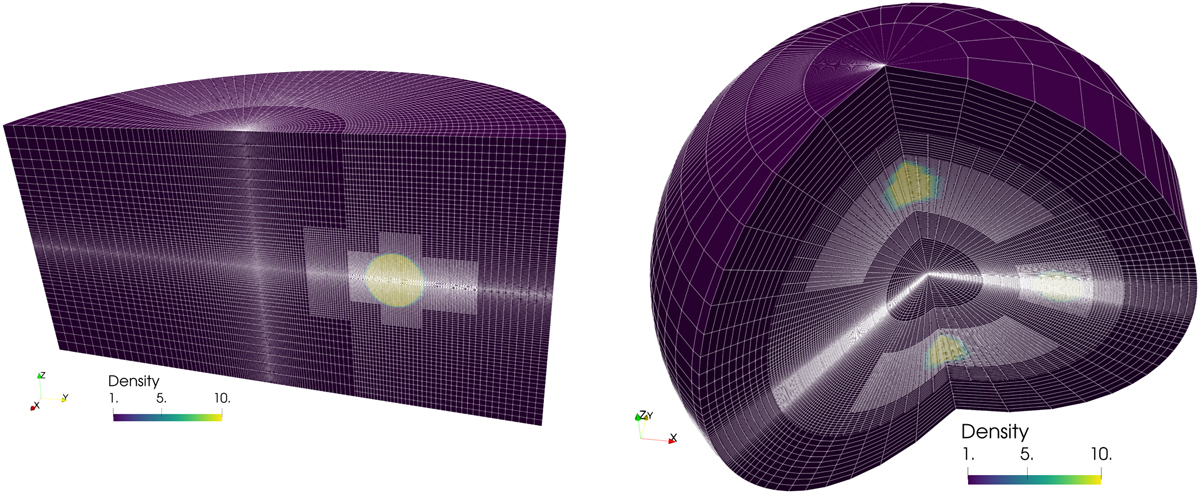

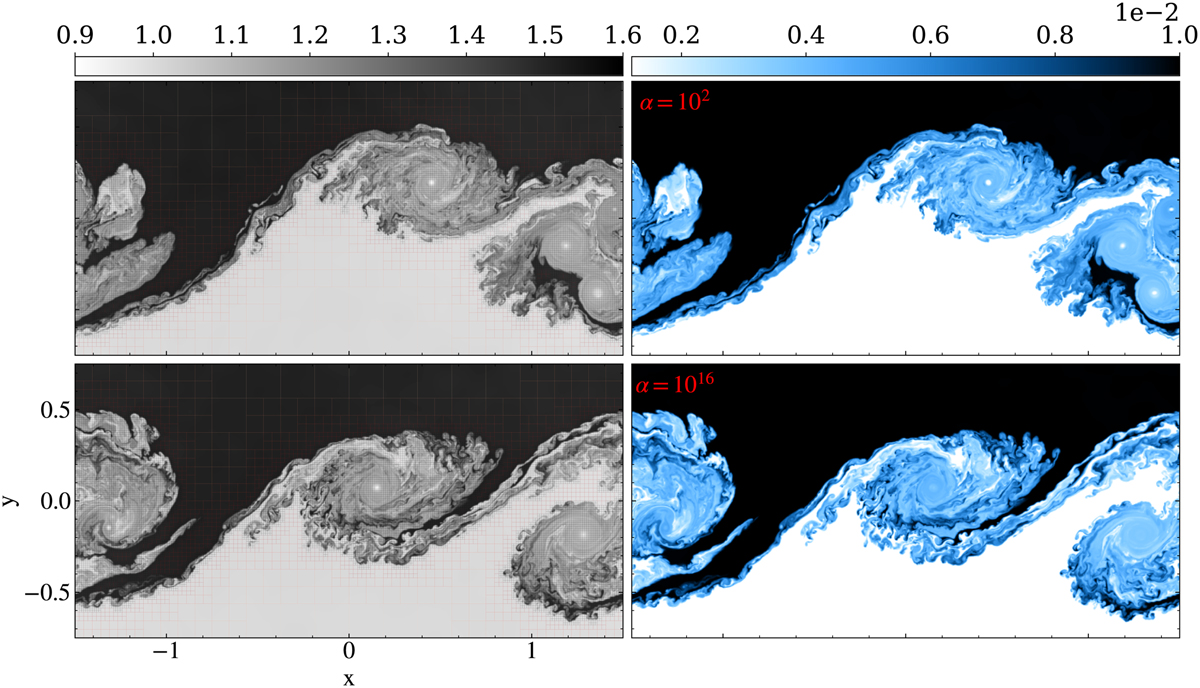

Results are shown in Fig. 4, for two different coupling regimes, which differ in the adopted coupling constant α, namely 10^2 and 10^16. The associated explicit stopping time would scale with α^−1, so larger α would imply very costly explicit-in-time simulations. Shown in Fig. 4 are the AMR grid structure in combination with the gas density variation at left (note that we here used different noise realizations at t = 0), as well as the single dust species density distribution at right, for t = 40. The density field for the dust shows similarly intricate fine-structure within the large-scale vortices that have evolved from multiple mergers. We used the same wenozp5 limiter as in the left panels of Fig. 3, and one may note how the gas dynamic vortex centers show clearly evacuated dust regions, consistent with the idealized KH gas-dust studies performed by Hendrix & Keppens (2014). The top versus bottom panels from Fig. 4 show that the AMR properly traces the regions of interest, the AMR criterion being based on density and temperature variables. The highly coupled case with α = 10^16 can be argued to show more fine structure, as the collisions might have an effect similar to the diffusion for the scales smaller than the collisional mean free path (Popescu Braileanu et al. 2021). We note that Huang & Bai (2022) used corresponding α factors of 10^0 to 10^8 on their 2D KH test case, and did not investigate the very far nonlinear KH evolution we address here.

Fig. 3 Purely HD simulations of a 2D KH shear layer. The two runs start from the same initial condition and only deviate due to the use of two different limiters in the center-to-face reconstructions: wenozp5 (left column) and venk (right column). We show density views at times t = 20 (top row) and t = 40 (bottom row). The flow streamlines plotted here are computed by MPI-AMRVAC with its internal field line tracing functionality through the AMR hierarchy, as explained in Sect. 7.2. Insets show zoomed in views of the density variations in the red boxes, as indicated. An animation is provided online.

Fig. 4 As in Fig. 3, but this time in a coupled gas-dust evolution, at time t = 40, with one species of dust experiencing linear drag. In the top row, $\alpha_{\rm drag} = 10^{2}$, and the bottom row shows a much stronger drag coupling, $\alpha_{\rm drag} = 10^{16}$. Left column: gas density. Right column: Dust density. The limiter used was wenozp5. An animation is provided online.

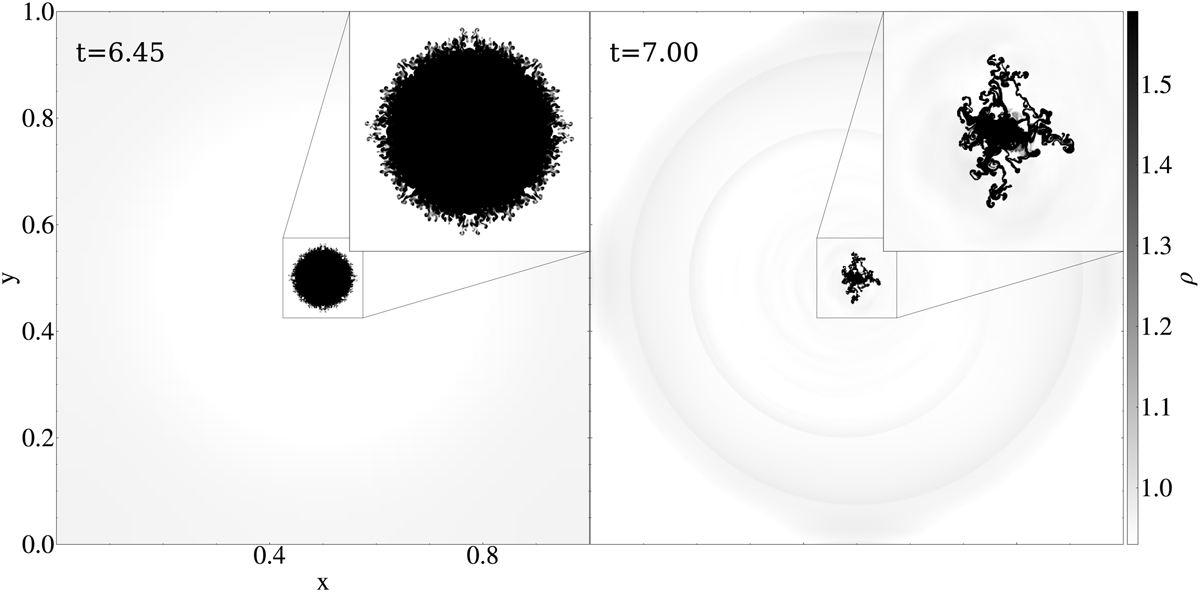

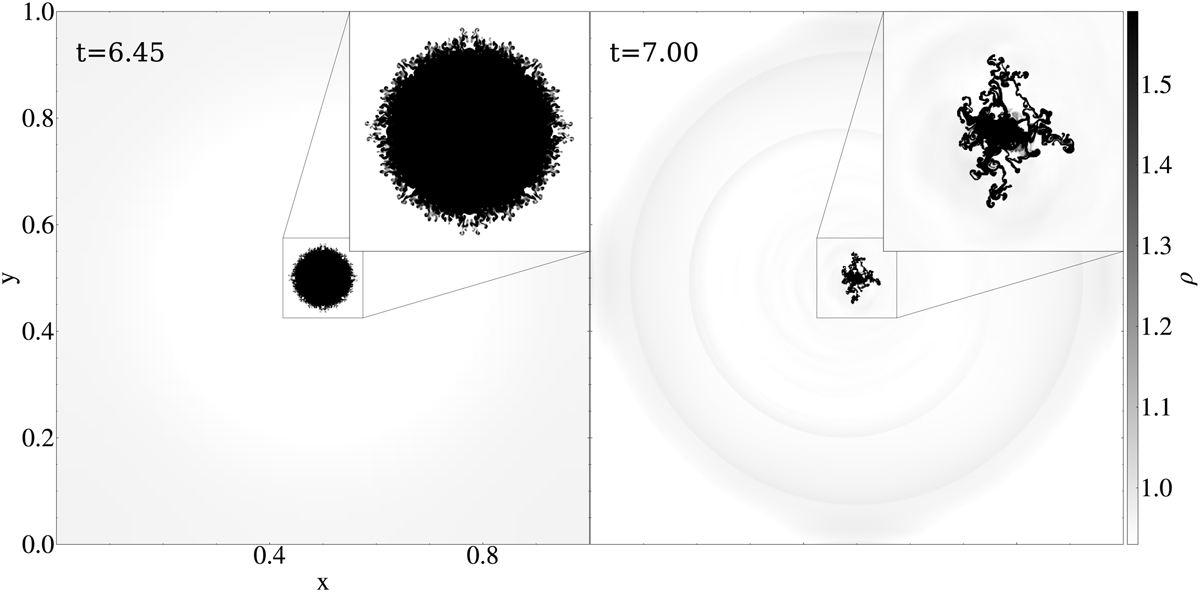

Fig. 5 2D hydro runaway thermal condensation test. Density distributions are at times t = 6.45 (left) and t = 7 (right). The insets show zoomed-in views with more detail. An animation of this 2D hydro test is provided online.

4.1.3 Thermally unstable evolutions

In many astrophysical contexts, one encounters complex multiphase physics, where cold and hot material coexist and interact. In solar physics, the million-degree hot corona is pervaded by cold (order 10000 K) condensations that appear as large-scale prominences or as more transient, smaller-scale coronal rain. Spontaneous in situ condensations can derive from optically thin radiative losses, and Hermans & Keppens (2021) investigated how the precise radiative loss prescription can influence the thermal instability process and its further nonlinear evolution in 2D magnetized settings. In practice, optically thin radiative losses can be handled by the addition of a localized energy sink term, depending on density and temperature, and MPI-AMRVAC provides a choice among 20 implemented cooling tables, as documented in the appendix of Hermans & Keppens (2021). The very same process of thermal instability, with its runaway condensation formation, is invoked for the so-called chaotic cold accretion (Gaspari et al. 2013) scenario onto black holes, or for the multiphase nature of winds and outflows in Active Galactic Nuclei (Waters et al. 2021), or for some of the fine-structure found in stellar wind-wind interaction zones (van Marle & Keppens 2012). Here, we introduce a new and reproducible test for handling thermal runaway in a 2D hydro setting. In van Marle & Keppens (2011), we inter-compared explicit to (semi)implicit ways for handling the localized source term, and confirmed the exact integration method of Townsend (2009) as a robust means to handle the extreme temperature-density variations that can be encountered. Using this method in combination with the SPEX_DM cooling curve Λ(T) (from Schure et al. 2009, combined with the low-temperature behavior as used by Dalgarno & McCray 1972), we set up a double-periodic unit square domain, resolved by a 64 × 64 base grid, and we allow for an additional 6 AMR levels. 
We use a five-step SSPRK(5,4) time integration, combined with the HLLC flux scheme, employing the wenozp5 limiter. We simulate until time t = 7, where the initial condition is a static (no flow) medium, of uniform pressure p = 1/γ throughout (with γ = 5/3). The density is initially ρ = 1.1 inside, and ρ = 1 outside of a circle of radius r = 0.15. To trigger this setup into a thermal runaway process, the energy equation not only has the optically thin $\propto \rho^{2}\Lambda(T)$ sink term handled by the Townsend (2009) method, but also adds a special energy source term that balances exactly these radiative losses corresponding to the exterior ρ = 1, p = 1/γ settings. A proper implementation where ρ = 1 throughout would hence stay unaltered forever. Since the optically thin losses (and gains) require us to introduce dimensional factors (as Λ(T) requires the temperature T in Kelvin), we introduce units for length $L_u = 10^{9}$ cm, for temperature $T_u = 10^{6}$ K, and for number density $n_u = 10^{9}$ cm$^{-3}$. All other dimensional factors can be derived from these three.
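How the remaining unit factors follow from the three chosen ones can be sketched as below. This is only an illustration under a loudly stated assumption: a fully ionized, pure hydrogen plasma, so that ρ = m_p n and p = 2 n k_B T. The convention actually used in the code (for example, a helium abundance) may differ, and the function name is hypothetical.

```python
import math

PROTON_MASS_G = 1.67262192e-24   # proton mass [g]
BOLTZMANN_ERG_K = 1.380649e-16   # Boltzmann constant [erg/K]


def derived_units(length_cm, temperature_k, number_density_cm3):
    """Derive cgs units for density, pressure, velocity and time.

    Assumption (not from the text): fully ionized pure hydrogen,
    i.e. rho = m_p * n and p = 2 * n * k_B * T.
    """
    density = PROTON_MASS_G * number_density_cm3
    pressure = 2.0 * number_density_cm3 * BOLTZMANN_ERG_K * temperature_k
    velocity = math.sqrt(pressure / density)
    time = length_cm / velocity
    return {"density": density, "pressure": pressure,
            "velocity": velocity, "time": time}


# The three units quoted in the text.
units = derived_units(1.0e9, 1.0e6, 1.0e9)
for name, value in units.items():
    print(f"{name:>9s} unit: {value:.4e} (cgs)")
```

Under this assumption the time unit is on the order of a minute, so the run to t = 7 would cover several minutes of physical time.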

As losses overwhelm the constant heating term within the circle r < 0.15, the setup naturally evolves to a largely spherically symmetric, constantly shrinking central density enhancement. This happens so rapidly that ultimately Rayleigh-Taylor-driven substructures form on the “imploding” density. Time t = 6.45 shown in Fig. 5 typifies this stage of the evolution, where one notices the centrally shrunk density enhancement, and fine structure along its entire edge. Up to this time, our implementation never encountered any faulty negative pressure, so no artificial bootstrapping (briefly discussed in Sect. 8.4) was in effect. However, to get beyond this stage, we did activate an averaging procedure on density-pressure when an isolated grid cell did result in unphysical pressure values $p < 10^{-14}$. Doing so, the simulation can be continued up to the stage where a more erratically behaving, highly dynamical and filamentary condensation forms, shown in the right panel of Fig. 5 at t = 7 (see also the accompanying movie). A similar HD transition – due to thermal instability and its radiative runaway – into a highly fragmented, rapidly evolving condensation is discussed in the appendix of Hermans & Keppens (2021), in that case as thermal runaway happens after interacting sound waves damp away due to radiative losses. An ongoing debate (e.g., McCourt et al. 2018; Gronke & Oh 2020) on whether this process is best described as “shattering” versus “splattering,” could perhaps benefit from this simple benchmark test$^{11}$ to separate possible numerical from physical influences.

4.2 MHD tests and applications

The following three sections illustrate differences due to the choice of the MHD flux scheme (see Table 3) in a 2D ideal MHD shock-cloud setup (Sect. 4.2.1), differences due to varying the magnetic monopole control in a 2D resistive MHD evolution (Sect. 4.2.2), as well as a 1D test showcasing ambipolar MHD effects on wave propagation through a stratified magnetized atmosphere (Sect. 4.2.3). We used the latter test to evaluate the behavior of the various super-time-stepping (STS) strategies available in MPI-AMRVAC for handling specific parabolic source additions. This test also employs the more generic splitting strategy usable in gravitationally stratified settings, also adopted recently in Yadav et al. (2022). We note that the mhd module offers many more possibilities than showcased here: we can, for example, drop the energy evolution equation in favor of an isothermal or polytropic closure, can ask to solve for internal energy density instead of the full (magnetic plus kinetic plus thermal) energy density, and have switches to activate anisotropic thermal conduction, optically thin radiative losses, viscosity, external gravity, as well as Hall and/or ambipolar effects.

4.2.1 Shock-cloud in MHD: Alfvén hits Alfvén

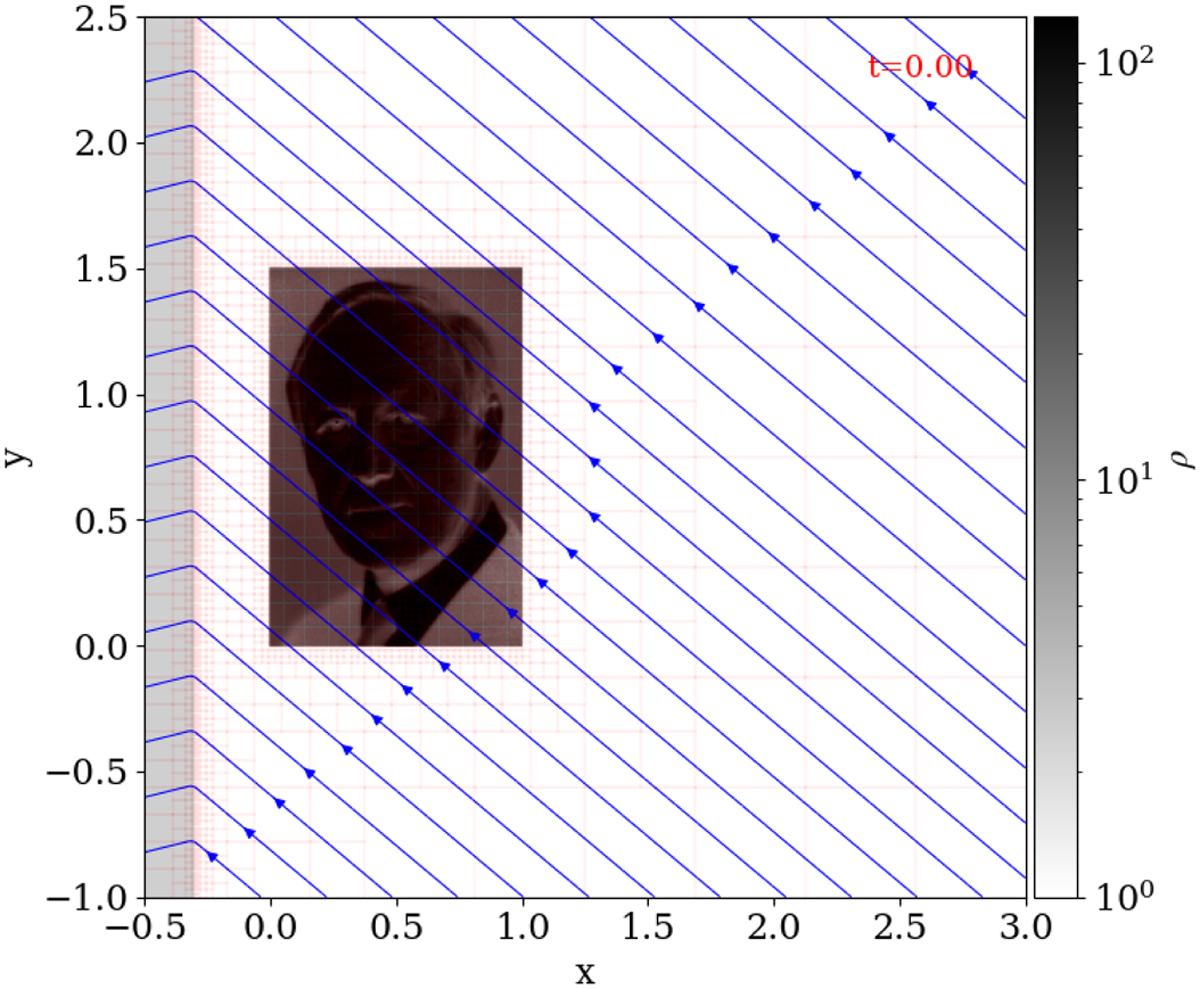

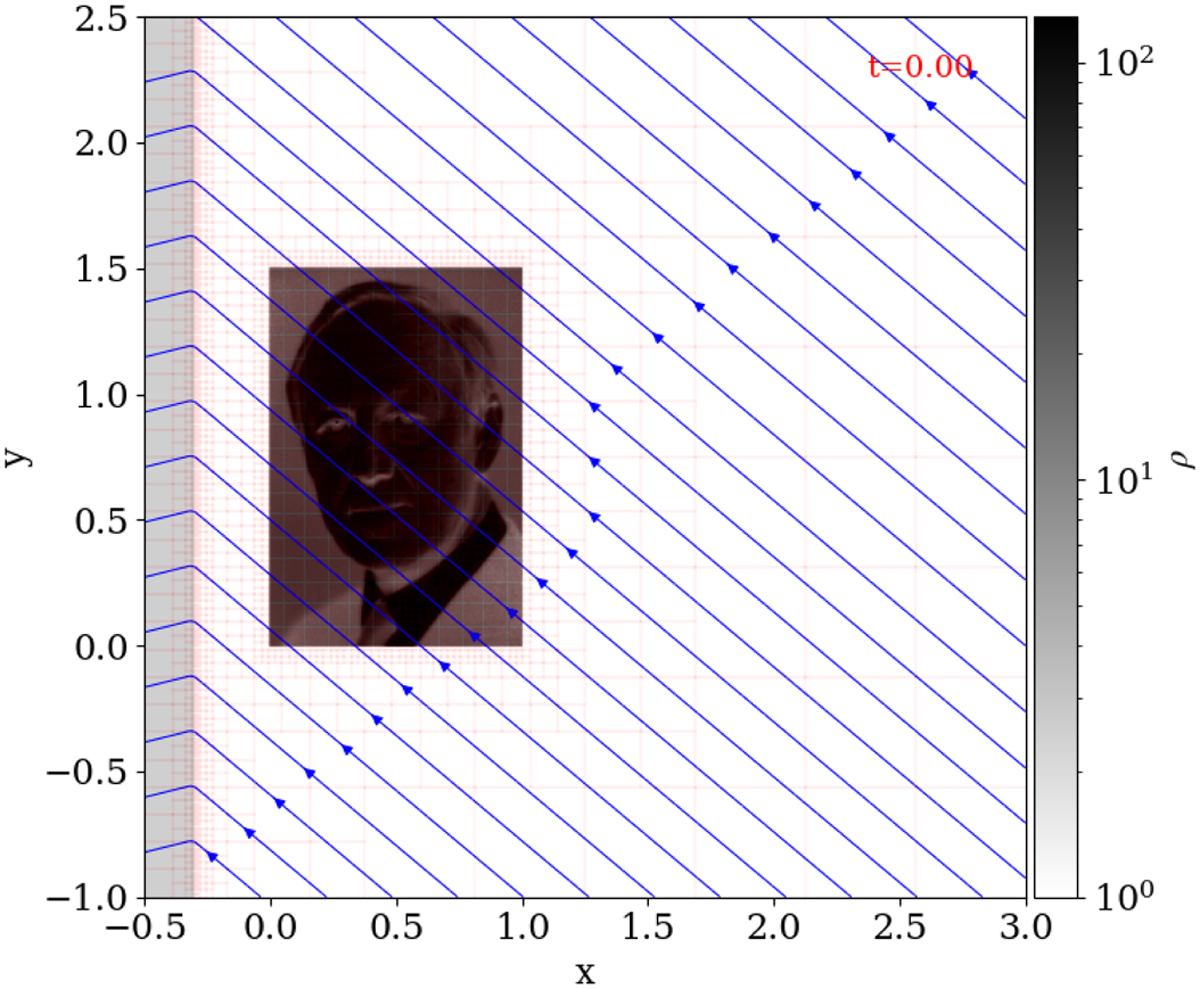

Shock-cloud interactions, where a shock front moves toward and interacts with a prescribed density variation, appear in many standard (M)HD code tests or in actual astrophysical applications. Here, we introduce an MHD shock-cloud interaction where an intermediate (also called Alfvén) shock impacts a cloud region that has a picture of Alfvén himself imprinted on it. This then simultaneously demonstrates how any multidimensional (2D or 3D) setup can initialize certain variables (in this case, the density at t = 0) in a user-selected area of the domain by reading in a separate, structured data set: in this case a vtk-file containing Alfvén’s image as a lookup table$^{12}$ on a [0, 1] × [0, 1.5] rectangle. The 2D domain for the MHD setup takes (x, y) ∈ [−0.5, 3] × [−1, 2.5], and the pre-shock static medium is found where x > −0.3, setting ρ = 1 and p = 1/γ (γ = 5/3). The data read in from the image file are then used to change only the density in the subregion [0, 1] × [0, 1.5] to $\rho = 1 + f_s I(x, y)$, where a scale factor $f_s = 0.5$ reduces the image I(x, y) range (containing values between 0 and 256, as usual for image data). We note that the regularly spaced input image values will be properly interpolated to the hierarchical AMR grid, and that this AMR hierarchy auto-adjusts to resolve the image at the highest grid level in use. The square domain is covered by a base grid of size $128^{2}$, but with a total of 6 grid levels, we achieve a finest grid cell of size 0.0008545 (to be compared to the 0.002 spacing of the original image).
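The quoted finest cell size follows directly from the domain width, the base grid, and the number of levels. A minimal check, assuming a refinement ratio of 2 between successive levels (the helper name is hypothetical):

```python
def finest_cell_size(domain_length, base_cells, levels, refine_ratio=2):
    """Return (cell size, effective cell count) on the finest AMR level.

    Level 1 is the base grid; each further level refines by `refine_ratio`
    (assumed to be 2 here).
    """
    effective_cells = base_cells * refine_ratio ** (levels - 1)
    return domain_length / effective_cells, effective_cells


# Domain width 3 - (-0.5) = 3.5, base grid 128, 6 levels in total.
dx_finest, n_effective = finest_cell_size(3.5, 128, 6)
print(n_effective, dx_finest)
```

The same arithmetic gives the effective 4096² resolution against which the uniform 8192² reference run is compared.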

To realize an Alfvén shock, a shock where the magnetic field lines flip over the shock normal (i.e., the $B_y$ component changes sign across x = −0.3), we solved for the intermediate speed solution of the shock adiabatic, parametrized by three input values: (1) the compression ratio δ (here quantifying the post-shock density); (2) the plasma beta of the pre-shock region; and (3) the angle between the shock normal and the pre-shock magnetic field. Ideal MHD theory constrains δ ∈ [1, (γ + 1)/(γ − 1)], and these three parameters suffice to then compute the three admissible roots of the shock adiabatic that correspond to slow, intermediate and fast shocks (see, e.g., Gurnett & Bhattacharjee 2017). Selecting the intermediate root of the cubic equation then quantifies the upstream flow speed in the shock frame for a static intermediate shock. Shifting to the frame where the upstream medium is at rest then provides us with values for all post-shock quantities, fully consistent with the prevailing Rankine-Hugoniot conditions. In practice, we took δ = 2.5, an upstream plasma beta $2p/B^{2} = 0.1$, and set the upstream magnetic field using a θ = 40° angle in the pre-shock region, with $B_x = -B\cos(\theta)$ and $B_y = B\sin(\theta)$. This initial condition is illustrated in Fig. 6, showing the density as well as magnetic field lines. The shock-cloud impact is then simulated in ideal MHD till t = 1. Boundary conditions on all sides use continuous (zero gradient) extrapolation.
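As a small worked example of the upstream parameters, the sketch below evaluates the compression-ratio bound and the pre-shock field components from the stated plasma beta and angle. It does not solve the shock adiabatic itself; the function name is hypothetical.

```python
import math

GAMMA = 5.0 / 3.0


def upstream_field(pressure, plasma_beta, theta_deg):
    """Field components from beta = 2p/B^2 and the angle to the shock normal."""
    b_magnitude = math.sqrt(2.0 * pressure / plasma_beta)
    theta = math.radians(theta_deg)
    return -b_magnitude * math.cos(theta), b_magnitude * math.sin(theta)


# Upper bound on the compression ratio; delta = 2.5 lies inside [1, bound].
max_compression = (GAMMA + 1.0) / (GAMMA - 1.0)

# Pre-shock state from the text: p = 1/gamma, beta = 0.1, theta = 40 degrees.
bx, by = upstream_field(1.0 / GAMMA, 0.1, 40.0)
print(max_compression, bx, by)
```

With these inputs the field strength is B = √12, so the upstream medium is strongly magnetized relative to its thermal pressure.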

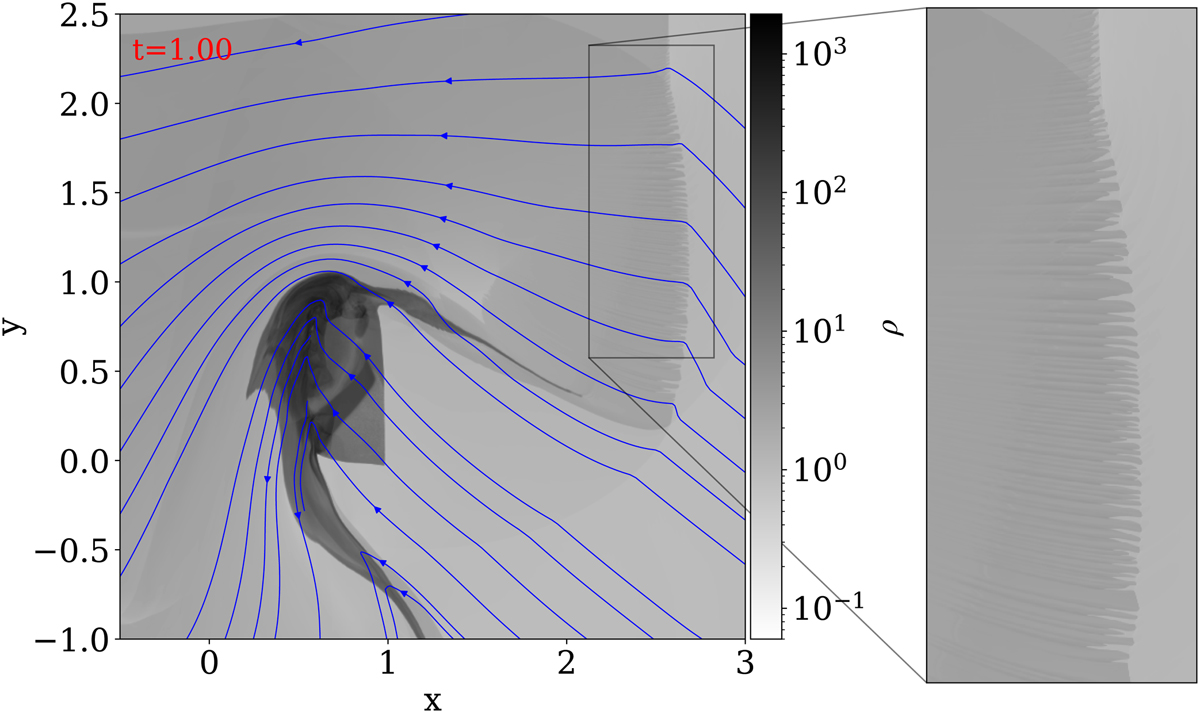

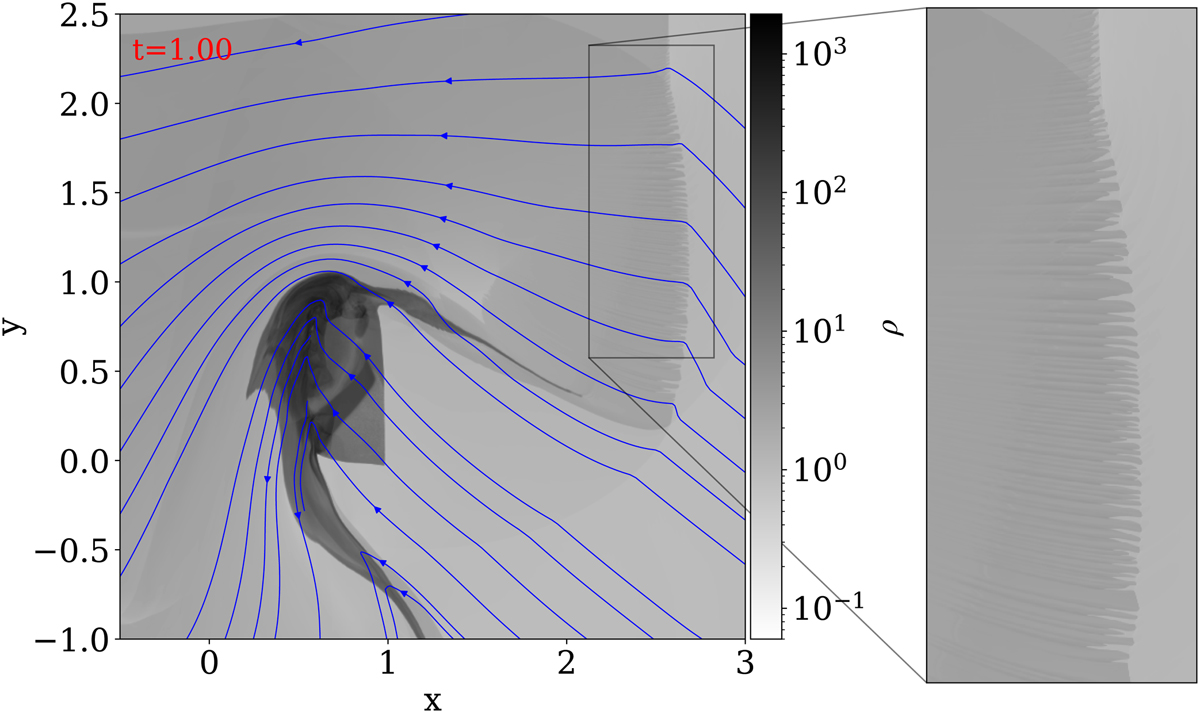

Since there is no actual (analytical) reference solution for this test, we ran a uniform grid case at $8192^{2}$ resolution (i.e., above the effective $4096^{2}$ achieved by the AMR settings). Figure 7 shows the density and the magnetic field structure at t = 1, where the HLLD scheme was combined with a constrained transport approach for handling magnetic monopole control. Our implementation of the HLLD solver follows Miyoshi & Kusano (2005) and Guo et al. (2016a), while divergence control strategies are discussed in the next section, Sect. 4.2.2. A noteworthy detail of the setup involves the corrugated appearance of a rightward-moving shock front that relates to a reflected shock front that forms at first impact. It connects to the original rightward moving shock in a triple point still seen for t = 1 at the top right (x, y) ≈ (2.6, 2.2). This density variation, shown also in a zoomed view in Fig. 7, results from a corrugation instability (that develops most notably beyond t = 0.7).

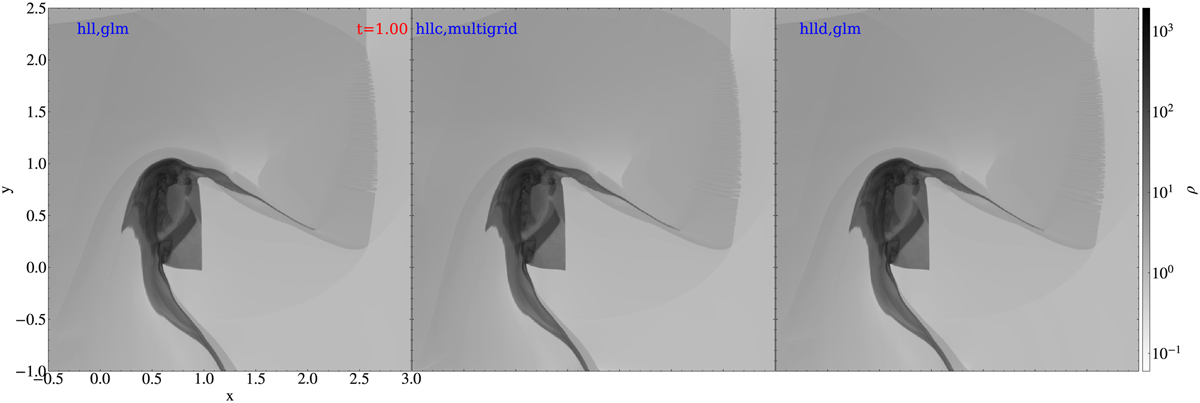

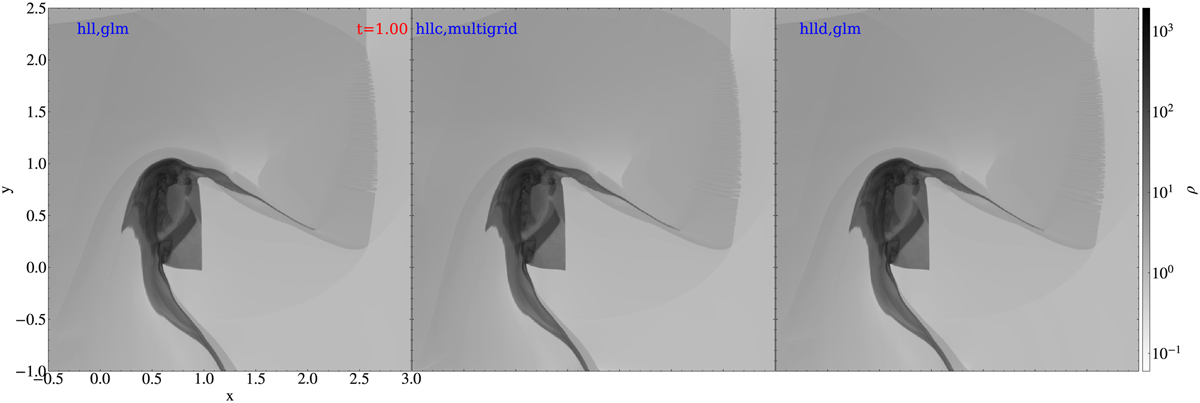

Figure 8 shows the final density distribution obtained with three different combinations of flux schemes, using AMR. We always employed a SSPRK(3,3) three-step explicit time marching with a “koren” limiter (Koren 1993), but varied the flux scheme from HLL, over HLLC, to HLLD. The HLL and HLLD variants used the hyperbolic generalized Lagrange multiplier (or “glm”) idea from Dedner et al. (2002), while the HLLC run exploited the recently added multi-grid functionality for elliptic cleaning (see the next section and Teunissen & Keppens 2019). The density views shown in Fig. 8 are consistent with the reference result, and all combinations clearly demonstrate the corrugation of the reflected shock. We note that all the runs shown here did use a bootstrapping strategy (see Sect. 8.4) to recover automatically from local negative pressure occurrences (they occur far into the nonlinear evolution), where we used the averaging approach whenever one encounters a small pressure value below $10^{-7}$.

Fig. 6 Initial density variation for the 2D MHD Alfvén test: a planar Alfvén shock interacts with a density variation set from Alfvén’s image. The AMR block structure and magnetic field lines are overlaid in red and blue, respectively.

Fig. 7 Reference t = 1 uniform grid result for the Alfvén test using HLLD and constrained transport. The grid is uniform and 8192 × 8192. We show density and magnetic field lines, zooming in on the corrugated reflected shock at the right.

Fig. 8 Density view of the shock-cloud test, where an intermediate Alfvén shock impacts an “Alfvén” density field. Left: HLL and glm. Middle: HLLC and multigrid. Right: HLLD and glm. Compare this figure to the reference run from Fig. 7. An animation is provided online.

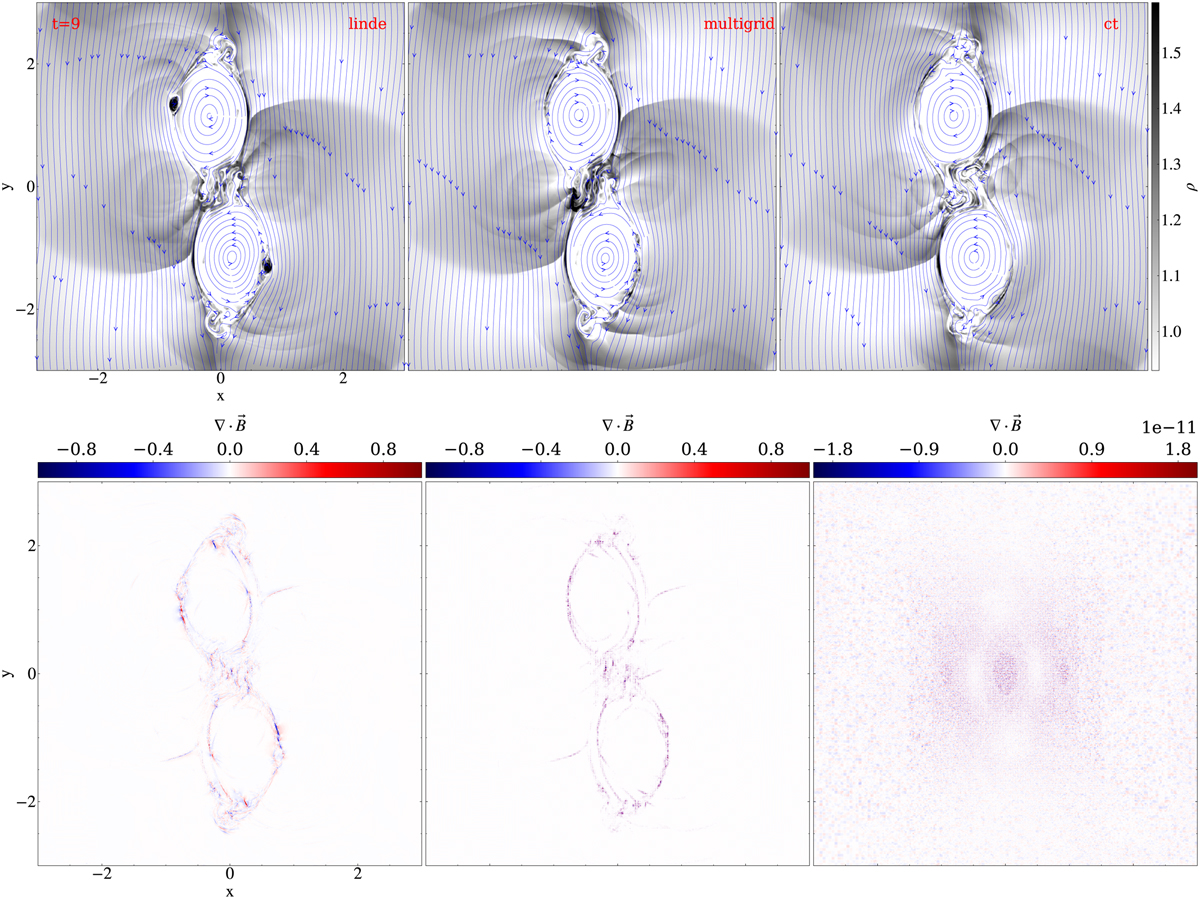

4.2.2 Divergence control in MHD

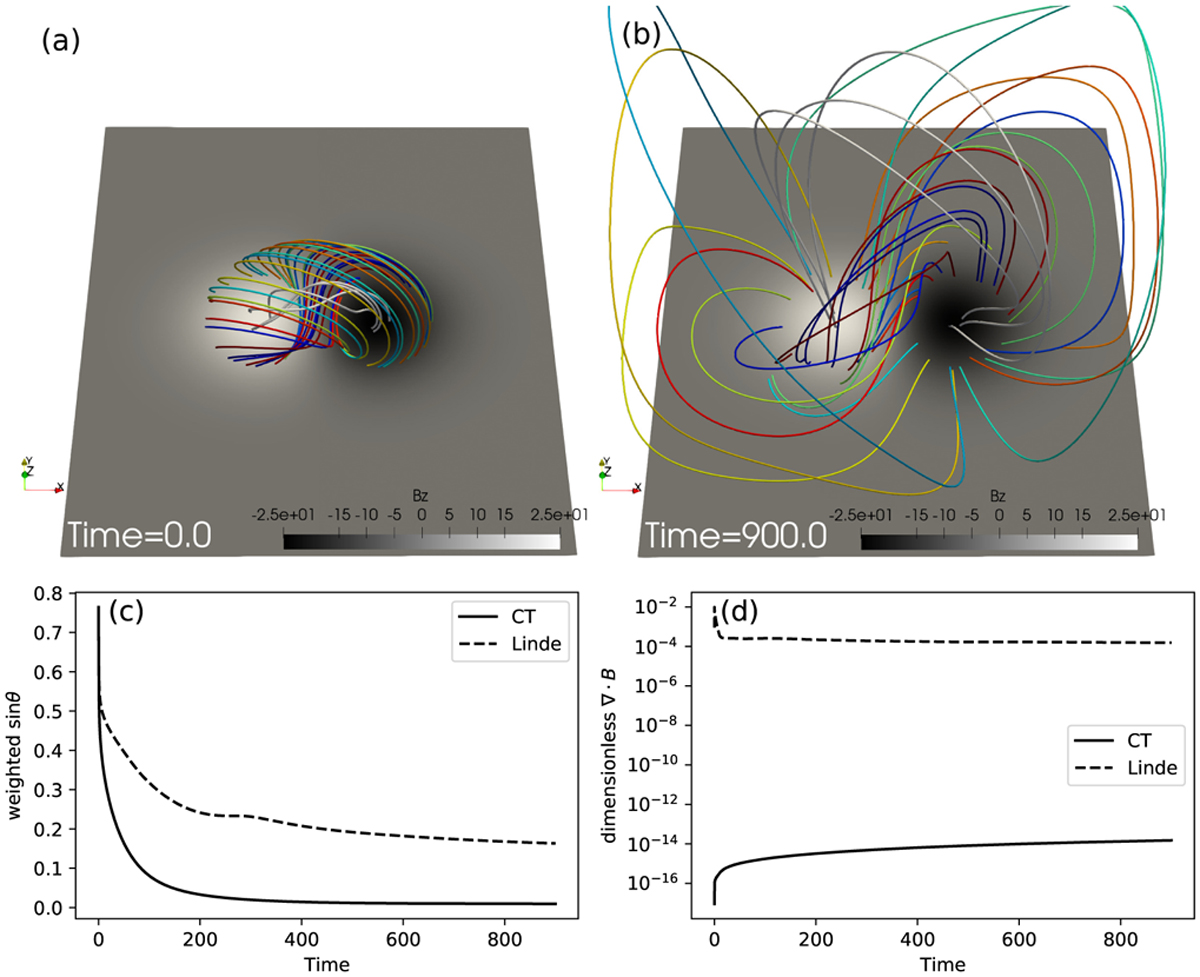

Here, we simulate a 2D resistive MHD evolution that uses a uniform resistivity value η = 0.0001. The simulation exploits a $(x, y) \in [-3, 3]^{2}$ domain, with base resolution $128^{2}$ but effective resolution $1024^{2}$ (4 AMR levels). Always using a five-step SSPRK(5,4) time integration, the HLLC flux scheme, and an “mp5” limiter (Suresh & Huynh 1997), we simulate till t = 9 from an initial condition where an ideal MHD equilibrium is unstable to the ideal tilt instability. We use this test to show different strategies available for discrete magnetic monopole control, and how they lead to overall consistent results in a highly nonlinear, chaotic reconnection regime. This regime was used as a challenging test for different spatial discretizations (finite volume or finite differences) in Keppens et al. (2013), and shown to appear already at ten-fold higher η = 0.001 values.

The initial density is uniform, ρ = 1, while the pressure and magnetic field derive from a vector potential, $\mathbf{B} = \nabla \times A(r, \theta)\,\mathbf{e}_z$, where (r, θ) denote local polar coordinates. In particular,

$$A(r,\theta) = \begin{cases} c\,J_1(r\,r_0)\cos\theta, & r \le 1, \\ \left(r - \dfrac{1}{r}\right)\cos\theta, & r > 1, \end{cases}$$

where $r_0 = 3.8317$ denotes the first root of the Bessel function of the first kind, $J_1$. This makes the magnetic field potential exterior to the unit circle, but non force-free within. An exact equilibrium where the pressure gradient is balanced by Lorentz forces can then take the pressure as the constant value $p_0 = 1/\gamma$ outside the unit circle, while choosing $p = p_0 + 0.5\,[r_0 A(r, \theta)]^{2}$ within it. The constant was set to $c = 2/(r_0 J_0(r_0))$, which makes both A and its radial derivative continuous at r = 1. This setup produces two islands corresponding to antiparallel current systems perpendicular to the simulated plane, which repel. This induces a rotation and separation of the islands whenever a small perturbation is applied: this is due to the ideal tilt instability (also studied in Keppens et al. 2014). A t = 0 small perturbation is achieved by having an incompressible velocity field that follows $\mathbf{v} = \nabla \times \epsilon \exp(-r^{2})\,\mathbf{e}_z$ with amplitude $\epsilon = 10^{-4}$.

This test case$^{13}$ employs a special boundary treatment, where we extrapolate the primitive set of density, velocity components and pressure from the last interior grid cell, while both magnetic field components adopt a zero normal gradient extrapolation (i.e., a discrete formula $y_i = (-y_{i+2} + 4 y_{i+1})/3$ to fill ghost cells at a minimal edge, i.e., a left or bottom edge, and some analogous formula at maximal edges). This is done along all 4 domain edges (left, right, bottom, top). We note that ghost cells must ultimately contain correspondingly consistent conservative variables (density, momenta, total energy and magnetic field).
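The discrete formula can be read as setting the second-order one-sided difference $(-3y_i + 4y_{i+1} - y_{i+2})/(2\Delta)$ to zero and solving for $y_i$. A minimal 1D sketch follows. The function names are hypothetical, and both the sequential outward filling of several ghost layers and the mirrored formula at the maximal edge are assumptions made here for illustration.

```python
def fill_ghosts_min_edge(y, n_ghost):
    """Fill y[0:n_ghost] outward using y_i = (-y_{i+2} + 4 y_{i+1}) / 3."""
    for i in range(n_ghost - 1, -1, -1):
        y[i] = (-y[i + 2] + 4.0 * y[i + 1]) / 3.0
    return y


def fill_ghosts_max_edge(y, n_ghost):
    """Mirrored variant (assumed analog): y_i = (-y_{i-2} + 4 y_{i-1}) / 3."""
    n = len(y)
    for i in range(n - n_ghost, n):
        y[i] = (-y[i - 2] + 4.0 * y[i - 1]) / 3.0
    return y


# Three interior values (1, 4, 9) padded with two ghost cells on each side.
field = [0.0, 0.0, 1.0, 4.0, 9.0, 0.0, 0.0]
fill_ghosts_min_edge(field, 2)
fill_ghosts_max_edge(field, 2)
print(field)
```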

We use this test to highlight differences due to the magnetic monopole control strategy, for which MPI-AMRVAC 3.0 offers a choice between ten different options. These are listed in Table 5, along with relevant references. We note that we provide options to mix strategies (e.g., “lindeglm” both diffuses monopole errors in a parabolic fashion and uses an added hyperbolic variable to advect monopoles). There is a vast amount of literature related to handling monopole errors in combination with shock capturing schemes; for example, the seminal contribution by Tóth (2000) discusses this at length for a series of stringent ideal MHD problems. Here, we demonstrate the effect of three different treatments on a resistive MHD evolution where in the far nonlinear regime of the ideal tilt process, secondary tearing events can occur along the edges of the displaced magnetic islands. These edges correspond to extremely thin current concentrations, and the η = 0.0001 value ensures we can get chaotic island formation. We run the setup as explained above with three different strategies, namely “linde,” “multigrid,” and “ct.” The linde strategy was already compared on ideal MHD settings (a standard 2D MHD rotor and Orszag-Tang problem) in Keppens et al. (2003), while the constrained transport strategy is adopted in analogy to its implementation in the related GR-RMHD BHAC code (Olivares et al. 2019) with an additional option of using the contact-mode upwind constrained transport method by Gardiner & Stone (2005). We note that the use of ct requires us to handle the initial condition, as well as the treatment of the special boundary extrapolations, in a staggered-field tailored fashion, to ensure no discrete monopoles are present from initialization or boundary conditions. The multigrid method realizes the elliptic cleaning strategy as mentioned originally in Brackbill & Barnes (1980) on our hierarchical AMR grid. 
This uses a geometric multigrid solver to handle Poisson’s equation, $\nabla^{2}\phi = \nabla \cdot \mathbf{B}_{\rm before}$, followed by an update $\mathbf{B}_{\rm after} \leftarrow \mathbf{B}_{\rm before} - \nabla\phi$, as described in Teunissen & Keppens (2019).
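The projection step itself can be illustrated on a small periodic grid. Note that the sketch below swaps the geometric multigrid solver for an FFT-based Poisson solve on a uniform, doubly periodic mesh, purely to keep the example short; it is not the AMR implementation described above, and all function names are hypothetical.

```python
import numpy as np


def wavenumbers(n_x, n_y, length):
    """Angular wavenumbers along x (axis 0) and y (axis 1)."""
    kx = 2.0 * np.pi * np.fft.fftfreq(n_x, d=length / n_x)[:, None]
    ky = 2.0 * np.pi * np.fft.fftfreq(n_y, d=length / n_y)[None, :]
    return kx, ky


def divergence(bx, by, length):
    """Spectral evaluation of div B on a periodic grid."""
    kx, ky = wavenumbers(*bx.shape, length)
    div_hat = 1j * kx * np.fft.fft2(bx) + 1j * ky * np.fft.fft2(by)
    return np.real(np.fft.ifft2(div_hat))


def clean_divergence(bx, by, length):
    """Solve laplacian(phi) = div B, then return B - grad(phi)."""
    kx, ky = wavenumbers(*bx.shape, length)
    bx_hat, by_hat = np.fft.fft2(bx), np.fft.fft2(by)
    div_hat = 1j * kx * bx_hat + 1j * ky * by_hat
    k_squared = kx**2 + ky**2
    k_squared[0, 0] = 1.0            # mean mode has zero divergence anyway
    phi_hat = -div_hat / k_squared   # from -k^2 phi_hat = div_hat
    bx_after = np.real(np.fft.ifft2(bx_hat - 1j * kx * phi_hat))
    by_after = np.real(np.fft.ifft2(by_hat - 1j * ky * phi_hat))
    return bx_after, by_after


n, length = 32, 2.0 * np.pi
grid = np.arange(n) * length / n
x, y = np.meshgrid(grid, grid, indexing="ij")
bx_before = np.sin(x) + np.cos(y)          # deliberately not divergence-free
by_before = np.cos(x) * np.sin(2.0 * y)
bx_after, by_after = clean_divergence(bx_before, by_before, length)
div_before = np.max(np.abs(divergence(bx_before, by_before, length)))
div_after = np.max(np.abs(divergence(bx_after, by_after, length)))
print(div_before, div_after)
```

As in the text, the cleaned field is divergence-free only in the discrete operator used for the solve, here the spectral one.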

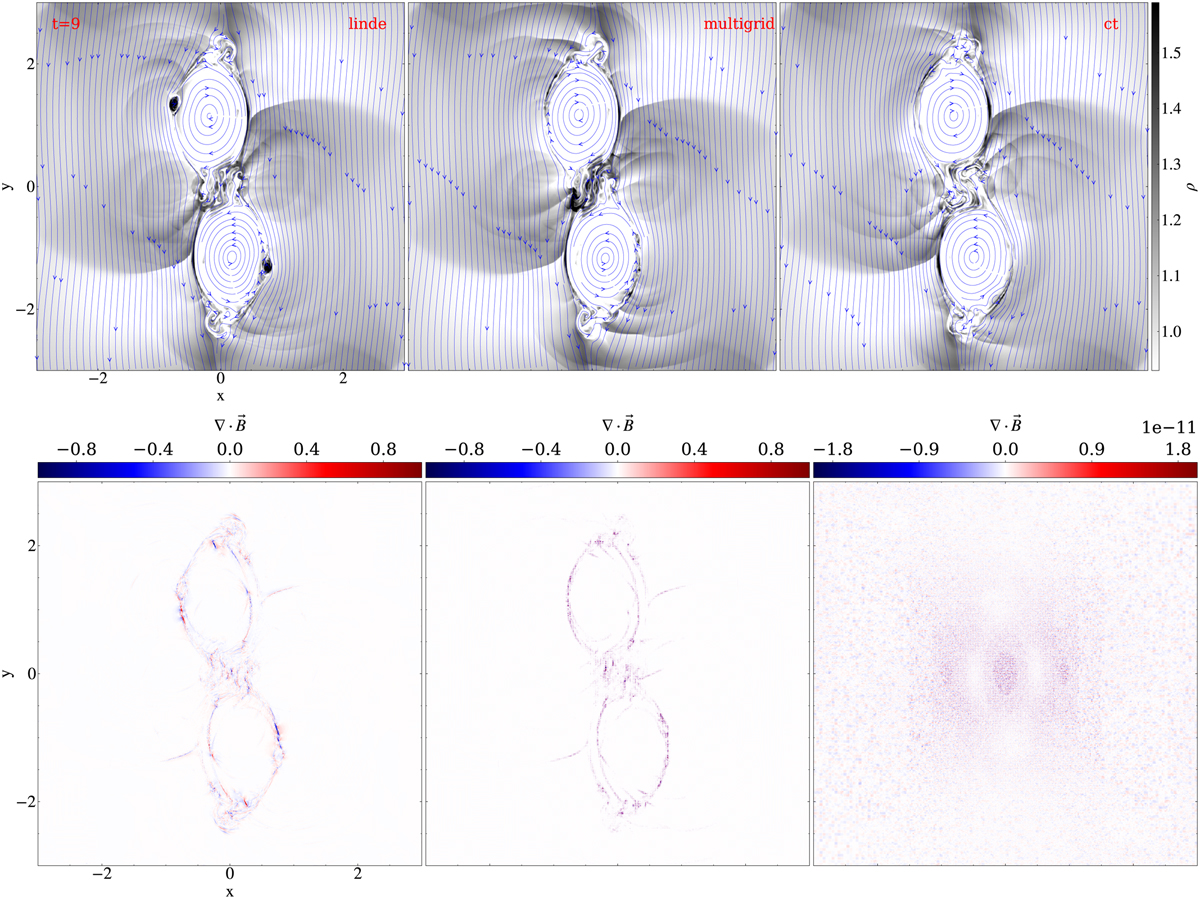

The evolution of the two spontaneously separating islands occurs identically for all three treatments, and it is noteworthy that all runs require no activation of a bootstrap strategy at all (i.e., they always produce positive pressure and density values). We carried out all three simulations up to t = 9, and our end time is shown in Fig. 9. The top panels show the density variations (the density was uniform initially), and one can see many shock fronts associated with the small-scale magnetic islands that appear. Differences between the three runs manifest themselves in where the first secondary islands appear, and how they evolve with time. This indicates how the $1024^{2}$ effective resolution, combined with the SSPRK(5,4)-HLLD-mp5 strategy still is influenced by numerical discretization errors (numerical “resistivity”), although η = 0.0001. Relevant length scales of interest are the cross-sectional size of the plasmoids obtained, which should be resolved by at least several tens of grid cells. A related study of 2D merging flux tubes showing plasmoid formation (Ripperda et al. 2019b) in a resistive, relativistic MHD setting, noted that effective resolutions beyond $8000^{2}$ were needed to confidently obtain strict convergence at high Lundquist numbers.

We also plot the discrete divergence of the magnetic field in the bottom panels. Obviously, the rightmost ct variant realizes negligible (average absolute values at $10^{-12}$–$10^{-11}$ throughout the entire evolution) divergence in its pre-chosen discrete monopole evaluation. Because of a slow accumulation of roundoff errors due to the divergence-preserving nature of the constrained transport method, this divergence can become larger than machine-precision zero, but remains very low. However, in any other discrete evaluation for the divergence, the ct run also displays monopole errors of similar magnitude and distribution as seen in both leftmost bottom panels of Fig. 9. Indeed, truncation-related monopole errors may approach unity in the thinning current sheets, at island edges, or at shock fronts. We note that the field lines as shown in the top panels have been computed by the code’s field-tracing module discussed in Sect. 7.2.

Table 5 Options for ∇ · B control in MPI-AMRVAC 3.0.

Fig. 9 Snapshots at time t = 9 for the 2D (resistive) MHD tilt evolution using, from left to right, different magnetic field divergence cleaning methods: linde, multigrid, and ct. First row: Density. The magnetic field lines are overplotted with blue lines, and, as in Fig. 3, they are computed by MPI-AMRVAC by field line tracing (see Sect. 7.2). Second row: Divergence of the magnetic field. An animation is provided online.

4.2.3 Super-time-stepping and stratification splitting

In a system of PDEs, parabolic terms may impose a very small timestep for an explicit time advance strategy, as $\Delta t \propto \Delta x^{2}$, according to the CFL condition. In combination with AMR, this can easily become too restrictive. This issue can be overcome in practice by the STS technique, which allows the use of a relatively large (beyond the $\Delta x^{2}$ restriction) explicit super-timestep $\Delta t_s$ for the parabolic terms, by subdividing $\Delta t_s$ into carefully chosen smaller sub-steps. This $\Delta t_s$ can, for example, follow from the hyperbolic terms in the PDE alone, when parabolic and hyperbolic updates are handled in a split fashion. Super-time-stepping across $\Delta t_s$ involves an s-stage Runge-Kutta scheme, and its number of stages s and the coefficients used in each stage get adjusted to ensure stability and accuracy. With the s-stage Runge-Kutta in a two-term recursive formulation, one can determine the sub-step length by writing the amplification factor for each sub-step as one involving an orthogonal family of polynomials that follow a similar two-term recursion. The free parameters involved can be fixed by matching the Taylor expansion of the solution to the desired accuracy. The use of either Chebyshev or Legendre polynomials gives rise to two STS techniques described in the literature: Runge-Kutta Chebyshev (RKC; Alexiades et al. 1996) and Runge-Kutta Legendre (RKL; Meyer et al. 2014). The second-order-accurate RKL2 variant was demonstrated on stringent anisotropic thermal conduction in multidimensional MHD settings by Meyer et al. (2014), and in MPI-AMRVAC, the same strategy was first used in a 3D prominence formation study (Xia & Keppens 2016) and a 3D coronal rain setup (Xia et al. 2017). We detailed in Xia et al. (2018) how the discretized parabolic term for anisotropic conduction best uses the slope-limited symmetric scheme introduced by Sharma & Hammett (2007), to preserve monotonicity. RKL1 and RKL2 variants are also implemented in Athena++ (Stone et al. 2020).
RKC variants were demonstrated on Hall MHD and ambipolar effects by O’Sullivan & Downes (2006, 2007), and used for handling ambipolar diffusion in MHD settings in the codes MANCHA3D (González-Morales et al. 2018) and Bifrost (Nóbrega-Siverio et al. 2020).
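The two-term recursion can be made concrete with the first-order RKL1 variant of Meyer et al. (2014), applied here to plain 1D periodic diffusion. This is a minimal sketch rather than the MPI-AMRVAC implementation: the function names, the test problem and the centered Laplacian are choices made for this illustration. The stage count s follows from the RKL1 bound $\Delta t_s \le \Delta t_{\rm expl}\,(s^{2}+s)/2$.

```python
import math
import numpy as np


def stages_for(dt_super, dt_explicit):
    """Smallest s with dt_super <= dt_explicit * (s^2 + s) / 2."""
    return math.ceil(0.5 * (-1.0 + math.sqrt(1.0 + 8.0 * dt_super / dt_explicit)))


def rkl1_step(u, dt_super, s, rhs):
    """One RKL1 super-step: Y_j = mu_j Y_{j-1} + nu_j Y_{j-2} + mu~_j dt L(Y_{j-1})."""
    w = 2.0 / (s * s + s)
    y_prev2 = u
    y_prev1 = u + w * dt_super * rhs(u)            # stage j = 1
    for j in range(2, s + 1):
        mu = (2.0 * j - 1.0) / j
        nu = (1.0 - j) / j
        y = mu * y_prev1 + nu * y_prev2 + mu * w * dt_super * rhs(y_prev1)
        y_prev2, y_prev1 = y_prev1, y
    return y_prev1


def laplacian_periodic(u, dx, kappa=1.0):
    """Centered second difference with periodic wrap-around."""
    return kappa * (np.roll(u, 1) - 2.0 * u + np.roll(u, -1)) / dx**2


n = 64
dx = 1.0 / n
x = np.arange(n) * dx
dt_explicit = 0.5 * dx**2            # forward Euler stability limit for kappa = 1
dt_super = 50.0 * dt_explicit        # far beyond the explicit limit
s = stages_for(dt_super, dt_explicit)

u0 = np.sin(2.0 * np.pi * x)
u1 = rkl1_step(u0, dt_super, s, lambda v: laplacian_periodic(v, dx))
exact_amplitude = math.exp(-4.0 * math.pi**2 * dt_super)
print(s, np.max(np.abs(u1)), exact_amplitude)
```

Covering 50 explicit steps with s = 10 stages is where the saving comes from; the price in this first-order variant is a visible, but bounded, temporal error.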

The STS method eliminates the timestep restriction of explicit schemes and it is faster than standard sub-cycling. As pointed out in Meyer et al. (2014), compared to the RKC methods, the RKL variants ensure stability during every sub-step (instead of only at the end of the super-timestep), have a larger stability region, do not require adjusting the final timestep (roundoff errors), and are more efficient (smaller number of sub-cycles). However, RKL methods require four times more storage compared to the RKC methods.

Both STS methods have been implemented in MPI-AMRVAC 3.0 and can be used for any parabolic source term. We specifically use STS for (anisotropic) thermal conductivity and ambipolar effects in MHD. The strategy can also be used for isotropic HD conduction or in the plasma component of a plasma-neutral setup. There are three splitting strategies to add the parabolic source term in MPI-AMRVAC: before the divergence of fluxes is added (referred to as “before”), after (“after”), or in a split (“split”) manner, meaning that the source is added for half a timestep before and half a timestep after the fluxes.
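The ordering implied by the three strategies can be sketched generically as follows. The function and argument names are hypothetical (only the labels “before”, “after” and “split” come from the text), and the two sub-updates are passed in as plain callables.

```python
import math


def advance(u, dt, flux_update, parabolic_update, strategy="after"):
    """Combine a flux update and a parabolic source update over one step dt."""
    if strategy == "before":
        return flux_update(parabolic_update(u, dt), dt)
    if strategy == "after":
        return parabolic_update(flux_update(u, dt), dt)
    if strategy == "split":
        u = parabolic_update(u, 0.5 * dt)   # half step of the source
        u = flux_update(u, dt)              # full step of the fluxes
        return parabolic_update(u, 0.5 * dt)
    raise ValueError(f"unknown strategy: {strategy}")


# Toy scalar problem with exact sub-updates, so all orderings must agree.
def decay_flux(u, dt):
    return u * math.exp(-1.0 * dt)


def decay_source(u, dt):
    return u * math.exp(-3.0 * dt)


results = {name: advance(1.0, 0.1, decay_flux, decay_source, name)
           for name in ("before", "after", "split")}
print(results)
```

For this commuting toy problem the three orderings coincide; for non-commuting operators the “split” variant is the symmetric (Strang-type) choice.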

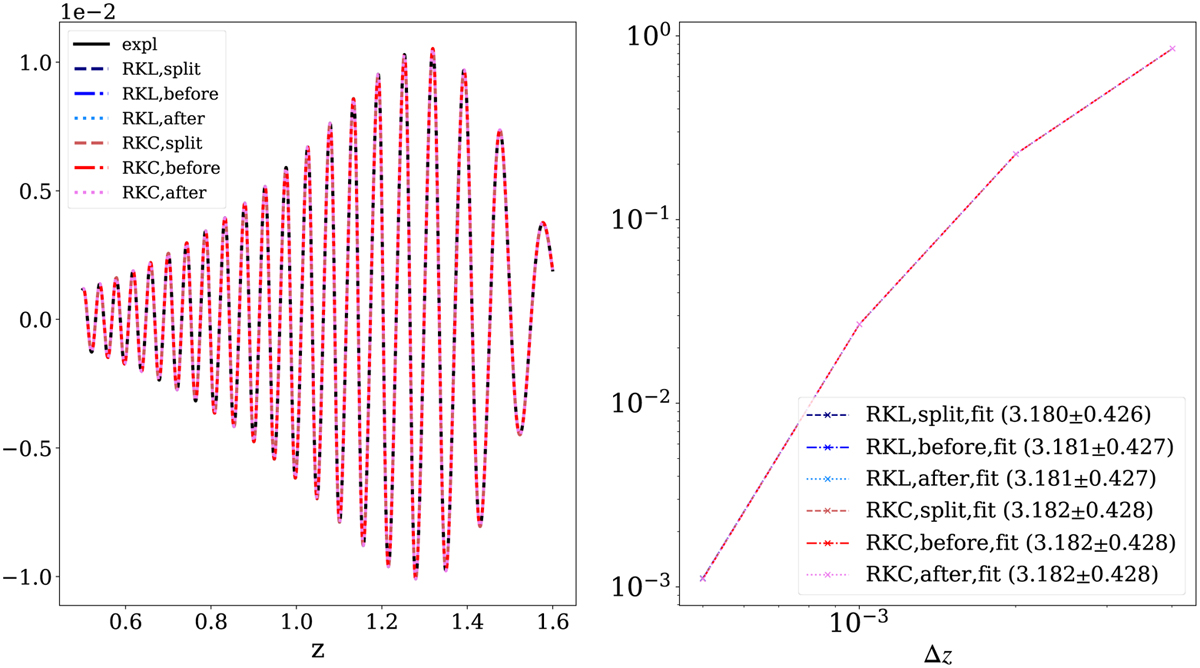

As a demonstration of the now available STS-usage for ambipolar effects, we perform a 1D MHD test of a fast wave traveling upward in a gravitationally stratified atmosphere where partial ionization effects are included through the ambipolar term. Due to this term, such a wave can get damped as it travels up. In the following, we tested both RKC and RKL methods combined with the three strategies before, after, and split for adding the source.

The setup is similar to that employed for a previous study of the ambipolar effect on MHD waves in a 2D setup in Popescu Braileanu & Keppens (2021). The MHD equations solved are for (up to nonlinear) perturbations only, where the variables distinguish between equilibrium (a hydrostatically stratified atmosphere with fixed pressure p0(z) and density ρ0(z)) and perturbed variables, as described in Yadav et al. (2022, see Eqs. (4)–(9)). The geometry adopted is 1.75 D (i.e., all three vector components are included, but only 1D z-variation is allowed). The background magnetic field is horizontal, with a small gradient in the magnetic pressure that balances the gravitational equilibrium. It is important to note that the ambipolar diffusion terms are essential in this test, in order to get wave damping, since a pure MHD variant would see the fast wave amplitude increase, in accord with the background stratification. Ambipolar damping gets more important at higher layers, as the adopted ambipolar diffusion coefficient varies inversely with density-squared. Popescu Braileanu & Keppens (2021) studied cases with varying magnetic field orientation, and made comparisons between simulated wave transformation behavior and approximate local dispersion relations. Here, we use a purely horizontal field and retrieve pure fast mode damping due to ambipolar diffusion.

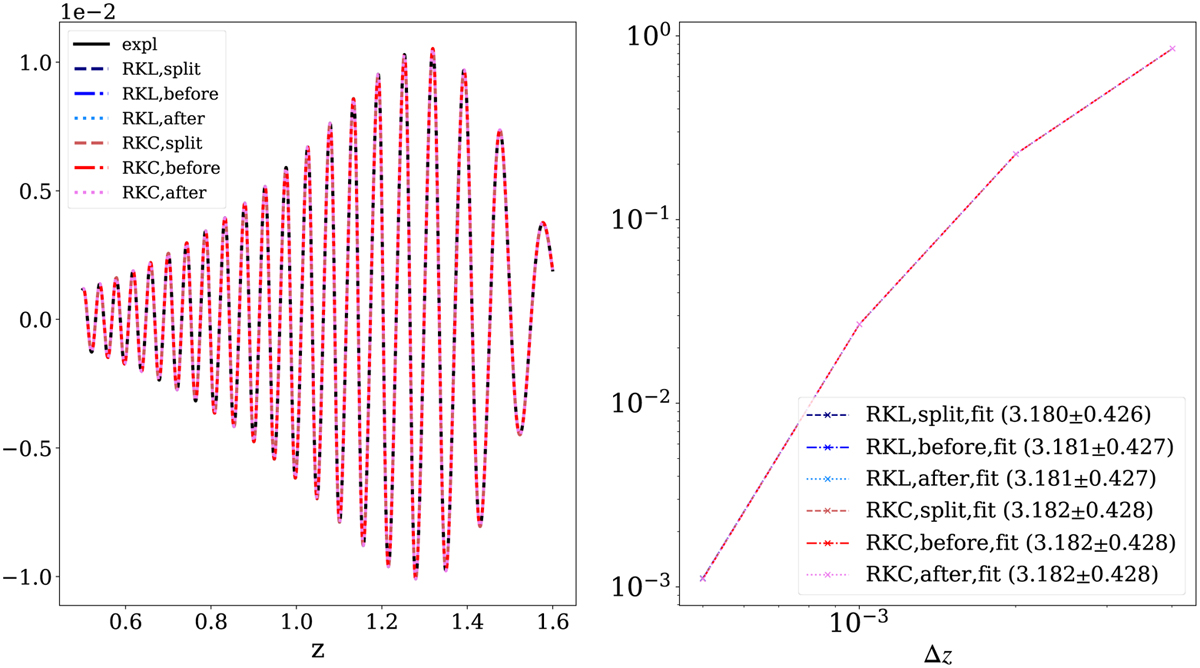

We performed spatio-temporal convergence tests where we ran the simulation using an explicit implementation and 3200 grid points, and took this as the reference solution. The left panel of Fig. 10 shows the reference numerical solution in its vertical velocity profile $\upsilon_z(z)$ at t = 0.7. This panel also overplots the numerical solution with 3200 points for the six STS cases, and we see how the seven solutions overlap. The right panel of Fig. 10 shows the normalized error,

as a function of the cell size $\Delta z = \{5 \times 10^{-4}, 2.5 \times 10^{-4}, 1.25 \times 10^{-4}, 6.25 \times 10^{-5}\}$, where u is the numerical solution obtained using STS and r is the reference numerical solution. We ran simulations for all six STS combinations using 3200, 1600, 800, and 400 points. In all six cases the error curve is the same, and shows an order of convergence larger than 3. We used the HLL flux scheme with a cada3 limiter (Čada & Torrilhon 2009). The temporal scheme was a three-step explicit SSPRK(3,3) integrator.
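An observed order of convergence can be read off from errors at successive resolutions as $p = \log(e_{\rm coarse}/e_{\rm fine}) / \log(\Delta z_{\rm coarse}/\Delta z_{\rm fine})$. The sketch below uses the cell sizes quoted above, but the error values are synthetic, generated from an exact third-order law purely to exercise the formula; they are not the measured errors of Fig. 10.

```python
import math


def observed_orders(cell_sizes, errors):
    """Pairwise convergence orders between successive resolutions."""
    orders = []
    for i in range(len(errors) - 1):
        ratio_h = cell_sizes[i] / cell_sizes[i + 1]
        ratio_e = errors[i] / errors[i + 1]
        orders.append(math.log(ratio_e) / math.log(ratio_h))
    return orders


cell_sizes = [5.0e-4, 2.5e-4, 1.25e-4, 6.25e-5]
# Synthetic (made-up) errors following e = C * dz^3; NOT data from the paper.
synthetic_errors = [40.0 * dz**3 for dz in cell_sizes]
orders = observed_orders(cell_sizes, synthetic_errors)
print(orders)
```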

Table 6 shows the computational cost of this simulation$^{14}$, run with the same number of cores using an explicit implementation and the two variants of the STS technique. We can observe that when the STS technique is employed, the computational time drops by a factor of >10, being slightly smaller for RKC.

Fig. 10 1.75D ambipolar MHD wave test, where fast waves move upward against gravity. Left: vertical velocity profile for the two STS and three different splitting approaches and for an explicit reference run. Right: normalized error, ϵ, from Eq. (14) as a function of the cell size, comparing the numerical solution obtained using STS with a reference numerical solution obtained in an explicit implementation. All variants produce nearly identical results, such that all curves seem to be overlapping.

Table 6 Comparison of the computational times of explicit, RKL, and RKC methods (always exploiting eight cores).

5 IMEX variants

The generic idea of IMEX time integrators is to separate off all stiff parts for implicit evaluations, while handling all non-stiff parts using standard explicit time advancement. If we adopt the common (method-of-lines) approach where the spatial discretization is handled independently from the time dimension, we must time-advance equations of the form

$$\partial_t u = F_{\rm ex}(t, u) + F_{\rm im}(t, u). \tag{15}$$

5.1 Multistep IMEX choices

One-step IMEX schemes

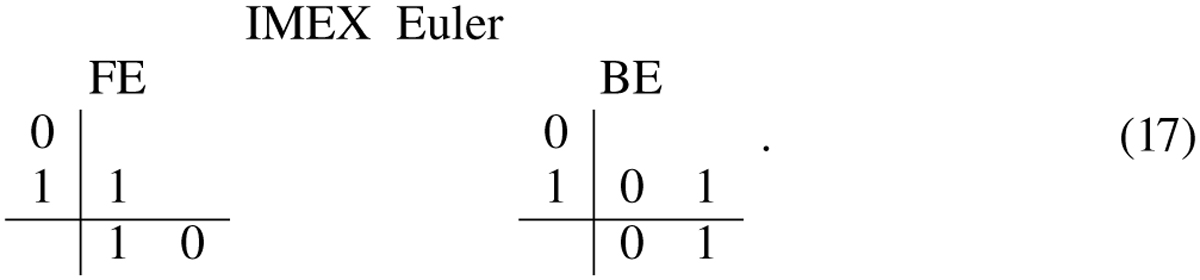

When we combine a first-order, single-step forward Euler (FE) scheme for the explicit part, with a first-order backward Euler (BE) scheme for the implicit part we arrive at an overall first-order accurate scheme, known as the IMEX Euler scheme. We can write the general strategy of this scheme as

and we can denote it by a combination of two Butcher tableaus, as follows:

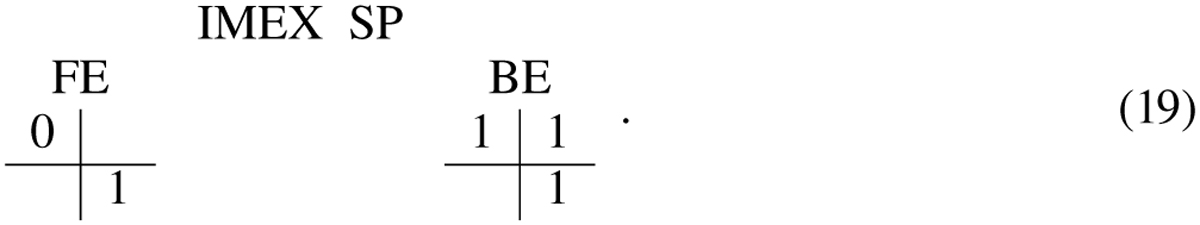

Instead, the IMEX SP combination (with SP denoting a splitting approach), operates as follows: first do an implicit BE step, then perform an explicit FE step, as in

The above two schemes fall under the one-step strategy in MPI-AMRVAC, since only a single explicit advance is needed in each of them.
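Both one-step variants can be sketched on a scalar model problem. This is a minimal illustration, assuming an autonomous problem (so the time arguments are dropped) and a linear stiff part $F_{\rm im}(u) = -k u$ for which the backward Euler solve is available in closed form. The helper names are hypothetical and not the MPI-AMRVAC routines.

```python
STIFF_RATE = 1.0e4   # k in F_im(u) = -k u (assumed toy value)


def f_ex(u):
    """Non-stiff part, treated explicitly."""
    return -u


def solve_implicit(rhs, dt):
    """Return v solving v = rhs + dt * F_im(v) for F_im(v) = -k v."""
    return rhs / (1.0 + STIFF_RATE * dt)


def imex_euler_step(u, dt):
    """Forward Euler on F_ex combined with backward Euler on F_im."""
    return solve_implicit(u + dt * f_ex(u), dt)


def imex_sp_step(u, dt):
    """Splitting: implicit backward Euler step first, then explicit Euler."""
    u_star = solve_implicit(u, dt)
    return u_star + dt * f_ex(u_star)


dt, n_steps = 0.01, 100
u_euler = u_sp = u_explicit = 1.0
for _ in range(n_steps):
    u_euler = imex_euler_step(u_euler, dt)
    u_sp = imex_sp_step(u_sp, dt)
    # Fully explicit Euler for comparison: unstable since dt * k >> 2.
    u_explicit = u_explicit + dt * (f_ex(u_explicit) - STIFF_RATE * u_explicit)
print(u_euler, u_sp, u_explicit)
```

At this timestep the fully explicit update diverges, while both IMEX variants decay, which is the practical motivation for treating the stiff part implicitly.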

Two-step IMEX variants

A higher-order accurate IMEX scheme, given in Hundsdorfer & Verwer (2003, Eq. (4.12) of their chapter IV), is a combination of the implicit trapezoidal (or Crank-Nicolson) scheme and the explicit trapezoidal (or Heun) scheme, and writes as

This scheme is known as the IMEX trapezoidal scheme (sometimes denoted as IMEX CN, as it uses an implicit Crank-Nicolson step). Since it involves one implicit stage, and two explicit stages, while achieving second-order accuracy, the IMEX trapezoidal scheme is denoted as an IMEX(1,2,2) scheme. The IMEX Euler and IMEX SP are both IMEX(1,1,1).
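A minimal sketch of such a scheme on a linear scalar problem is given below, assuming the usual predictor-corrector form: an explicit Euler predictor with an implicit trapezoidal treatment of $F_{\rm im}$, followed by a trapezoidal average of both parts. Time arguments are dropped because the toy problem is autonomous, and the coefficients and helper names are choices made for this illustration.

```python
import math

A_EX, B_IM = -0.5, -2.0   # toy rates: F_ex(u) = A_EX * u, F_im(u) = B_IM * u


def f_ex(u):
    return A_EX * u


def f_im(u):
    return B_IM * u


def solve_implicit(rhs, tau):
    """Return v solving v = rhs + tau * F_im(v) for the linear F_im."""
    return rhs / (1.0 - B_IM * tau)


def imex_trapezoidal_step(u, dt):
    # Predictor: one implicit solve (Crank-Nicolson weight dt/2 on F_im).
    u_pred = solve_implicit(u + dt * f_ex(u) + 0.5 * dt * f_im(u), 0.5 * dt)
    # Corrector: trapezoidal average of explicit and implicit parts.
    return (u + 0.5 * dt * (f_ex(u) + f_ex(u_pred))
              + 0.5 * dt * (f_im(u) + f_im(u_pred)))


def error_at_unit_time(n_steps):
    u, dt = 1.0, 1.0 / n_steps
    for _ in range(n_steps):
        u = imex_trapezoidal_step(u, dt)
    return abs(u - math.exp(A_EX + B_IM))


observed_order = math.log2(error_at_unit_time(50) / error_at_unit_time(100))
print(observed_order)
```

Halving the timestep reduces the error by roughly a factor of four, consistent with the second-order accuracy implied by the IMEX(1,2,2) label.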

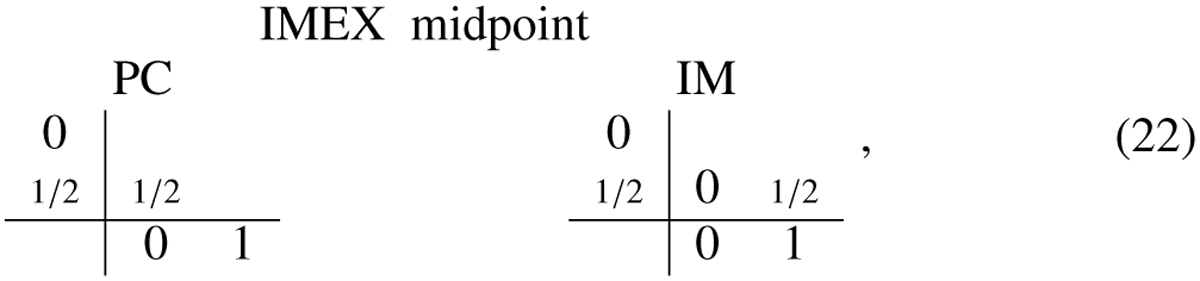

The three IMEX($s_{\rm im}$, $s_{\rm ex}$, p) schemes given by Eqs. (16), (18), and (20) differ in the number of stages used for the implicit ($s_{\rm im}$) versus explicit ($s_{\rm ex}$) parts, and in the overall order of accuracy p. Both IMEX(1,1,1) first-order schemes from Eqs. (16) and (18) require one explicit stage, and one implicit one. The IMEX(1,2,2) trapezoidal scheme from Eq. (20) has one implicit stage, and two explicit ones. We can design another IMEX(1,2,2) scheme by combining the implicit midpoint scheme with a two-step explicit midpoint or Predictor-Corrector scheme. This yields the following double Butcher tableau,

and corresponds to the second-order IMEX midpoint scheme

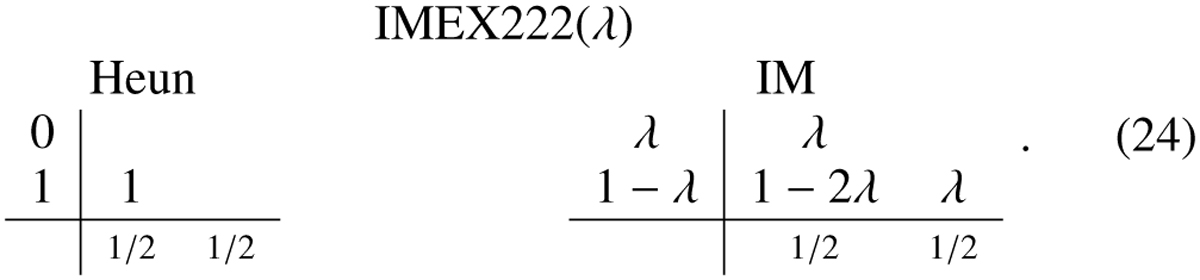

Another variant of a two-step IMEX scheme available in MPI-AMRVAC is known as the IMEX222(λ) scheme from Pareschi & Russo (2005), where a λ parameter can be varied, but the default value $\lambda = 1 - 1/\sqrt{2}$ ensures that the scheme is SSP and L-stable (Izzo & Jackiewicz 2017). It has implicit evaluations at fractional steps λ and (1 − λ). Its double Butcher table reads

Three-step IMEX variants

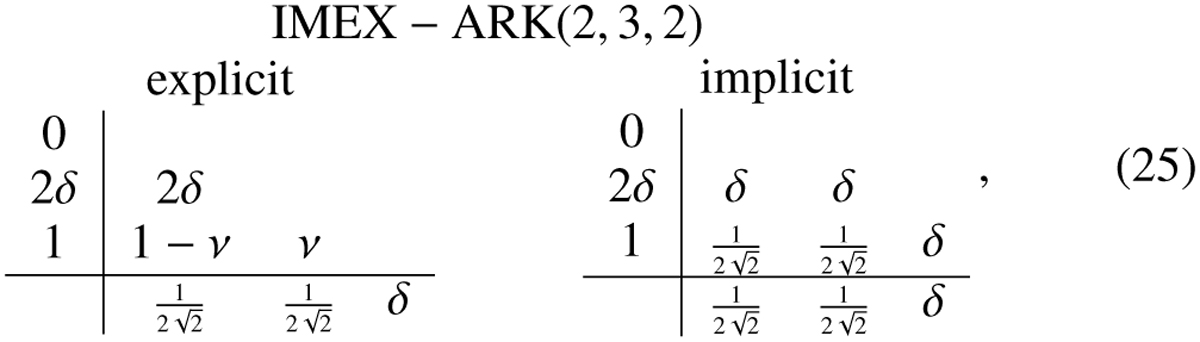

Since we thus far almost exclusively handled Butcher tableaus with everywhere positive entries, we may prefer the IMEX-ARK(2,3,2) scheme (Giraldo et al. 2013), which also has two implicit stages, three explicit stages, at overall second order. It writes as

where we use the fixed values  while  .

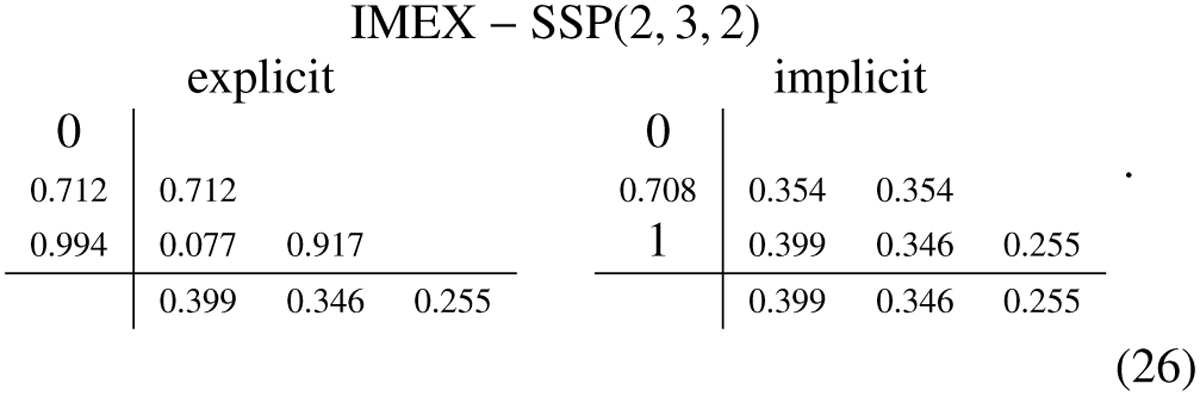

Thus far, in terms of the double Butcher tableaus, we have mostly been combining schemes that have the same left column entries (i.e., sub-step time evaluations) for the implicit and the explicit stages. A possible exception was the IMEX222(λ) scheme. The implicit part was always of diagonally implicit Runge-Kutta (DIRK) type. Since one in practice implements the implicit stages separately from the explicit ones, one can relax the condition for implicit and explicit stages to be at the same time. In Rokhzadi et al. (2018) an IMEX-SSP(2,3,2) scheme with two implicit and three explicit stages was introduced, which indeed relaxes this; it is written as

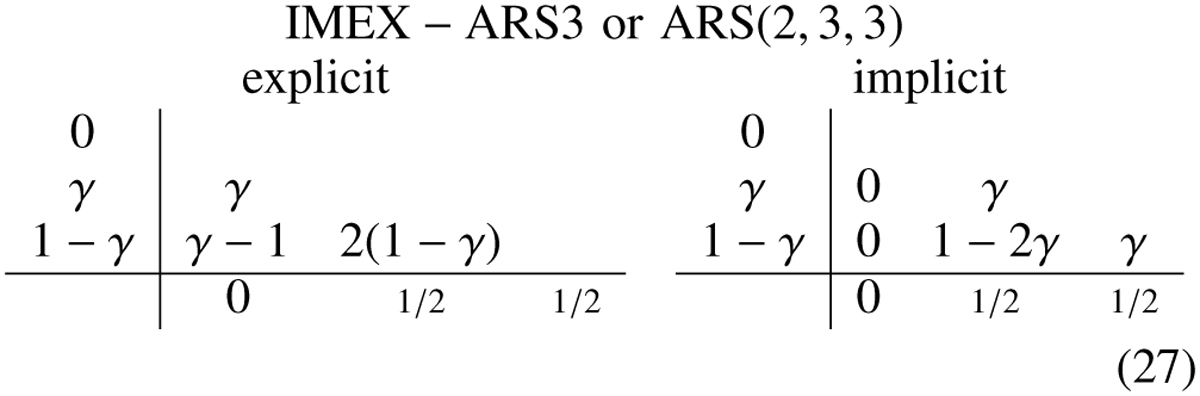

If we allow for tableaus with also negative entries, we may even get third-order IMEX schemes, for example the ARS(2,3,3) scheme by Ascher et al. (1997; also denoted as IMEX-ARS3), where

which uses the fixed value γ = (3 + √3)/6. This has the advantage of having only two implicit stages, which are usually more costly to compute than explicit stages. This ARS3 scheme (Ascher et al. 1997) has been shown to achieve better than second-order accuracy on some tests (Koto 2008), while needing three explicit and two implicit stages.

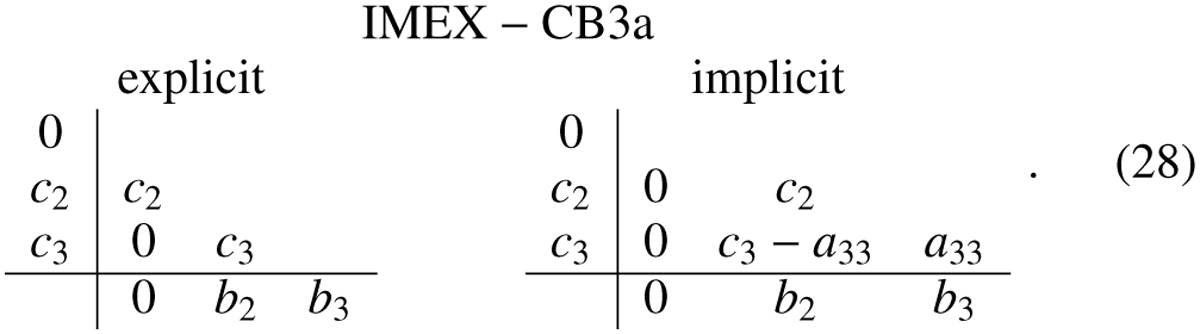

Finally, the IMEX-CB3a scheme, denoted as IMEXRKCB3a in Cavaglieri & Bewley (2015), uses three explicit stages in combination with two implicit stages to arrive at overall third order, so it is an IMEX(2,3,3) variant:

The following relations fix all the values in its Butcher representation:

This scheme has the advantage that a low storage implementation (using 4 registers) is possible.

5.2 IMEX implementation and usage in MPI-AMRVAC

IMEX implementation

The various IMEX schemes are shared between all physics modules, and a generic implementation strategy uses the following pseudo-code ingredients for its efficient implementation. First, we introduced a subroutine,

which solves the (usually global) problem on the instantaneous AMR grid hierarchy given by

$$u_a = u_b + \alpha\,\Delta t\,F_{\rm im}(u_a). \tag{30}$$

This call leaves ub unchanged, and returns ua as the solution of this implicit problem. On entry, both states are available at time tn + βΔt. On exit, state ua is advanced by αΔt and has its boundary updated. Second,

just replaces the ua state with its evaluation (at time t) in the implicit part, that is, ua → Fim(t, ua). Finally, any explicit substep is handled by a subroutine,

which advances the ub state explicitly according to

(31)

along with boundary conditions on ub(tb + αΔt).
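How three such building blocks combine into a complete scheme can be sketched as follows, here for the second-order IMEX midpoint scheme. This is a toy Python model, not the MPI-AMRVAC Fortran source: the names `implicit_update`, `evaluate_implicit`, and `advance_explicit` are hypothetical, the state is a plain list of cell values, boundary handling is omitted, and the implicit operator is a simple linear decay so that the implicit solve is a division.

```python
import math

K_IM = 10.0  # stiffness of the implicitly treated linear decay (illustrative)

def f_ex(u):
    """Explicitly treated part: nonlinear reaction -u**2, cell by cell."""
    return [-x * x for x in u]

def evaluate_implicit(ua):
    """Return F_im(ua) = -K_IM * ua."""
    return [-K_IM * x for x in ua]

def implicit_update(alpha, dt, ub):
    """Solve ua = ub + alpha*dt*F_im(ua) and return ua; ub is unchanged."""
    return [x / (1.0 + alpha * dt * K_IM) for x in ub]

def advance_explicit(alpha, dt, ua, ub):
    """Return ub + alpha*dt*F_ex(ua)."""
    return [b + alpha * dt * f for b, f in zip(ub, f_ex(ua))]

def imex_midpoint_step(u, dt):
    """One IMEX midpoint step assembled from the three building blocks."""
    half = advance_explicit(0.5, dt, u, u)    # u^n + dt/2 * F_ex(u^n)
    mid = implicit_update(0.5, dt, half)      # u^{n+1/2}
    new = advance_explicit(1.0, dt, mid, u)   # u^n + dt * F_ex(u^{n+1/2})
    return [n + dt * f for n, f in zip(new, evaluate_implicit(mid))]

def exact(u0, t):
    """Exact solution of du/dt = -K_IM*u - u**2 with u(0) = u0."""
    e = math.exp(-K_IM * t)
    return K_IM * u0 * e / (K_IM + u0 * (1.0 - e))

def error_at(dt, t_end=0.2, u0=(0.2, 0.5)):
    """Maximum error over all cells at t_end for a fixed step dt."""
    u = list(u0)
    for _ in range(round(t_end / dt)):
        u = imex_midpoint_step(u, dt)
    return max(abs(x - exact(x0, t_end)) for x, x0 in zip(u, u0))
```

Halving the timestep, for instance comparing `error_at(0.01)` with `error_at(0.005)`, should reduce the error by roughly a factor of four. Keeping the implicit solve in a single routine means that every IMEX variant can reuse it with a different α.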


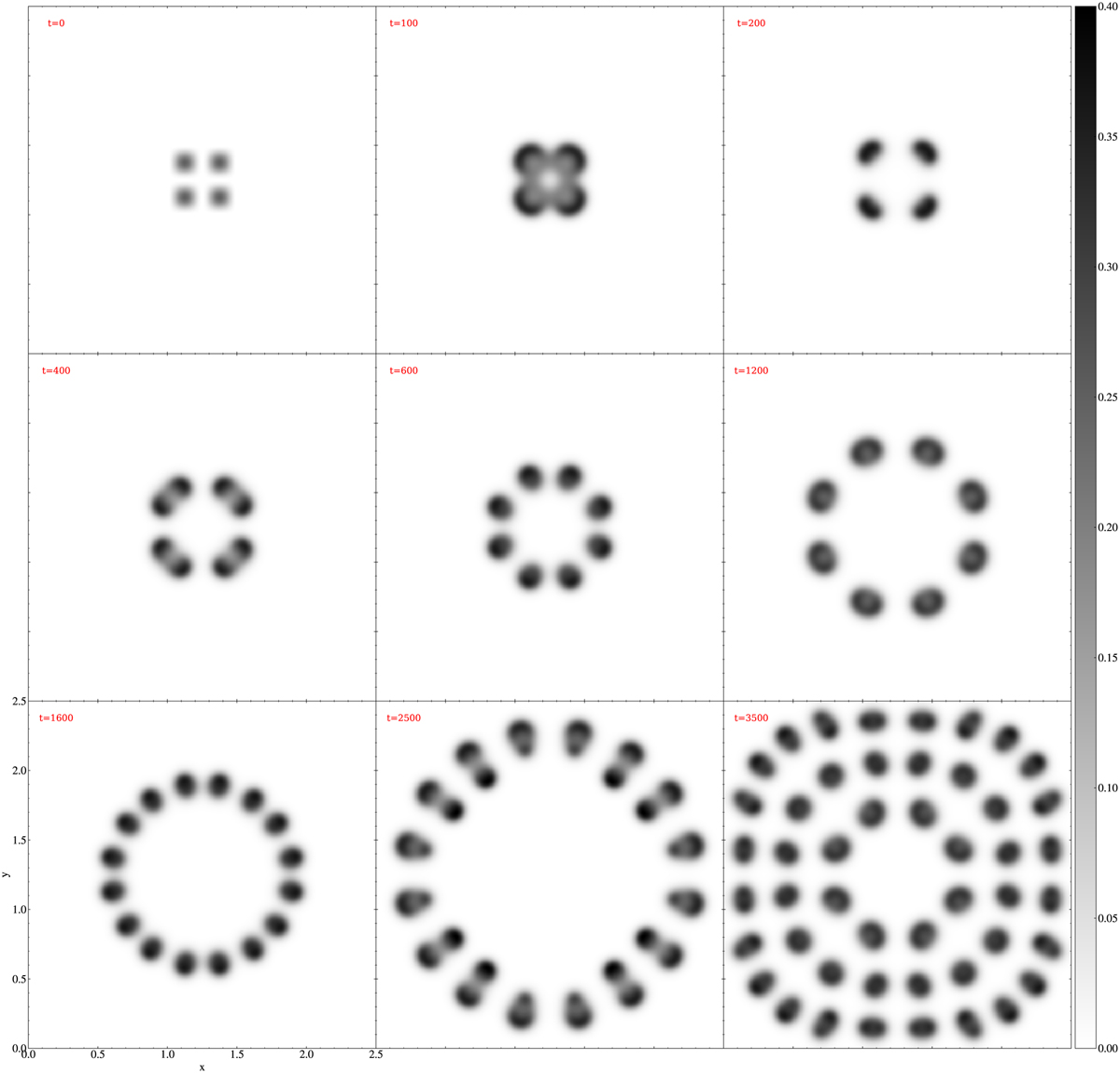

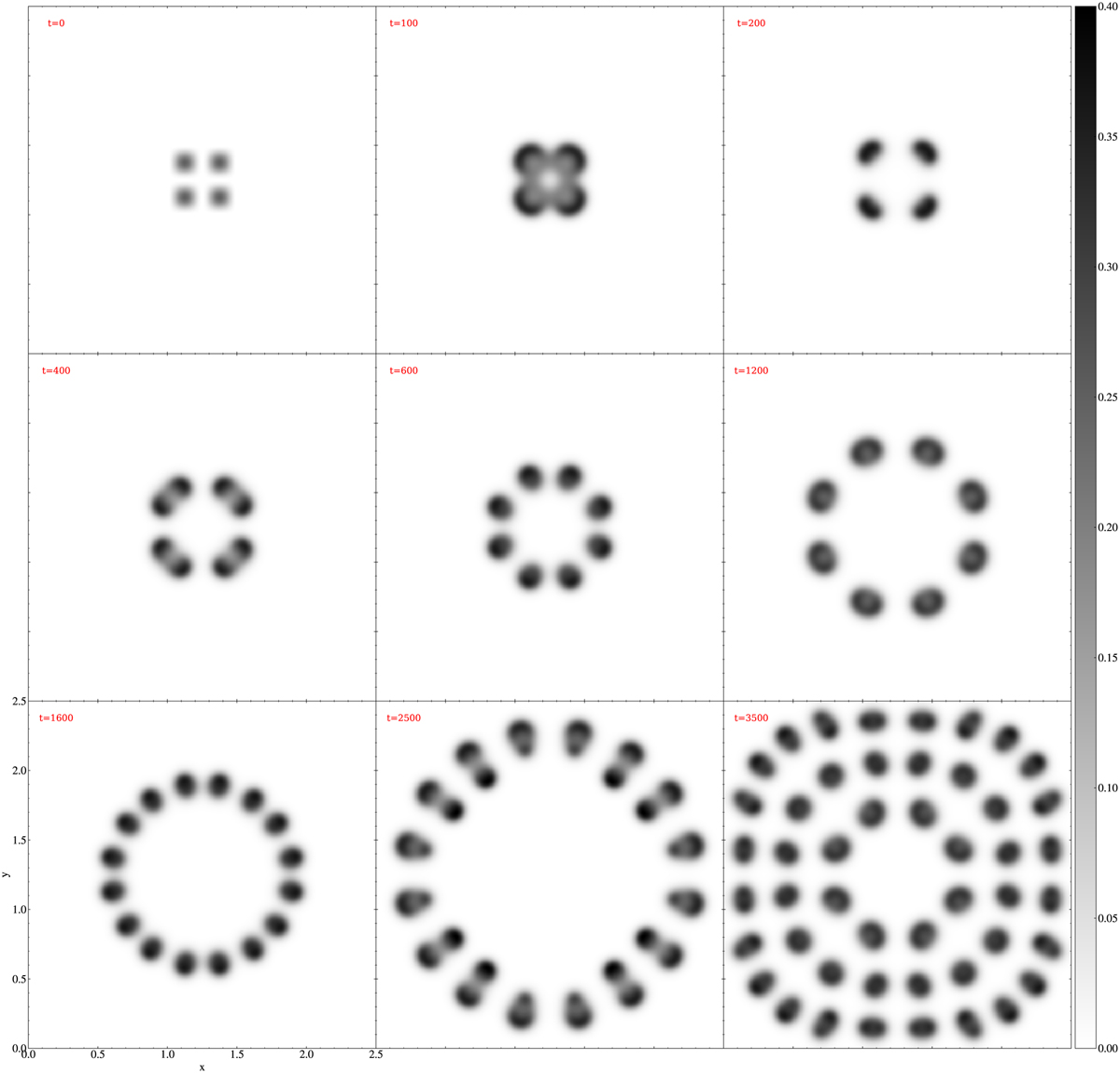

Fig. 11 Temporal evolution of υ(x, y, t) in a pure reaction-diffusion 2D Gray-Scott spot replication simulation. An animation is provided online.

IMEX usage in MPI-AMRVAC

Currently, the IMEX schemes are used for handling (1) stiff diffusion terms, such as encountered in pure reaction-diffusion (or advection-reaction-diffusion) problems, or in the radiation-hydro module using flux-limited diffusion (FLD; Moens et al. 2022); (2) stiff coupling terms, such as in the gas-dust treatment as explained in Sect. 4.1.2, or in the ion-neutral couplings in the two-fluid module (Popescu Braileanu & Keppens 2022).

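As an example of the first (handling stiff diffusion) IMEX use-case, we solve the pure reaction-diffusion 2D Gray-Scott spot replication simulation from Hundsdorfer & Verwer (2003; which also appears in Pearson 1993). The Gray-Scott two-component PDE system for u(x, t) = (u(x, t), υ(x, t)) has the following form:

$$\frac{\partial u}{\partial t} = D_u\nabla^2 u - u\upsilon^2 + F(1-u), \qquad \frac{\partial \upsilon}{\partial t} = D_\upsilon\nabla^2 \upsilon + u\upsilon^2 - (F+k)\upsilon, \tag{32}$$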

where F and k are positive constants, and Du and Dυ are constant diffusion coefficients. We note that the feeding term F(1 − u) drives the concentration of u to one, whereas the term −(F + k)υ removes υ from the system. A wide range of patterns can be generated depending on the values of F and k (Pearson 1993); here we take F = 0.024 and k = 0.06. The diffusion coefficients have values Du = 8 × 10⁻⁵ and Dυ = 4 × 10⁻⁵. The initial conditions consist of a sinusoidal pattern in the center of the domain [0, 2.5]²:
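A minimal Python sketch of the explicitly treated reaction terms and of an initial state for this test is given below. The values of F and k are those quoted in the text. The initial condition is an assumption: it is the form usually quoted for the Hundsdorfer & Verwer (2003) version of this test, with a sin² bump on the central square [1, 1.5]². Function and constant names are illustrative.

```python
import math

F_FEED, K_KILL = 0.024, 0.06   # F and k as quoted in the text

def reaction(u, v):
    """Gray-Scott reaction terms, i.e. the right-hand side without diffusion."""
    uvv = u * v * v
    du = -uvv + F_FEED * (1.0 - u)     # feeding drives u toward one
    dv = uvv - (F_FEED + K_KILL) * v   # removal of v from the system
    return du, dv

def initial_state(x, y):
    """Assumed initial condition: sin^2 pattern on the central square."""
    if 1.0 <= x <= 1.5 and 1.0 <= y <= 1.5:
        v = 0.25 * math.sin(4.0 * math.pi * x) ** 2 \
                 * math.sin(4.0 * math.pi * y) ** 2
    else:
        v = 0.0
    return 1.0 - 2.0 * v, v
```

The state (u, υ) = (1, 0) is a fixed point of the reaction terms, so away from the central perturbation the solution stays at rest until diffusion and replication of the spots reach it.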

Figure 11 shows the temporal evolution of υ(x, y, t) for a high-resolution AMR simulation with a base resolution of 256² cells. The five levels of refinement allow for a maximal effective resolution of 4096² cells. This long-term, high-resolution run shows how the AMR quickly adjusts to the self-replicating, more volume-filling pattern that forms: at t = 100 the coarsest grid occupies a large fraction (0.859) of the total area and the finest level covers only the central 0.066 of the area, whereas at t = 3500, the last time shown in Fig. 11, these fractions have evolved to 0.031 (level 1) and 0.269 (level 5). We note that on a modern 20-CPU desktop (using Intel Xeon Silver 4210 CPUs at 2.20 GHz), this entire run takes only 1053 s, of which less than 10 percent is spent on generating 36 complete file dumps in both the native .dat format and the on-the-fly converted .vtu format (suitable for visualization packages such as ParaView or VisIt; see Sect. 8.5).

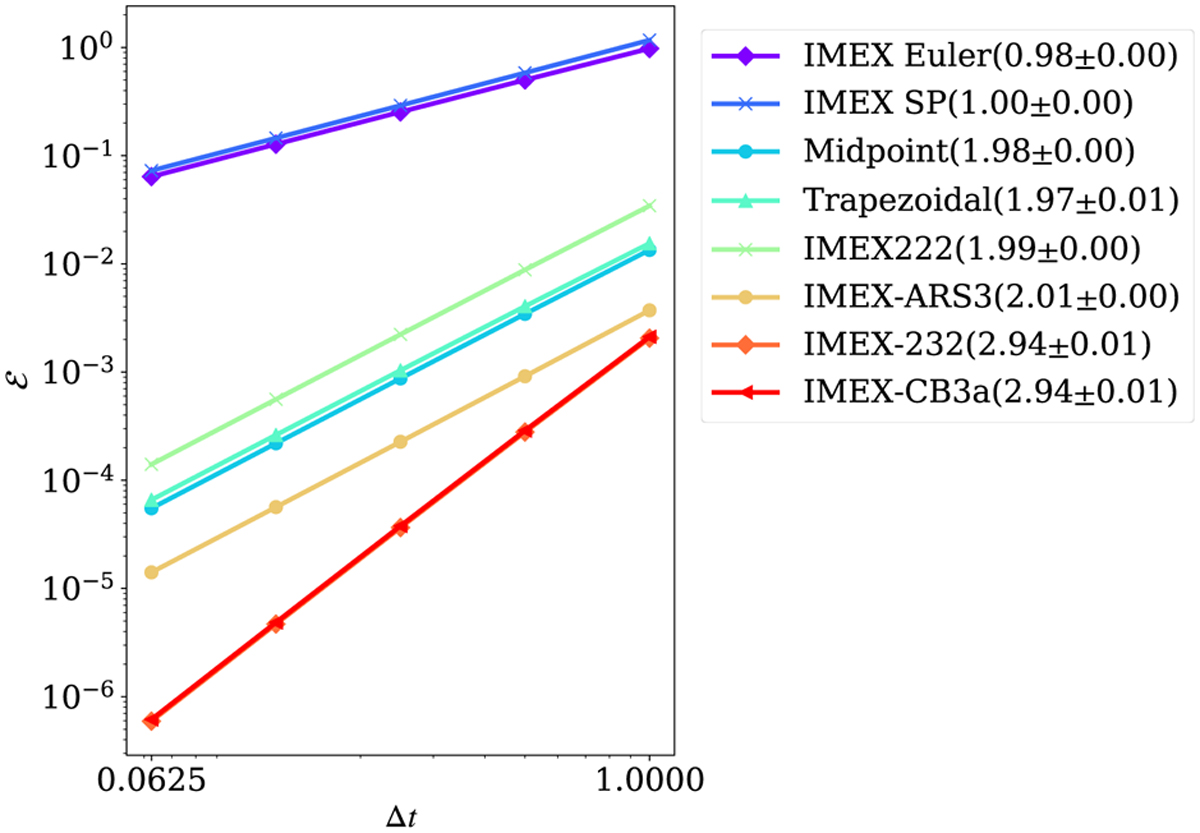

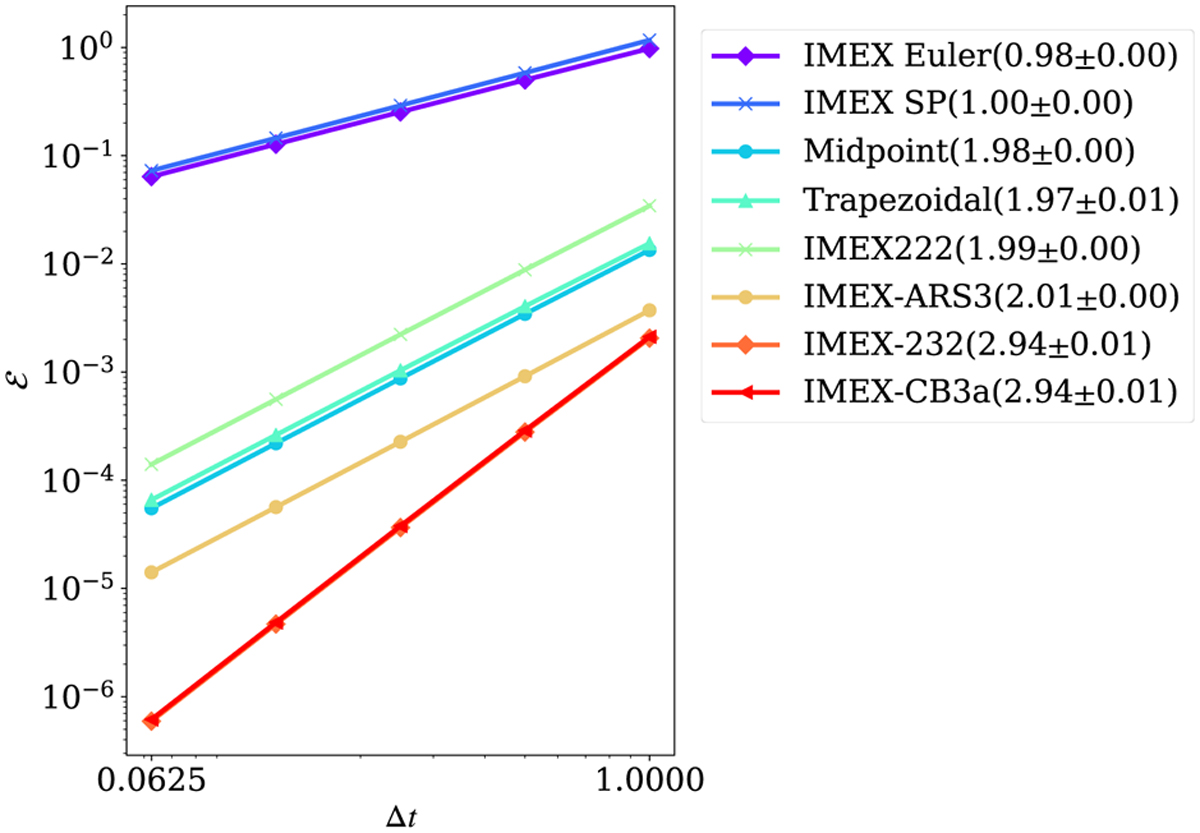

To perform a convergence study in time, we take the same setup as in Fig. 11, but on a uniform grid of 256² cells (corresponding to a cell size of ≈0.01) and with a final time tend = 100 (corresponding to the second panel of Fig. 11). We compare every simulation to a reference solution obtained with a classical fourth-order explicit Runge-Kutta scheme for Δt = 10⁻³. When explicitly solving the reaction-diffusion system corresponding to the initial conditions, the explicit timesteps are Δtd,expl = 0.149 and Δtr,expl = 5.803, associated with the diffusion and the reaction terms, respectively. Hence, the use of the IMEX schemes reduces the computational cost by a factor of Δtr,expl/Δtd,expl ≈ 39 for this particular problem. For the convergence tests themselves, the value of the largest timestep is fixed to unity, followed by four successive timesteps, each smaller by a factor of 2. The resulting convergence graph for Δt ∈ {0.0625, 0.125, 0.25, 0.5, 1} is shown in Fig. 12, showing good correspondence between the theoretical and observed convergence rates.
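The arithmetic behind these numbers can be checked with a few lines of Python. The timestep limits, grid size, and diffusion coefficient are taken from the text. The observation that the quoted diffusive limit matches Δx²/(8 Du) is our own reading and not a statement about how MPI-AMRVAC computes it, and the helper names are illustrative.

```python
import math

DX = 2.5 / 256                    # cell size of the uniform 256^2 grid
D_U = 8e-5                        # larger of the two diffusion coefficients
DT_DIFF, DT_REAC = 0.149, 5.803   # explicit limits quoted in the text

def speedup():
    """Ratio of the reaction-limited to the diffusion-limited timestep."""
    return DT_REAC / DT_DIFF

def timestep_ladder(dt_max=1.0, n=5):
    """Largest timestep followed by successive halvings."""
    return [dt_max / 2 ** i for i in range(n)]

def observed_orders(dts, errors):
    """Pairwise convergence order between consecutive (dt, error) samples."""
    return [math.log(errors[i] / errors[i + 1]) / math.log(dts[i] / dts[i + 1])
            for i in range(len(dts) - 1)]
```

Here `speedup()` evaluates to about 38.9, and `DX**2 / (8 * D_U)` to about 0.149. Applying `observed_orders` to the measured errors of a second-order scheme should give values near 2 on each interval of the timestep ladder.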

We note that in this Gray-Scott problem15, the implicit update from Eq. (30) is actually a problem that can be recast to the following form:

and similarly for υ. For solving such generic elliptic problems on our AMR grid, we exploit the efficient geometric multigrid solver introduced in Teunissen & Keppens (2019).
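As a sketch of this recasting, assume that the implicit update has the form $u_a = u_b + \alpha\Delta t\,F_{\rm im}(u_a)$ and that only the diffusion term is treated implicitly, $F_{\rm im}(u) = D_u\nabla^2 u$. Dividing by $\alpha\Delta t\,D_u$ then gives a Helmholtz-type problem; the notation may differ from the original equation.

$$
u_a - \alpha\Delta t\,D_u\nabla^2 u_a = u_b
\quad\Longleftrightarrow\quad
\left(\frac{1}{\alpha\Delta t\,D_u} - \nabla^2\right) u_a = \frac{u_b}{\alpha\Delta t\,D_u}.
$$

The operator on the left is positive definite for any $\alpha\Delta t\,D_u > 0$, which is the property that makes multigrid iterations effective for this class of problems.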


Fig. 12 Temporal convergence of the IMEX Runge-Kutta schemes in MPI-AMRVAC. The error, computed from the differences between the numerical solutions (u, υ) and the reference solutions (uref, υref) over all N grid points, is plotted as a function of the timestep used to obtain the numerical solutions u and υ using IMEX schemes. The uref and υref are the reference numerical solutions obtained using an explicit scheme with a much smaller timestep.

6 Special (solar) physics modules

In recent years, MPI-AMRVAC has been actively applied to solar physics, where 3D MHD simulations are standard, although they may meet very particular challenges. Even when restricting attention to the solar atmosphere (photosphere to corona), handling the extreme variations in thermodynamic quantities (density, pressure, and temperature) in combination with strong magnetic field concentrations already implies large differences in plasma beta. Moreover, a proper handling of the chromospheric layers, along with the rapid temperature rise in a narrow transition region, really forces one to use advanced radiative-MHD treatments (accounting for frequency-dependent, nonlocal couplings between radiation and matter, true non-local-thermodynamic-equilibrium physics affecting spectral line emission or absorption, etc.). Thus far, all these aspects are only handled approximately; for example, the recently added plasma-neutral src/twofl module (Popescu Braileanu & Keppens 2022) can already incorporate the intrinsically varying degree of ionization throughout the atmosphere. To deal with large variations in plasma beta, we provided options to split off a time-independent (not necessarily potential) magnetic field B0 in up to resistive MHD settings (Xia et al. 2018), meanwhile generalized (Yadav et al. 2022) to split off entire 3D magneto-static force-balanced states −∇p0 + ρ0g + J0 × B0 = 0. For the MHD and two-fluid modules, we added an option to solve the internal energy equation instead of the total energy equation, to avoid negative pressure when plasma beta is extremely small.

A specific development relates to numerically handling energy and mass fluxes across the sharp transition region variation, which under typical solar conditions and traditional Spitzer-type thermal conductivities can never be resolved accurately in multidimensional settings. Suitably modifying the thermal conduction and radiative loss prescriptions can preserve physically correct total radiative losses and heating aspects (Johnston et al. 2020). This led to the transition-region-adaptive-conduction (TRAC) approaches (Johnston et al. 2020, 2021; Iijima & Imada 2021; Zhou et al. 2021), with, for example, Zhou et al. (2021) introducing various flavors where the field line tracing functionality (from Sect. 7.2) was used to extend the originally 1D hydro incarnations to multidimensional MHD settings. Meanwhile, truly local variants (Iijima & Imada 2021; Johnston et al. 2021) emerged, and MPI-AMRVAC 3.0 provides multiple options16 collected in src/mhd/mod_trac.t. In practice, up to 7 variants of the TRAC method can be distinguished in higher dimensional (> 1D) setups, including the use of a globally fixed cut-off temperature, the (masked and unmasked) multidimensional TRACL and TRACB methods introduced in Zhou et al. (2021), or the local fix according to Iijima & Imada (2021).

Various modules are available that implement frequently recurring ingredients in solar applications. These are, for example: a potential-field-source-surface (PFSS) solution on a 3D spherical grid that extrapolates magnetic fields from a given bottom magnetogram (see src/physics/mod_pfss.t as evaluated in Porth et al. 2014), a method for extrapolating a magnetogram into a linear force-free field in a 3D Cartesian box (see src/physics/mod_lfff.t), or a modular implementation of the frequently employed 3D Titov-Démoulin (Titov & Démoulin 1999) analytic flux rope model (see src/physics/mod_tdfluxrope.t), or the functionality to perform nonlinear force-free field extrapolations from vector magnetograms (see src/physics/mod_magnetofriction.t as evaluated in Guo et al. 2016b,c).

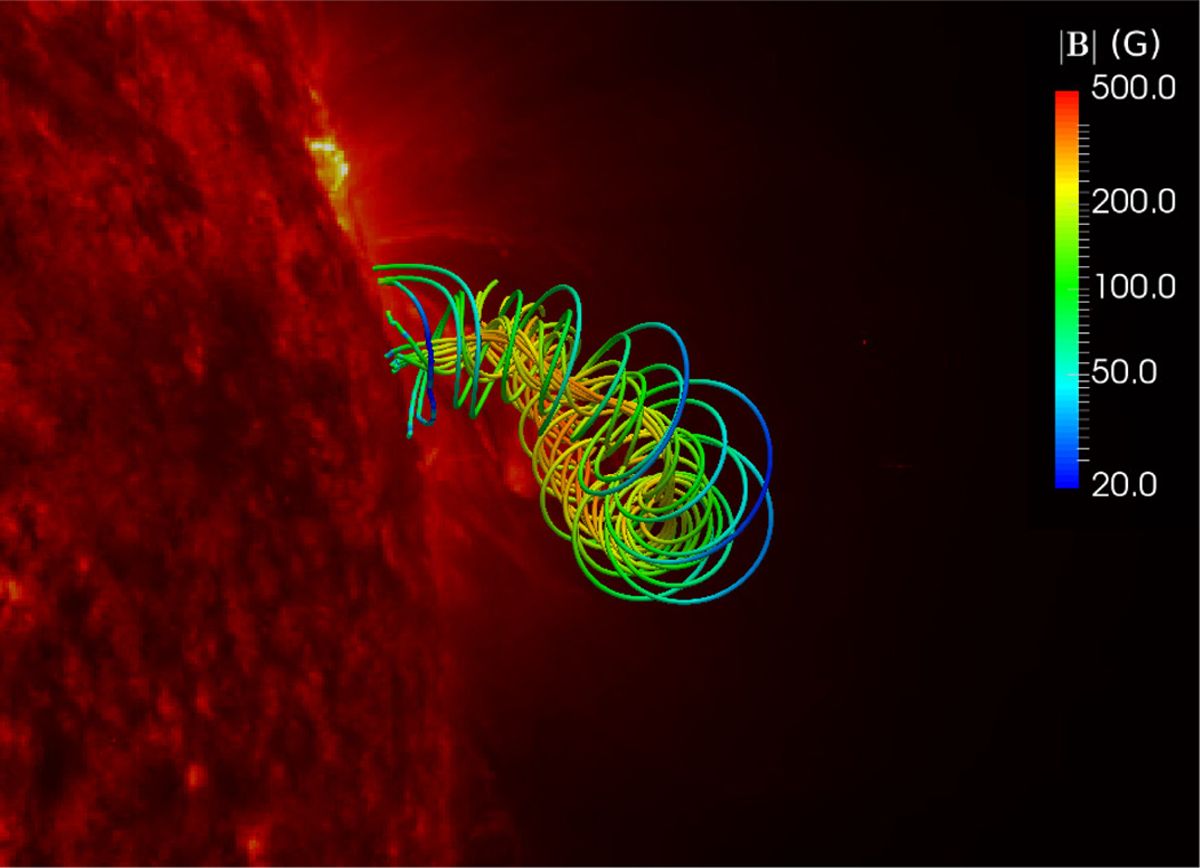

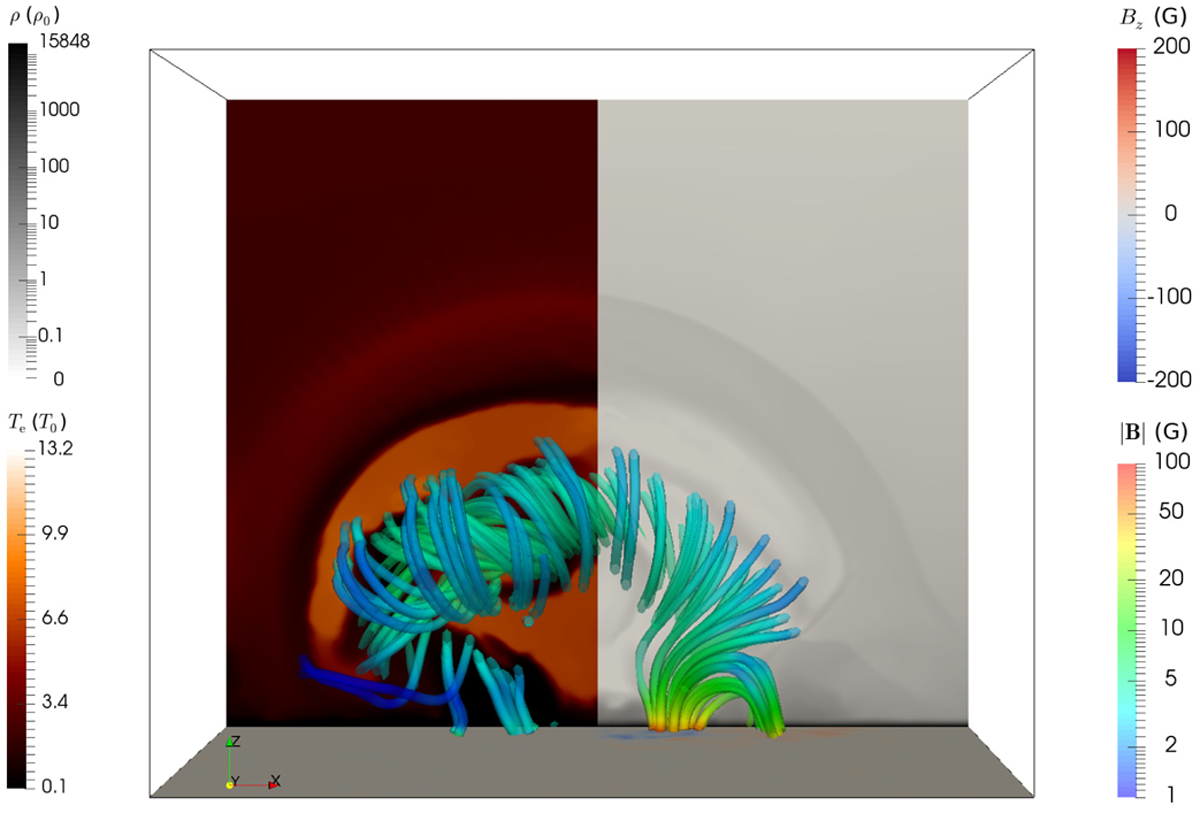

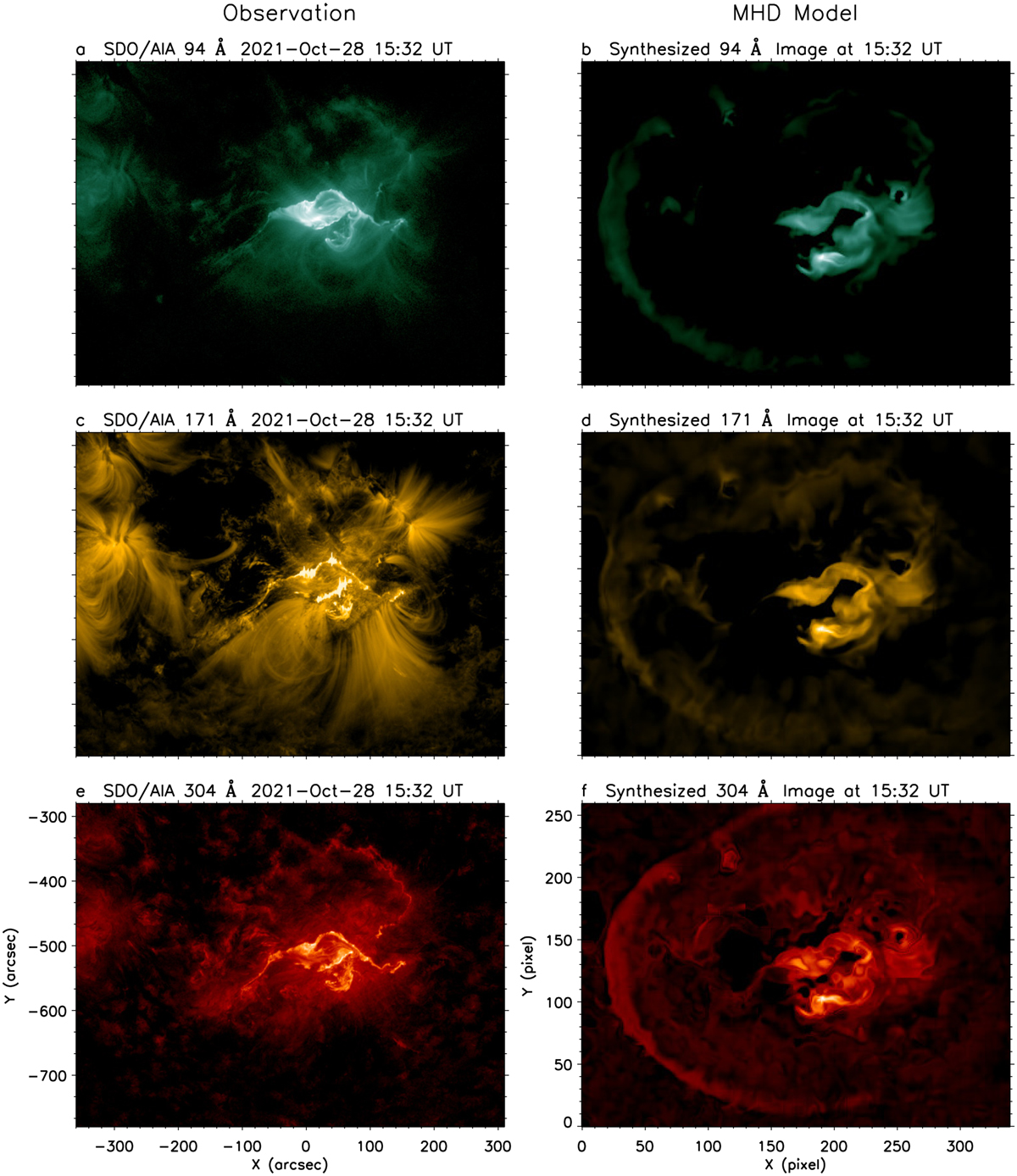

In what follows, we demonstrate more recently added solar-relevant functionality, namely the addition of a time-dependent magneto-frictional (MF) module in Sect. 6.1, the possibility to insert flux ropes using the regularized Biot-Savart laws (RBSLs) from Titov et al. (2018) in Sect. 6.2, and the way to synthesize 3D MHD data to actual extreme ultraviolet (EUV) images in a simple on-the-fly fashion in Sect. 6.3.

6.1 Magneto-frictional module

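The MF method is closely related to the MHD relaxation process (e.g., Chodura & Schlueter 1981). It was proposed by Yang et al. (1986) and considers both the momentum equation and the magnetic induction equation:

$$\rho\left(\frac{\partial \mathbf{v}}{\partial t} + \mathbf{v}\cdot\nabla\mathbf{v}\right) = \mathbf{J}\times\mathbf{B} - \nabla p + \rho\mathbf{g} - \nu\mathbf{v}, \tag{35}$$

$$\frac{\partial \mathbf{B}}{\partial t} = \nabla\times\left(\mathbf{v}\times\mathbf{B}\right), \tag{36}$$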

where ρ is the density, v the velocity, J = ∇ × B/μ0 the electric current density, B the magnetic field, p the pressure, g the gravitational field, ν the friction coefficient, and μ0 the vacuum permeability. To construct a steady-state force-free magnetic field configuration, the inertial, pressure-gradient, and gravitational forces are omitted in Eq. (35) and one only uses the simplified momentum equation to give the MF velocity:

$$\mathbf{v} = \frac{1}{\nu}\,\mathbf{J}\times\mathbf{B}. \tag{37}$$

Equations (36) and (37) are then combined together to relax an initially finite-force magnetic field to a force-free state where J × B = 0 with appropriate boundary conditions.

The MF method has been adopted to derive force-free magnetic fields in 3D domains (e.g., Roumeliotis 1996; Valori et al. 2005; Guo et al. 2016b,c). It is commonly regarded as an iteration process to relax an initial magnetic field that does not need to have an obvious physical meaning. For example, if the initial state is provided by an extrapolated potential field together with an observed vector magnetic field at the bottom boundary, the horizontal field components can jump discontinuously there initially, and locally are probably not in a divergence-free condition. The MF method can still relax this unphysical initial state to an almost force-free and divergence-free state (the degree of force-freeness and its solenoidal character can be quantified during the iterates and monitored). On the other hand, the MF method could also be used to actually mimic a time-dependent process (e.g., Yeates et al. 2008; Cheung & DeRosa 2012), although there are caveats about using the MF method in this way (Low 2013). The advantage of such a time-dependent MF method is that it consumes much less computational resources than a full MHD simulation to cover a long-term quasi-static evolution of nearly force-free magnetic fields. This allows us to simulate the global solar coronal magnetic field over a very long period, for instance, several months or even years.
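One common way to quantify the degree of force-freeness is the current-weighted average of the sine of the angle between J and B, which vanishes for an exactly force-free field. The Python sketch below evaluates it for lists of per-cell vectors. Whether MPI-AMRVAC monitors exactly this quantity is an assumption, and the function names are illustrative.

```python
import math

def cross(a, b):
    """Cross product of two 3-vectors given as tuples."""
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def norm(a):
    """Euclidean length of a 3-vector."""
    return math.sqrt(sum(x * x for x in a))

def sigma_j(J, B):
    """Current-weighted mean of sin(angle between J and B) over all cells.

    J and B are equally long sequences of 3-vectors, one per cell.
    Cells with B = 0 exert no Lorentz force and are skipped in the numerator.
    """
    num = sum(norm(cross(j, b)) / norm(b)
              for j, b in zip(J, B) if norm(b) > 0.0)
    den = sum(norm(j) for j in J)
    return num / den if den > 0.0 else 0.0
```

A value of zero means that J is everywhere parallel to B, while a value of one means that the current is everywhere perpendicular to the field. Tracking this number over the iterations gives a simple convergence monitor for the relaxation.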

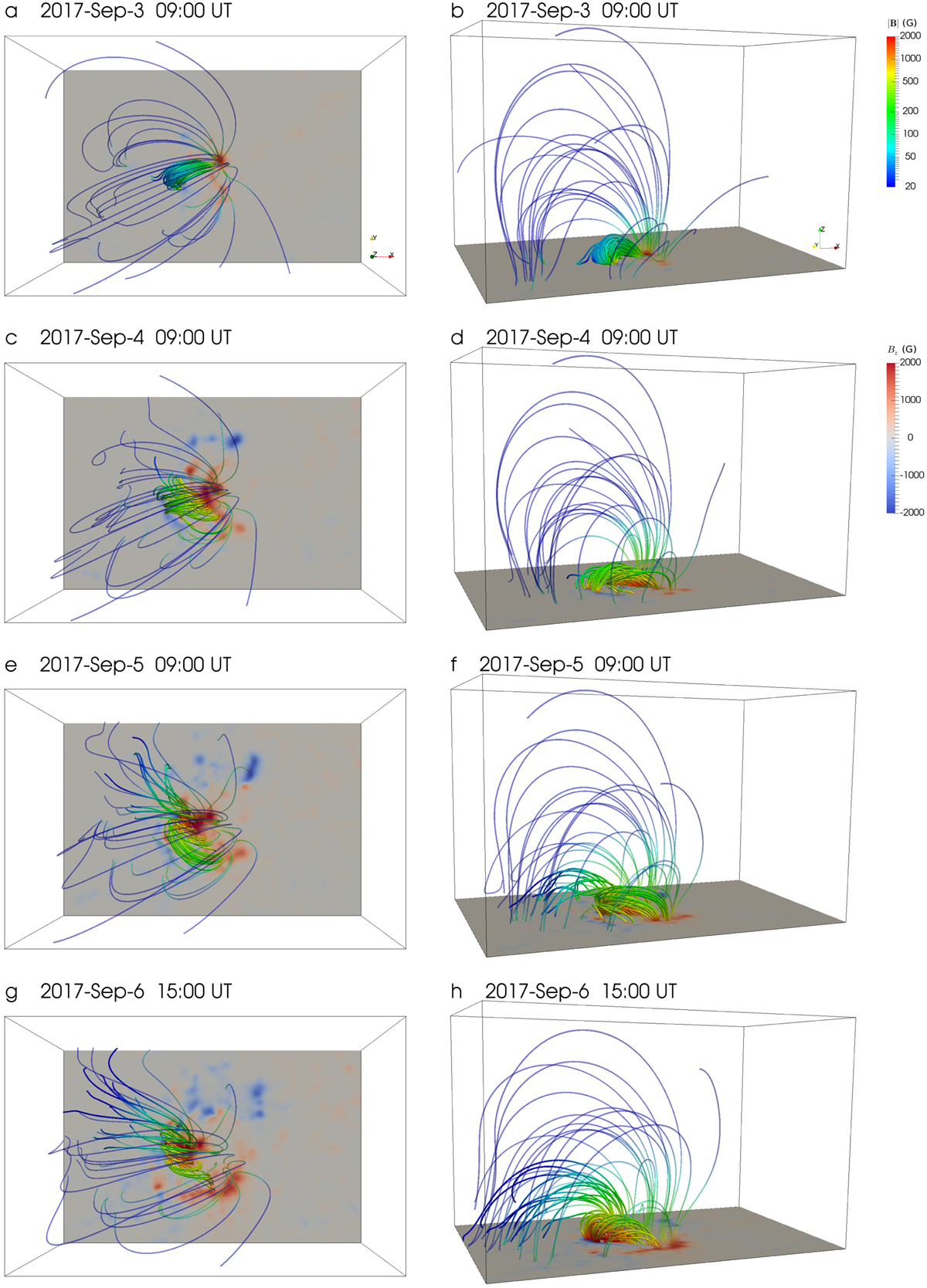

We implemented a new MF module (src/mf), parallel to the existing physics modules like MHD, in MPI-AMRVAC. This module can be used in 2D and 3D, and in different geometries, fully compatible with (possibly stretched) block-AMR. We set the friction coefficient ν = ν0 B², where ν0 = 10⁻¹⁵ s cm⁻² is the default value. The magnitude of the MF velocity is smoothly truncated to an upper limit υmax = 30 km s⁻¹ by default, to avoid extremely large numerical speeds near magnetic null points (Pomoell et al. 2019). Both ν0 and υmax are set, in dimensional units, through the input parameters mf_nu and mf_vmax. We allow a smooth decay of the MF velocity toward the physical boundaries to match line-tied quasi-static boundaries (Cheung & DeRosa 2012). In contrast to the previous MF module (still available as src/physics/mod_magnetofriction.t) used in Guo et al. (2016c), this new MF module in src/mf includes the time-dependent MF method and now fully utilizes the framework for data I/O, with many more options for numerical schemes. In particular, the constrained transport scheme (Balsara & Spicer 1999), compatibly implemented with the staggered AMR mesh (Olivares et al. 2019), is recommended for solving the induction equation, Eq. (36), when using this new MF module. It can then enforce the divergence of the magnetic field to be zero to near machine precision.
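The pointwise evaluation of the MF velocity with ν = ν0 B² and a smooth speed limit can be sketched in Python as follows. The tanh limiter is an assumed form of the smooth truncation, since the text does not specify the function used. The defaults are placeholders in arbitrary consistent units rather than the dimensional defaults of mf_nu and mf_vmax, and the function name is illustrative.

```python
import math

def cross(a, b):
    """Cross product of two 3-vectors given as tuples."""
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def mf_velocity(J, B, nu0=1.0, vmax=30.0):
    """Magneto-frictional velocity v = J x B / (nu0 * |B|^2), speed-limited.

    The speed is mapped through vmax * tanh(|v| / vmax), an assumed limiter
    that leaves slow flows unchanged and saturates fast ones at vmax.
    """
    b2 = sum(x * x for x in B)
    if b2 == 0.0:
        return (0.0, 0.0, 0.0)   # no Lorentz force at an exact null point
    v = tuple(c / (nu0 * b2) for c in cross(J, B))
    speed = math.sqrt(sum(x * x for x in v))
    if speed == 0.0:
        return v                  # force-free cell: J parallel to B
    scale = vmax * math.tanh(speed / vmax) / speed
    return tuple(scale * x for x in v)
```

Because J × B scales with |B| while ν scales with B², the unlimited speed grows like |J|/(ν0 |B|) as the field strength drops, which is why a cap is needed close to null points.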

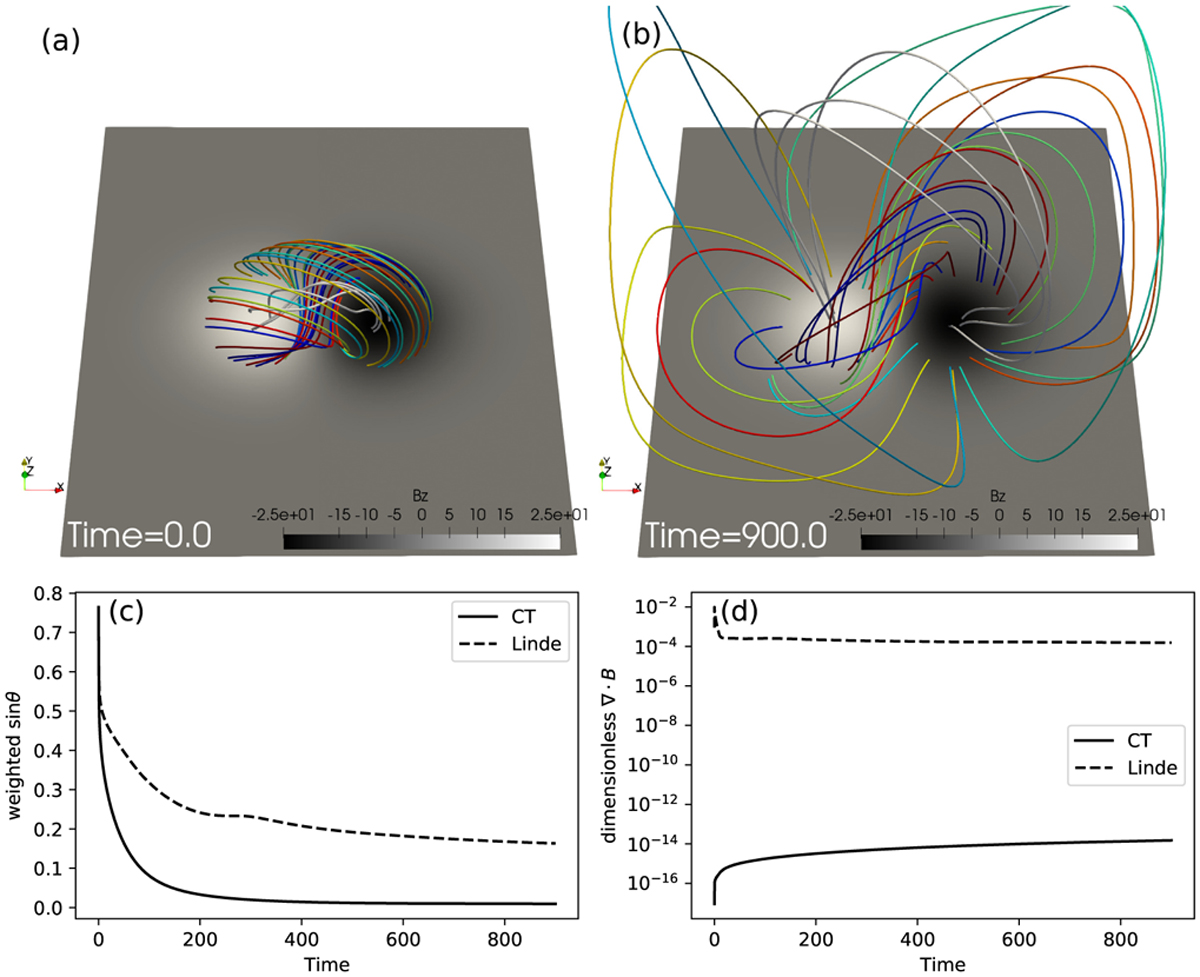

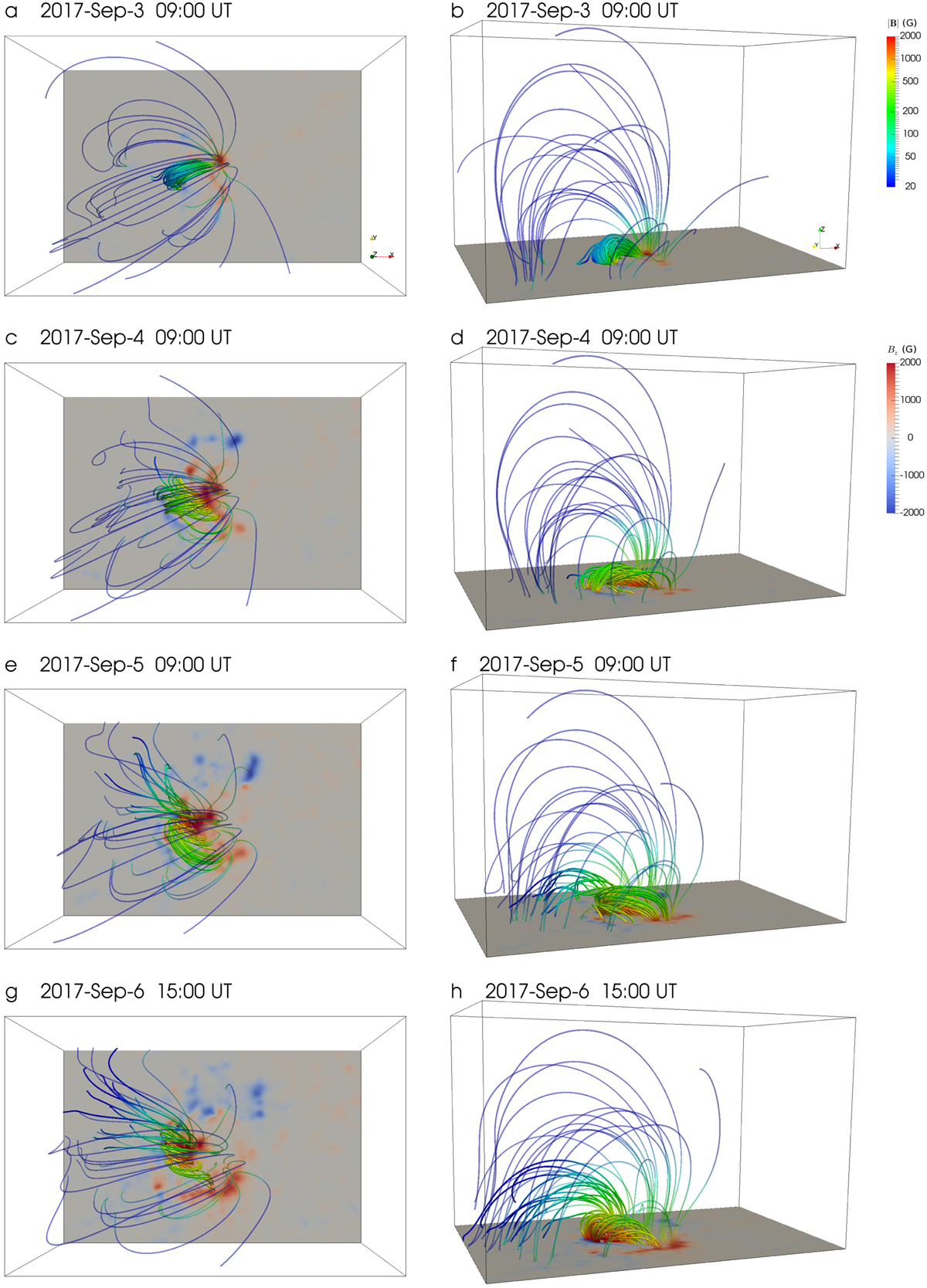

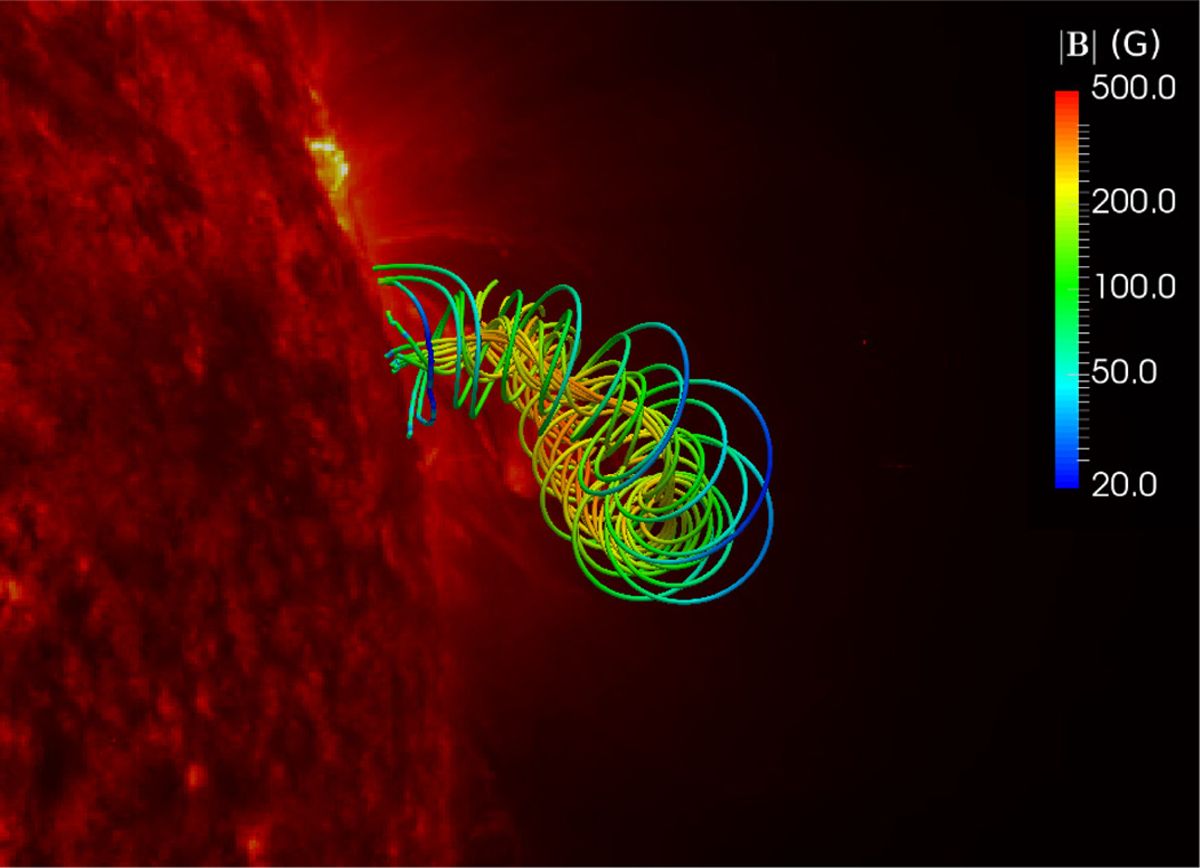

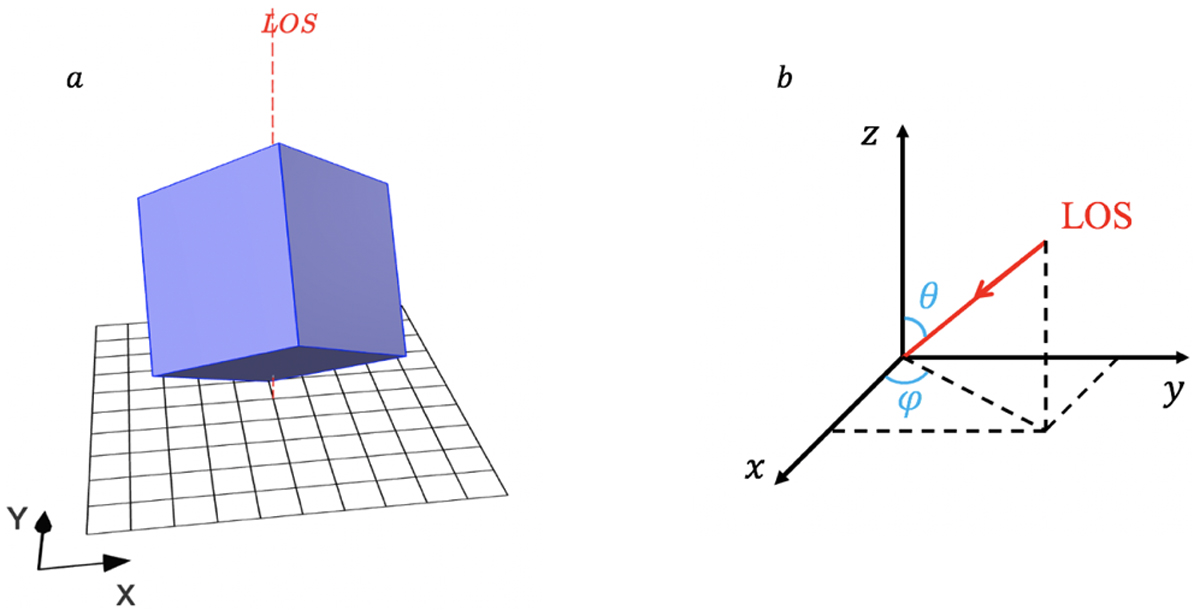

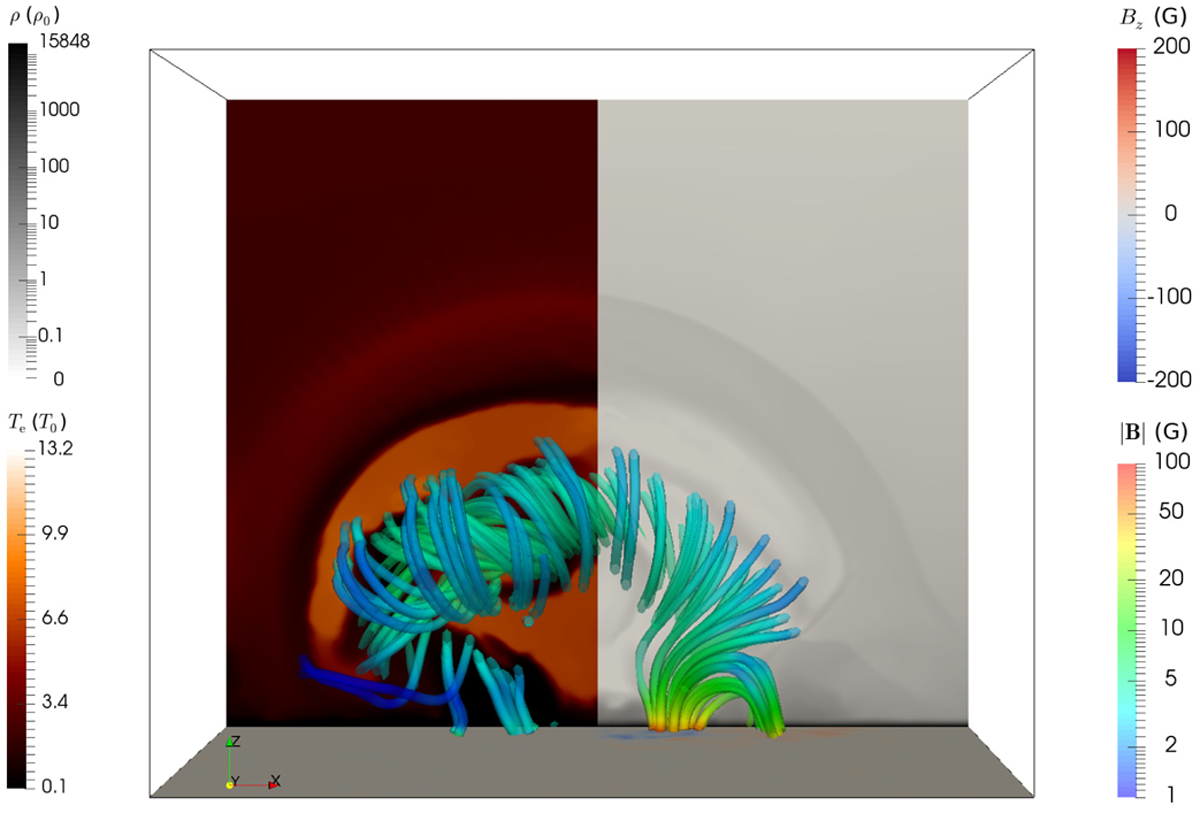

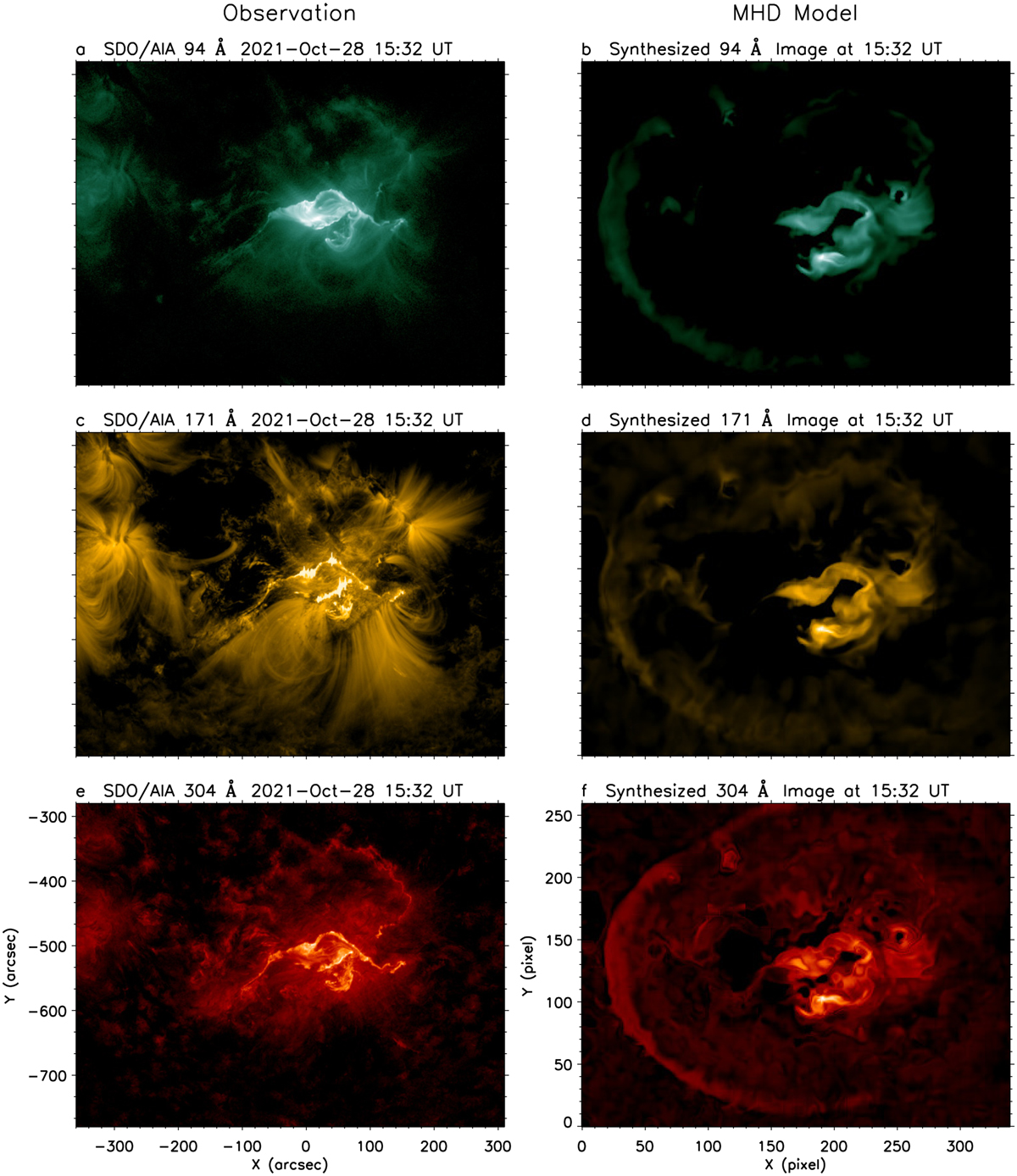

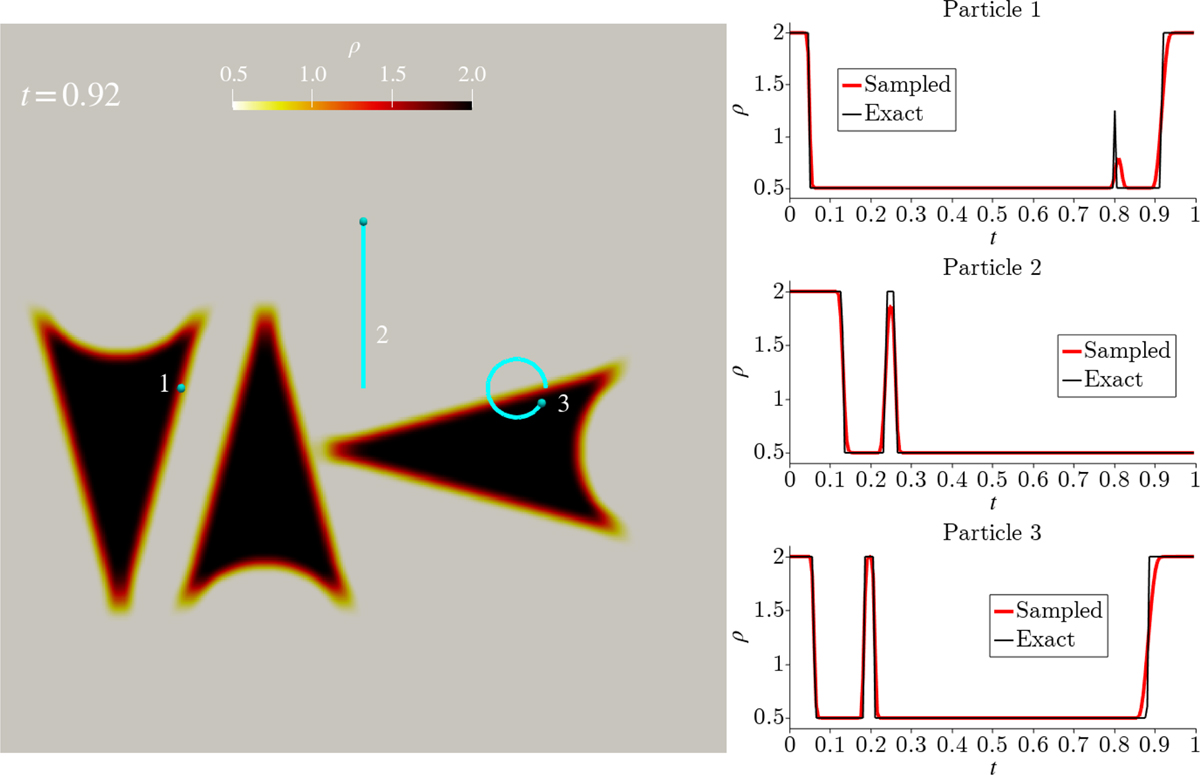

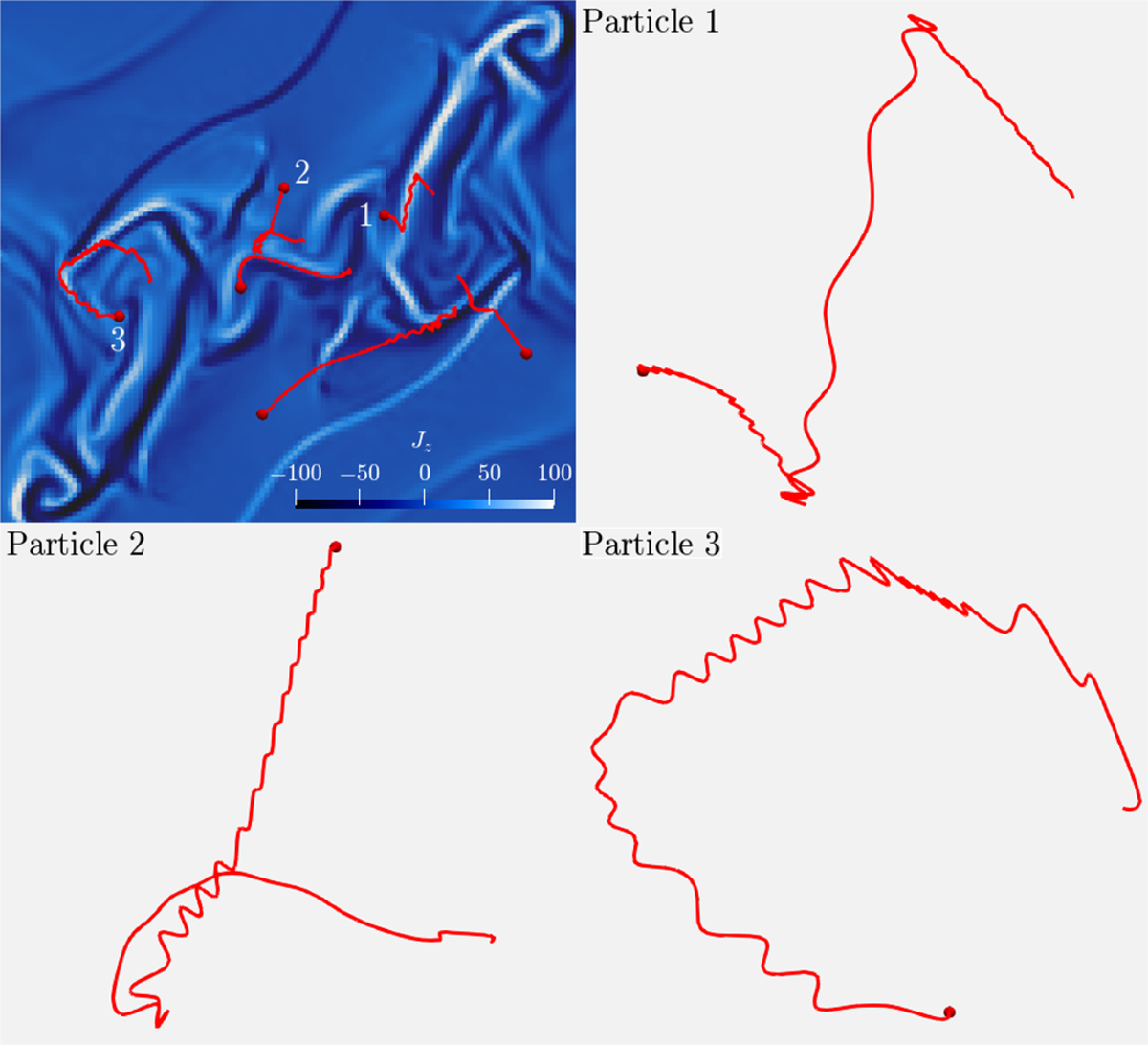

To validate the new MF module, we set up a test17, starting from a non-force-free bipolar twisted magnetic field (Mackay & van Ballegooijen 2001) to verify that the MF module can efficiently relax it to a force-free state. The magnetic field is given by